Web scraping is the process of automatically extracting publicly available information from websites and converting it into structured data.

In plain language:

Scraping turns the web into a spreadsheet.

Why does this matter? Public web data is now one of the largest and fastest-growing sources of business intelligence.

But this isn’t just a theoretical guide.

Includes exclusive 2025 Data:

We analyzed billions of scraping tasks to show you exactly what data is being collected and the #1 cause of scraping failures, which accounts for roughly 35% of recorded errors.

Let’s dive in.

How Does Web Scraping Work

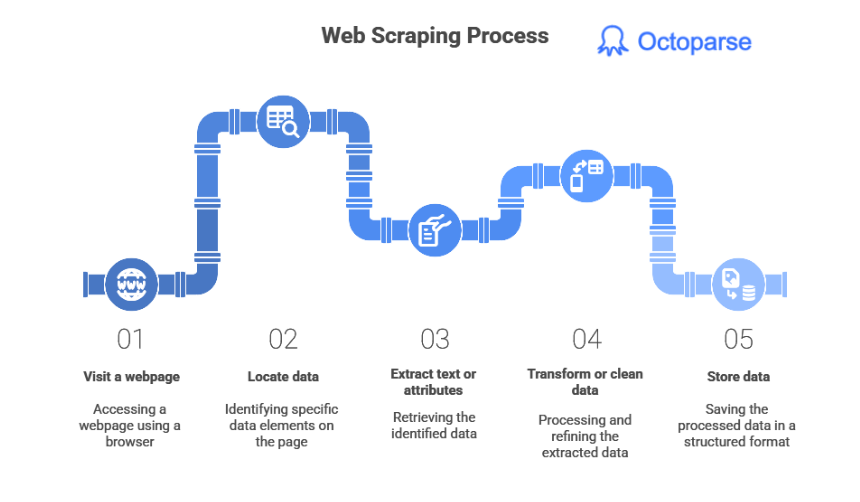

At a high level, web scraping consists of five steps:

- Visit a webpage (like a browser)

- Locate data (product titles, prices, contacts, etc.)

- Extract text or attributes

- Transform or clean data

- Store data in a structured format

Every web scraper — from a simple browser extension to a distributed crawler hitting 500,000 pages an hour — follows the same conceptual pipeline:

1. Fetch a webpage

The scraper behaves like a browser or HTTP client.

Request sent → Page HTML returned.

2. Parse the document (DOM)

HTML becomes a structured tree.

Elements like <div>, <span>, <li> become selectable nodes.

3. Select target elements

Using:

- CSS selectors

- XPath

- Pattern matching

- Attribute filtering

Example: //div[@class='product-title'] (XPath) or .price span (CSS selector)
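As a rough illustration of the two selector examples above, the sketch below runs them with Python's standard-library xml.etree.ElementTree. That module supports only a limited XPath subset and needs well-formed markup, so treat this as a sketch; real pages usually call for a more tolerant parser. The HTML snippet and its class names are made up for this example.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed snippet standing in for a downloaded page.
PAGE = """
<html><body>
  <div class="product-title">Blue Kettle</div>
  <div class="price"><span>19.99</span></div>
  <div class="product-title">Red Toaster</div>
  <div class="price"><span>34.50</span></div>
</body></html>
"""

root = ET.fromstring(PAGE)

# XPath-style query: every <div> whose class attribute is exactly "product-title".
titles = [el.text for el in root.findall(".//div[@class='product-title']")]

# Rough equivalent of the CSS selector ".price span":
# <span> elements nested anywhere under a <div class="price">.
prices = [el.text for el in root.findall(".//div[@class='price']//span")]

print(titles)
print(prices)
```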

4. Extract values

Text, numbers, links, attributes (src, href, alt), metadata, or JSON embedded in the page.
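To make the extraction step more concrete, here is a minimal sketch that pulls embedded JSON and a link attribute out of a page using only the standard library. The page fragment, the product fields, and the regular expressions are assumptions for illustration; regexes are brittle on real HTML, so a proper parser is usually the safer choice.

```python
import json
import re

# Hypothetical page fragment with a JSON-LD block, a common place for embedded data.
PAGE = """
<html><head>
<script type="application/ld+json">
{"@type": "Product", "name": "Blue Kettle", "offers": {"price": "19.99", "priceCurrency": "USD"}}
</script>
</head><body><a href="/kettle" title="Blue Kettle">View</a></body></html>
"""

# Pull the raw JSON text out of the script tag, then decode it.
match = re.search(
    r'<script type="application/ld\+json">(.*?)</script>', PAGE, flags=re.DOTALL
)
product = json.loads(match.group(1)) if match else {}

# Attribute extraction: grab the href value of the first link.
href_match = re.search(r'<a\s+[^>]*href="([^"]*)"', PAGE)
href = href_match.group(1) if href_match else None

print(product.get("name"), product.get("offers", {}).get("price"), href)
```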

5. Format the output

Into:

- CSV

- Excel

- JSON

- SQL-ready tables

- Direct-to-API pipelines
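Two of the output formats listed above, CSV and JSON, can be produced with Python's standard library alone. The records and field names below are hypothetical; the sketch writes to an in-memory buffer, and a real job would write to a file or database instead.

```python
import csv
import io
import json

# Hypothetical extracted records.
rows = [
    {"title": "Blue Kettle", "price": 19.99},
    {"title": "Red Toaster", "price": 34.50},
]

def to_csv(records):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["title", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()

def to_json(records):
    """Serialize the same records to pretty-printed JSON text."""
    return json.dumps(records, indent=2)

csv_text = to_csv(rows)
json_text = to_json(rows)
print(csv_text)
print(json_text)
```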

6. (Optional) Automate or scale

Scheduling, pagination, proxy rotation, anti-bot handling, error retries.
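Error retries are often the first piece of automation added. The sketch below shows one common approach, exponential backoff, under the assumption that failures surface as ConnectionError or TimeoutError. The flaky_fetch function is a stand-in for a real HTTP call, and the delays are kept tiny so the example runs quickly.

```python
import time

def fetch_with_retries(fetch, url, max_attempts=3, base_delay=0.01,
                       retry_on=(ConnectionError, TimeoutError)):
    """Call fetch(url), retrying on the given exceptions with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller see the error
            # Wait base_delay, then 2x, then 4x, ... before the next attempt.
            time.sleep(base_delay * 2 ** (attempt - 1))

# Hypothetical flaky fetcher that fails twice, then succeeds.
calls = {"count": 0}

def flaky_fetch(url):
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return f"<html>page for {url}</html>"

html = fetch_with_retries(flaky_fetch, "https://example.com/page/1")
print(calls["count"], html)
```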

Web scraping looks simple on the surface, but the difference between hobby-level and enterprise-level scraping is massive. That’s where we’ll go deeper.

What Are The Most Common Uses of Web Scraping

Every industry runs on signals that do not exist in APIs:

- E-commerce: price changes, stock status, discount validity

- Travel: ticket volatility, fare classes, availability windows

- Real estate: listing history, occupancy patterns

- Jobs (https://www.octoparse.com/template/categories/jobs) & HR: job postings, skill demand, talent gaps

- AI/ML: training data, labeled samples, document structures

- Finance: sentiment, corporate disclosures, market intelligence

The internet did not standardize its data.

Web scraping is the universal adapter.

This is why:

- Governments use scraping to monitor compliance

- Researchers use scraping to track misinformation

- Retailers use scraping to benchmark competitors

- Brands use scraping to track successful reselling

- AI companies use scraping to build datasets

Scraping exists because the web is the biggest database on Earth, but not a structured one.

How Many Types of Web Scrapers Are There?

TL;DR:

There are four main categories:

- No-Code Web Scrapers: Tools like Octoparse let you extract data with clicks — no coding.

- Browser Extensions: Lightweight scrapers like Chat4Data for single-page or small-volume tasks.

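- Programming Libraries: Python options such as BeautifulSoup, Scrapy, and Selenium, best suited to custom pipelines.

- Enterprise Web Scraping Services: Managed platforms such as Bright Data and Oxylabs for large-scale, mission-critical pipelines.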

What About APIs?

What they are: Official data access channels that websites provide specifically for programmatic access to their data.

Best for:

- Any situation where the website offers an API

- Production systems requiring stability and legal clarity

- Long-term data collection projects

- Situations where you need official support

How they work: You register for an API key, read the documentation, send structured requests to API endpoints, and receive clean data in JSON or XML format.
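The request-and-response cycle described above might look like the following sketch. The endpoint, query parameters, bearer-token header, and response shape are all hypothetical; every real API documents its own. Nothing is sent over the network here: the example only builds the request and parses a sample body.

```python
import json
import urllib.parse
import urllib.request

API_KEY = "YOUR_API_KEY"  # placeholder, issued when you register
BASE_URL = "https://api.example.com/v1/products"  # hypothetical endpoint

def build_request(query, page=1):
    """Build a GET request carrying the API key in an Authorization header."""
    params = urllib.parse.urlencode({"q": query, "page": page})
    return urllib.request.Request(
        f"{BASE_URL}?{params}",
        headers={"Authorization": f"Bearer {API_KEY}", "Accept": "application/json"},
    )

def parse_response(body):
    """Turn a JSON response body into (name, price) tuples."""
    payload = json.loads(body)
    return [(item["name"], item["price"]) for item in payload["items"]]

request = build_request("kettle")
# Sending it would look like: urllib.request.urlopen(request).read()
sample_body = '{"items": [{"name": "Blue Kettle", "price": 19.99}]}'
print(request.full_url)
print(parse_response(sample_body))
```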

Pros:

- Legally clear and officially supported

- Stable data format that rarely changes

- Better performance (designed for data access)

- Clear rate limits and usage terms

- No risk of being blocked as a “bot”

- Official documentation and support

Cons:

- May cost money (freemium tiers, then paid plans)

- Rate limits may be restrictive (for example, 100 requests/hour)

- Doesn’t always provide all the data you need

- Requires approval process for some APIs

- May have strict usage restrictions

Common examples:

- Twitter API: Access tweets, user profiles, trends

- Google Maps API: Location data, reviews, business information

- LinkedIn API: Professional profiles, company data (very restricted)

- Amazon Product Advertising API: Product details, prices, reviews

When APIs aren’t enough: Many websites offer APIs but limit what data you can access or charge premium prices.

For example, LinkedIn’s official APIs expose only a small subset of profile fields (typically basic identity and current role) to most integrations and restrict storage, exporting, and combining of that data, even though the website shows far more detailed history, skills, and activity for the same profiles.

For more on APIs in web scraping, check our article on the best web scraping APIs for real-time data.

1. No-Code Scrapers (Like Octoparse)

What they are: Visual tools where you point and click to select data. No programming needed.

Best for:

- When no API exists or the API is too limited/expensive

- Marketers, analysts, and researchers without coding skills

- Getting data quickly (hours, not weeks)

- Teams where multiple people need to run scrapers

- Situations where pre-built templates exist for popular websites

How they work: You click on the data you want (like prices or phone numbers), the tool figures out how to extract it, and you export to Excel or CSV.

Pros:

- Start scraping immediately without coding

- Built-in features for common challenges (pagination, logins, CAPTCHAs)

- Cloud scheduling (run scrapers automatically every day)

- Templates for popular sites such as Amazon, LinkedIn (https://www.octoparse.com/template/collection/linkedin-scraper), and Yellow Pages (https://www.octoparse.com/template/collection/yellow-pages-scraper)

2. Browser Extensions

What they are: Small add-ons for Chrome or Firefox that scrape data from pages you’re viewing.

Best for:

- When no API exists and you only need data once

- Quick, one-time data collection (50-200 items)

- Testing if data is scrapable before building a full scraper

- Personal research projects

- People who need results right now without setup

How they work: Install the extension, click it while viewing a page, select what to extract, copy to clipboard or download as CSV.

Pros:

- Free or very cheap

- No account or complex setup required

- Works instantly

Cons:

- Limited to small amounts of data

- No automation or scheduling

- Most can’t handle anti-bot systems

Example tools: Chat4Data, Web Scraper (Chrome extension), Instant Data Scraper.

3. Programming Libraries

What they are: Code libraries in Python, JavaScript, or other languages that developers use to build custom scrapers.

Best for:

- When APIs don’t exist and you need custom extraction logic

- Developers comfortable writing and maintaining code

- Projects requiring complex transformations during extraction

- Integration with existing software systems

- Situations where you need complete control

How they work: You write scripts that fetch pages, parse HTML, select elements, extract data, and save results—all in code.

Pros:

- Complete control over every aspect of scraping

- Free and open source

- Can handle any level of complexity with enough effort

- Integrates directly with data analysis tools (pandas, databases)

- Can combine API calls with web scraping in the same script

Cons:

- Requires programming knowledge (Python, JavaScript, etc.)

- Time-intensive to build and maintain

- You handle all anti-bot issues yourself

- No visual interface or templates

Popular options:

- Python: BeautifulSoup, Scrapy, Selenium, Playwright

- JavaScript: Puppeteer, Cheerio

- Ruby: Nokogiri
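To give a feel for the fetch, parse, select, and extract flow described under "How they work", here is a minimal sketch using only Python's standard library rather than any of the libraries listed above. The class names product-title and price and the sample markup are assumptions. The parser is deliberately naive: it stops capturing at the first closing tag, which is fine for flat markup like this but not for deeply nested elements.

```python
import urllib.request
from html.parser import HTMLParser

def fetch(url):
    """Download a page and return its HTML as text (not called in this sketch)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")

class ProductParser(HTMLParser):
    """Collect text from elements whose class maps to a known field."""
    FIELDS = {"product-title": "title", "price": "price"}  # hypothetical class names

    def __init__(self):
        super().__init__()
        self._field = None
        self.rows = []

    def handle_starttag(self, tag, attrs):
        self._field = self.FIELDS.get(dict(attrs).get("class"))
        if self._field == "title":
            self.rows.append({"title": "", "price": ""})  # a title starts a new row

    def handle_data(self, data):
        if self._field and self.rows:
            self.rows[-1][self._field] += data.strip()

    def handle_endtag(self, tag):
        self._field = None

# Sample markup with unclosed <li> tags, which html.parser tolerates.
SAMPLE_HTML = """
<ul>
  <li><h2 class="product-title">Blue Kettle</h2><span class="price">$19.99</span>
  <li><h2 class="product-title">Red Toaster</h2><span class="price">$34.50</span>
</ul>
"""

parser = ProductParser()
parser.feed(SAMPLE_HTML)  # in a real run: parser.feed(fetch(some_url))
rows = parser.rows
print(rows)
```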

4. Enterprise Web Scraping Services

What they are: Professional-grade services that handle massive scraping operations with guaranteed reliability.

Best for:

- When APIs don’t exist or are prohibitively expensive at scale

- Companies scraping millions of pages monthly

- Mission-critical data pipelines (if scraping stops, business suffers)

- Organizations needing global proxy networks

- Teams with significant budgets ($5,000-$50,000+/month)

What they include:

- Premium proxy networks (residential IPs that look like real users)

- Professional support teams

- Legal and compliance assistance

- Infrastructure that handles massive scale

- Service level agreements (guaranteed uptime)

Pros:

- Handles scale most tools can’t reach

- Professional support when things break

- Advanced anti-bot capabilities

Cons:

- Expensive (often $500-$5,000+ monthly minimum)

- Overkill for small projects

- Still requires technical knowledge

Example platforms: Octoparse, Bright Data, Oxylabs

How to Choose the Right Web Scraping Method

Start here: Does an official API exist?

- ✅ Yes, and it provides the data you need: Use the API (even if it costs money)

- ✅ Yes, but it’s too expensive or limited: Evaluate if web scraping is worth the trade-offs

- ❌ No API exists: Move to web scraping options below

Can you combine methods?

Yes! Many projects use APIs for some data and web scraping for additional information the API doesn’t provide.

Example: Use Twitter’s API to get tweet text and metadata, then scrape user profile pages for additional bio information the API doesn’t include.
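In code, combining the two sources usually comes down to joining records on a shared key. The sketch below assumes the API returned tweet records keyed by a user field and that a separate scraping step produced a bio per user. The field names and sample data are hypothetical.

```python
# Hypothetical records returned by an API call.
api_records = [
    {"user": "alice", "tweet": "Shipping a new release today"},
    {"user": "bob", "tweet": "Conference notes are up"},
]

# Hypothetical bios collected by scraping profile pages.
scraped_bios = {"alice": "Data engineer"}

def merge_sources(records, bios):
    """Attach the scraped bio to each API record; None when no bio was found."""
    return [{**record, "bio": bios.get(record["user"])} for record in records]

combined = merge_sources(api_records, scraped_bios)
print(combined)
```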

Challenges in Web Scraping

Scraping is powerful, but not always simple. Here are the core challenges:

- Anti-Bot Systems

Modern sites use aggressive blocking mechanisms.

- Changing Website Structures

HTML changes break scrapers.

- Data Quality Issues

Missing fields, inconsistent formatting, duplicates.

- Scalability

Collecting small datasets is easy; millions of rows require strategy.
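One way to soften the impact of changing website structures is to try several selectors in order and record which one matched. The sketch below is only an illustration: the selector paths and the two layouts are invented, and it uses the standard library's xml.etree.ElementTree, which requires well-formed markup.

```python
import xml.etree.ElementTree as ET

# Ordered from most to least preferred; all paths are hypothetical.
PRICE_SELECTORS = [
    ".//span[@class='price']",
    ".//span[@data-price]",
    ".//div[@class='product-price']",
]

def first_match(root, selectors):
    """Return (selector, text) for the first selector that finds an element with text."""
    for selector in selectors:
        element = root.find(selector)
        if element is not None and element.text:
            return selector, element.text.strip()
    return None, None

# The same product before and after a hypothetical redesign.
old_layout = ET.fromstring("<div><span class='price'>19.99</span></div>")
new_layout = ET.fromstring("<div><div class='product-price'>19.99</div></div>")

old_result = first_match(old_layout, PRICE_SELECTORS)
new_result = first_match(new_layout, PRICE_SELECTORS)
print(old_result)
print(new_result)
```

Logging which selector matched makes it easier to notice when a page has quietly moved to a fallback.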

👉 For deeper explanations, read “10 Myths About Web Scraping”.

Best Practices for Effective Web Scraping

These are practical, universal, non-tool-specific guidelines.

- Start with the smallest possible dataset

Validate structure early.

- Build in resilience

Use XPaths/CSS selectors that don’t break.

- Clean data upstream

Fix formatting at the extraction point.

- Control your request rate

Respect server load to avoid blocks.

- Automate the pipeline

Use scheduling, notifications, and auto-renew workflows.
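Controlling the request rate can be as simple as enforcing a minimum gap between requests. The Throttle class below is a hypothetical helper, not part of any library. The clock and sleep functions are injectable so the behavior can be checked without real waiting, and the interval used here is kept tiny for demonstration.

```python
import time

class Throttle:
    """Enforce a minimum interval (in seconds) between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now

# Usage: call wait() before each request. URLs are placeholders.
throttle = Throttle(min_interval=0.01)
for url in ["https://example.com/1", "https://example.com/2"]:
    throttle.wait()
    # a real scraper would fetch the URL here
```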

What Octoparse Learned From 10+ Years of Scraping

What Are The Most Scraped Data Types

Top categories consistently include:

- Product listings (e-commerce)

- Reviews (Amazon, Yelp, hotel platforms)

- Job postings

- Real estate listings

- Supplier & business directories

- Academic / research metadata

These six categories represent roughly 68–74% of recurring extractions.

You may also be interested in:

1. How to Scrape eBay Listings Without Coding

2. How to Scrape Google Shopping for Price and Product Data

3. No-Coding Steps to Scrape Amazon Product Data

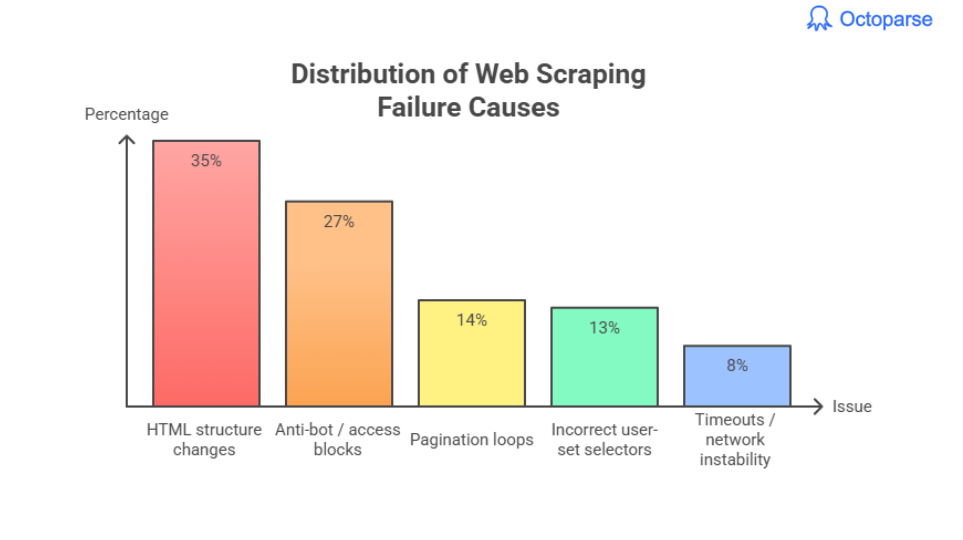

What Are The Biggest Web Scraping Failure Causes

Across all recorded errors:

- HTML structure changes → ~35%

- Anti-bot / access blocks → ~27%

- Pagination loops → ~14%

- Incorrect user-set selectors → ~13%

- Timeouts / network instability → ~8%

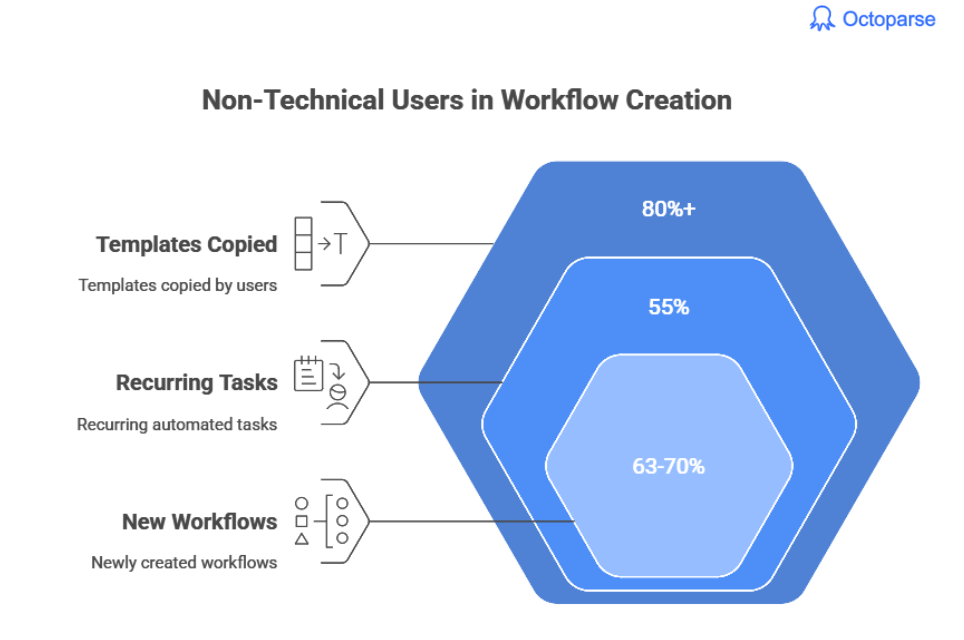
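Pagination loops, one of the failure causes listed above, are often avoidable by remembering which URLs have already been visited. The sketch below assumes a get_next_url function that returns the next page's URL or None; here it is simulated with a dictionary in which the last page links back to an earlier one.

```python
def crawl_pages(start_url, get_next_url, max_pages=1000):
    """Follow 'next page' links, stopping on a repeat, a dead end, or the page cap."""
    visited = []
    seen = set()
    url = start_url
    while url is not None and url not in seen and len(visited) < max_pages:
        seen.add(url)
        visited.append(url)
        url = get_next_url(url)
    return visited

# Hypothetical site where page 3 links back to page 2.
NEXT_LINKS = {"/p/1": "/p/2", "/p/2": "/p/3", "/p/3": "/p/2"}

pages = crawl_pages("/p/1", NEXT_LINKS.get)
print(pages)
```

The max_pages cap is a second safety net for sites that generate endless unique URLs.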

No-Code Scraping Has Overtaken Coding

In 2024–2025, non-technical users account for an estimated:

- 63–70% of all newly created workflows

- >55% of recurring automated tasks

- 80%+ of templates copied by users

This reflects a shift toward business teams owning their data pipelines.

Upstream Data Cleaning Cuts Work by ~40%

Teams that clean data during extraction reduce manual cleanup time dramatically.

Common high-value transformations:

- Price normalization

- Unit conversions

- Text stripping

- Category tagging

- Date formatting

Most users underestimate the value of extraction-level sanitation.
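A few of these transformations can be sketched as small functions applied to each field as it is extracted. The input formats below are assumptions: US-style prices with comma thousands separators and dates like "Mar 05, 2025". Real sources vary, so treat the patterns as starting points.

```python
import re
from datetime import datetime

def normalize_price(raw):
    """Turn strings like '$1,299.00' into a float; return None if no number is found."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    return float(match.group(0).replace(",", "")) if match else None

def normalize_date(raw, input_format="%b %d, %Y"):
    """Convert a date such as 'Mar 05, 2025' to ISO 8601 ('2025-03-05')."""
    return datetime.strptime(raw.strip(), input_format).date().isoformat()

def strip_text(raw):
    """Collapse runs of whitespace and trim both ends."""
    return " ".join(raw.split())

# Hypothetical raw record as it might come off a page.
raw_record = {"title": "  Blue \n  Kettle ", "price": "$1,299.00", "listed": " Mar 05, 2025 "}

clean_record = {
    "title": strip_text(raw_record["title"]),
    "price": normalize_price(raw_record["price"]),
    "listed": normalize_date(raw_record["listed"]),
}
print(clean_record)
```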

Building a Scraping Operation That Actually Works

A. Choose the Right Tool for the Job

When to use no-code:

- Business workflows

- Monitoring tasks

- Non-engineering teams

- Need for templates

- Quick turnarounds

When to use Python:

- Custom logic

- Complex anti-bot solutions

- Full control over pipeline

- Integrations with ML systems

When to go enterprise-level:

- 100k+ pages/day

- Global proxy rotation

- SLA requirements

- Data engineering oversight

B. Evaluate These 7 Capabilities

- Selector intelligence

Auto-detection should handle most layouts.

- Anti-bot sophistication

Headless browsers, fingerprinting, dynamic pacing.

- Workflow monitoring

Alerts, logs, break detection.

- Pagination handling

True multi-pattern support.

- Data cleaning features

Normalize at extraction.

- Scheduling & automation

Hourly/daily/weekly pipelines.

- Scalability

Ability to run multiple tasks concurrently without IP blocks.

C. Build a Durable Data Pipeline

A real web scraping workflow looks like this:

- Discovery

Identify URLs / categories / pages.

- Extraction

Scraper logic, selectors, browser environment.

- Verification

Spot-check 20–50 rows before mass extraction.

- Cleaning

Normalization, categorization, deduping.

- Storage

CSV → Excel → DB → API → BI tool.

- Automation

Scheduling, notifications, retries.

- Maintenance

Fixing breakages, updating workflows, evolving selectors.

This is where the ROI is — not in the first 5 minutes of building a scraper, but in the months of reliable reuse.
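The verification step in the workflow above lends itself to a small automated check. The sketch below inspects the first few rows of a result set and reports which required fields are empty. The field names, sample rows, and default sample size are assumptions chosen for illustration.

```python
def spot_check(rows, required_fields, sample_size=20):
    """Check the first sample_size rows; return (row_index, missing_fields) pairs."""
    problems = []
    for index, row in enumerate(rows[:sample_size]):
        missing = []
        for field in required_fields:
            value = row.get(field)
            # A field counts as missing when absent, None, or blank text.
            if value is None or str(value).strip() == "":
                missing.append(field)
        if missing:
            problems.append((index, missing))
    return problems

# Hypothetical extraction results with two defective rows.
sample_rows = [
    {"title": "Blue Kettle", "price": "19.99"},
    {"title": "", "price": "34.50"},
    {"title": "Green Mug"},
]

issues = spot_check(sample_rows, required_fields=["title", "price"])
print(issues)
```

Running a check like this before a full extraction helps catch a broken selector while only a handful of rows are affected.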

What to Read Next

Choose your path:

If you’re new to scraping →

👉 “10 Myths About Web Scraping”

👉 Beginner tutorials

If you’re comparing tools →

👉 “Free Web Scrapers You Can’t Miss”

If you’re scraping for a business workflow →

👉 Lead generation scraping workflows

👉 How to scrape e-commerce data

If you need operational reliability →