There are many web scraping tools available, including well-known Python libraries such as Beautiful Soup, lxml, and parsel. Most data scientists and developers, however, are likely to name Scrapy as the most popular of them all.

Read on to learn more about web scraping with Scrapy, its alternatives, and which tool to use to extract data based on your needs and skills.

What is Scrapy Web Scraping

Scrapy is a fast, high-level web crawling and scraping framework for Python. It’s open source and lets you crawl websites quickly and simply. You can also download, parse, and store the scraped data on your own devices.

Scrapy sends requests asynchronously, which means it can fetch several pages concurrently instead of waiting for each one to finish. It also offers CSS and XPath selectors for picking out the data you want to extract. According to the Scrapy tutorial, you can build a spider yourself in 5 simple steps.

Pros and Cons of Scrapy

Scrapy is well regarded by many users, who consider it one of the best web crawlers thanks to its all-in-one framework, its flexible extensions, and its ability to post-process data.

All-in-one framework

If you google “web scraping Python”, you’ll get a long list of results. Compared with Python libraries such as Beautiful Soup, Scrapy provides a complete framework for data extraction. You can get data without using any additional apps, programs, or parsers. Thus, scraping data with Scrapy can be less time-consuming and more convenient. In contrast, with most Python libraries for data collection, you also need a separate HTTP library, such as Requests, to download the pages.

Flexibility in extensions

Scrapy is easily extensible: you can plug in new functionality without having to touch the core. It ships with some built-in extensions for general purposes. For example, the memory usage extension can send you a notification e-mail when the scraper’s memory usage exceeds a certain value. Additionally, the close spider extension closes the spider automatically when certain conditions are met. These extensions can make your data extraction more efficient. Of course, if you want, you can write your own extension for particular needs.
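Both built-in extensions are controlled through project settings. The fragment below is a sketch of what a `settings.py` might contain; the setting names are Scrapy's, but every value (limits, counts, the e-mail address) is an assumption for illustration.

```python
# settings.py (fragment) -- all values below are illustrative assumptions.

# Memory usage extension: warn by e-mail at 512 MB, shut down at 1024 MB.
MEMUSAGE_ENABLED = True
MEMUSAGE_WARNING_MB = 512
MEMUSAGE_LIMIT_MB = 1024
# Hypothetical address; sending mail also requires Scrapy's mail settings.
MEMUSAGE_NOTIFY_MAIL = ["ops@example.com"]

# Close spider extension: stop when any of these conditions is met.
CLOSESPIDER_TIMEOUT = 3600      # seconds the spider may stay open
CLOSESPIDER_ITEMCOUNT = 5000    # items scraped
CLOSESPIDER_PAGECOUNT = 1000    # responses crawled
CLOSESPIDER_ERRORCOUNT = 10     # errors raised
```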

Data post-processing

When we visit a website, we often find more information than we need. The same issue arises when scraping data with Python: you can extract almost any data from a page, but the resulting data file may end up cluttered. Scrapy offers an option to activate plugins that post-process scraped data before it is exported to a data file. For instance, if the scraped values contain stray commas, you can clean them up and write the data in your preferred format.
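One common place for this kind of cleanup in Scrapy is an item pipeline, which receives each scraped item before it is exported. The sketch below is not the only mechanism Scrapy offers. It assumes items are plain dicts with a hypothetical `price` field, and it uses the classic `process_item(self, item, spider)` signature.

```python
class StripCommasPipeline:
    """Hypothetical item pipeline that removes commas from a 'price' field."""

    def process_item(self, item, spider):
        price = item.get("price")
        # Only touch string values, e.g. "1,299" becomes "1299".
        if isinstance(price, str):
            item["price"] = price.replace(",", "")
        # Return the item so later pipelines and the exporter receive it.
        return item
```

A pipeline like this is switched on through the `ITEM_PIPELINES` setting, for example `{"myproject.pipelines.StripCommasPipeline": 300}`, where the module path is an assumed project layout.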

However, everything comes with pros and cons. Scrapy shows its strengths as an all-in-one framework, but we cannot ignore that it is still a “high-level” tool, which means it is not that beginner-friendly. For example, its installation process can be complicated, and its documentation offers beginners only light guidance. Learning and using it could be a big challenge if you have no prior knowledge of Python or data extraction.

Scrapy Alternatives in Python

Beautiful Soup

Beautiful Soup is one of the most widely used Python libraries for web scraping. With it, you can pull elements such as a list of images or videos out of a web page. Compared with Scrapy, Beautiful Soup is simpler to use and understand, and it offers helpful documentation for independent learning. But it is only a parsing library with less functionality, so it is best suited to simple projects. You’ll need other libraries and dependencies if you wish to build a complete web scraper with it.
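As a small illustration, the sketch below collects image URLs from a page with Beautiful Soup. The HTML is an inline sample made up for this example; in a real scraper you would first download the page with a separate HTTP library.

```python
from bs4 import BeautifulSoup

# Made-up sample markup standing in for a downloaded page.
page_source = """
<html><body>
  <img src="/images/cat.png" alt="A cat">
  <img src="/images/dog.png" alt="A dog">
  <img alt="Missing source">
</body></html>
"""

# "html.parser" is Python's built-in parser, so no extra parser is needed.
soup = BeautifulSoup(page_source, "html.parser")

# src=True keeps only <img> tags that actually have a src attribute.
image_urls = [img["src"] for img in soup.find_all("img", src=True)]
print(image_urls)
```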

lxml

Many developers prefer to build their own parsers tailored to their objectives. For them, lxml, one of the fastest and most feature-rich Python libraries for processing XML and HTML, is a good option. It parses large documents quickly and offers CSS and XPath selectors to locate specific elements within HTML text. With lxml, you can also produce HTML documents and read existing ones. It stands out from many Python parsing libraries for its ease of programming and its performance. However, using it requires coding knowledge and experience.
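The sketch below shows both sides of lxml mentioned above: reading an existing HTML document with XPath, then modifying it and serializing it back to markup. The sample HTML, ids, and class names are assumptions made up for the example.

```python
from lxml import etree, html

# Made-up sample markup standing in for a downloaded page.
page_source = """
<html><body>
  <ul id="products">
    <li class="item">Keyboard</li>
    <li class="item">Mouse</li>
  </ul>
</body></html>
"""

# Read the existing document and locate elements with XPath.
doc = html.fromstring(page_source)
names = doc.xpath("//ul[@id='products']/li[@class='item']/text()")

# Produce new markup: append another list item to the same list.
product_list = doc.xpath("//ul[@id='products']")[0]
new_item = etree.SubElement(product_list, "li")
new_item.set("class", "item")
new_item.text = "Monitor"

# Serialize the modified tree back to an HTML string.
updated_markup = html.tostring(doc, encoding="unicode")
```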

urllib3

With over 70,000,000 downloads on PyPI per week, urllib3 is a popular Python HTTP client that is often used in web scraping. It’s similar to the Requests library and offers a simple interface for retrieving URLs. You can use it to send GET and POST requests and fetch the pages you want to parse. Like the tools mentioned above, urllib3 requires coding skills and has limited features, so it can be challenging for users without coding experience.
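A minimal sketch of fetching a page with urllib3 could look like this. The function name `fetch_text`, the 10-second timeout, and the choice to raise on any non-200 status are assumptions for illustration, not part of urllib3 itself.

```python
import urllib3


def fetch_text(url, timeout=10.0):
    """Send a GET request and return the response body as text."""
    # PoolManager handles connection pooling for all hosts.
    http = urllib3.PoolManager()
    response = http.request("GET", url, timeout=timeout)
    if response.status != 200:
        raise RuntimeError(f"Unexpected HTTP status {response.status} for {url}")
    # response.data holds the raw bytes; decode them for parsing.
    return response.data.decode("utf-8", errors="replace")
```

Calling `fetch_text("https://example.com")` would return the page's HTML as a string, which you could then hand to a parser such as Beautiful Soup or lxml.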

Read this article about the top 10 open-source data scraping tools to find more alternatives to Scrapy.

Easy Scrapy Alternative to Scrape Any Websites Without Coding

Whether you’re tired of dealing with Python or simply need an easy-to-use yet effective web scraping tool, Octoparse should be a good option. Octoparse is a web scraping tool that requires no coding. You can extract data with several clicks by following the steps below.

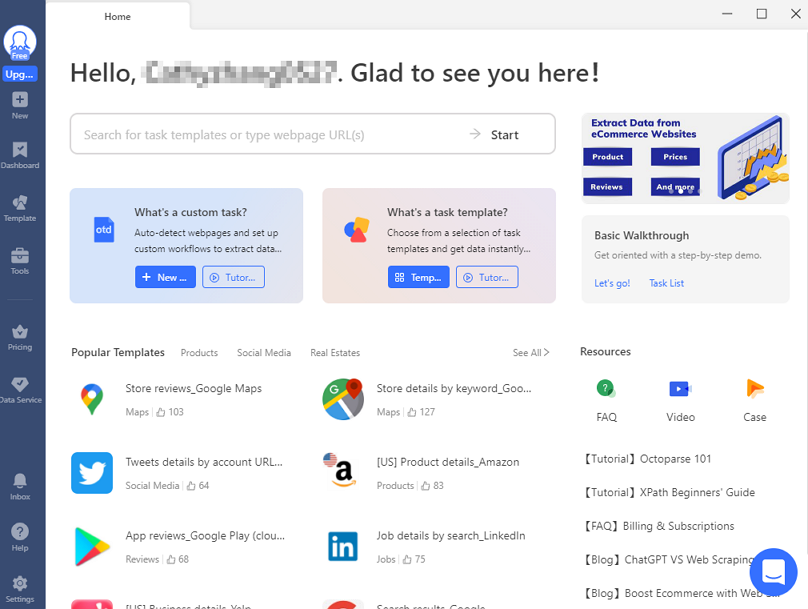

Before starting your Octoparse data-scraping journey, download and install Octoparse on your device. When you open the software for the first time, sign up for a free account and log in.

Step 1: Create a new task

Enter or paste the URL of the page you want to scrape into the search bar in Octoparse. Next, click “Start” to create a new task and let the page load in Octoparse’s built-in browser.

Step 2: Select data with auto-detection

Wait until the page has finished loading, and click “Auto-detect webpage data” in the Tips panel. After scanning the entire page, Octoparse will present a list of possibilities for data fields that can be extracted. These fields will be highlighted on the page. You can also preview them at the bottom.

During this step, Octoparse may occasionally guess wrong, so it provides several other ways to get the data you want. For instance, you can select data manually by clicking it on the page, or you can use XPath to locate it.

Step 3: Build the workflow

Click “Create workflow” once all desired data fields have been chosen. A workflow outlining every stage of the scraper will then show up on the right-hand side. You can click each step to preview its action and check that it works properly.

Step 4: Run the task and export the data

Double-check all the details (including the selected data fields and the workflow), then click “Run” to launch the scraper. At this step, you need to choose whether to run the task on your local device or on the cloud servers. Run it in the cloud if you’re processing a big project; running on your device is more suitable for quick runs. After the extraction process is complete, you can export the data as an Excel, CSV, or JSON file.

Wrap-up

Python is widely used among developers for scraping data. Frameworks like Scrapy and libraries like Beautiful Soup are useful for data extraction. They are powerful but, in most situations, not beginner-friendly. By contrast, Octoparse doesn’t require any code but still offers powerful functionality for data scraping.