Amazon has massive product data ranging from pricing and availability to product details and customer reviews. Access to this rich information could help businesses gain a competitive edge.

That’s why Amazon data scraping has become an increasingly common practice for online shop owners to gather useful information from competitors and customers.

However, scraping data from large platforms like Amazon comes with some legal and technical challenges. Amazon also applies diverse anti-scraping measures to monitor and block IP addresses engaged in web scraping.

In this article, we’ll discuss whether it is legal to scrape Amazon data, the anti-scraping measures Amazon uses, and tips to avoid getting blocked while scraping Amazon data.

Is It Legal to Scrape Amazon Data

There is no definitive answer on whether scraping Amazon data is legal. Its legality depends on several factors: what data you scrape, how you scrape it, how you use the scraped data, Amazon’s Terms of Use, and applicable laws.

What Data Do You Scrape

Generally, scraping public product information such as titles, descriptions, prices, and ratings represents the lowest-risk category for Amazon web scraping, whereas scraping private account data raises privacy concerns.

In addition, scraping reviews or other user-generated content might raise additional copyright issues. Login-protected content or personal user data represents the highest-risk category for Amazon scraping activities. This encompasses personal account details, purchase history, wish lists, and any information requiring user credentials to access.

If you need a walkthrough, you can learn how to scrape Amazon product data and build a price tracker with an easy-to-use web scraper.

How Do You Scrape Data

Using automated bots or scripts to pull large amounts of data rapidly can strain Amazon’s servers and may be seen as a violation of its Terms of Service.

To avoid this and remain legally defensible, the ideal strategy for scraping Amazon data is to minimize server load and throttle scrape requests.

Ethical Amazon scraping practices include implementing appropriate delays between requests, using reasonable request frequencies, and avoiding patterns that could degrade Amazon’s service quality for legitimate users. Such practices show good-faith efforts to minimize impact while collecting necessary data.
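To make the idea of delays between requests concrete, here is a minimal Python sketch of a throttle that enforces a minimum, slightly randomized gap between consecutive requests. The class name `PoliteThrottle` and the default values (a 2-second gap with up to 1 second of jitter) are assumptions chosen for illustration, not figures recommended by Amazon or any tool.

```python
import random
import time


class PoliteThrottle:
    """Enforces a minimum, slightly randomized gap between consecutive requests."""

    def __init__(self, min_delay=2.0, jitter=1.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay  # assumed baseline gap, in seconds
        self.jitter = jitter        # extra random wait of up to this many seconds
        self._clock = clock         # injectable so the logic can be tested offline
        self._sleep = sleep
        self._last = None           # time at which the previous request was released

    def wait(self):
        """Sleep until the gap since the previous call has passed; return seconds slept."""
        target_gap = self.min_delay + random.uniform(0, self.jitter)
        slept = 0.0
        if self._last is not None:
            elapsed = self._clock() - self._last
            if elapsed < target_gap:
                slept = target_gap - elapsed
                self._sleep(slept)
        self._last = self._clock()
        return slept
```

A scraper would create one `PoliteThrottle` and call `wait()` immediately before each request, so that the pace stays bounded no matter how fast the rest of the script runs.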

How to Use the Scraped Amazon Data

Many platforms state in their terms of use that platform data cannot be used for commercial purposes. That said, using the data for market research, sentiment analysis, or competitor analysis is more likely to be considered “fair use”.

The purpose and handling of scraped data significantly impact both the legality of web scraping and potential liability exposure.

Using scraped Amazon product data for competitive analysis, price monitoring, or research may be viewed differently than using it to create competing services or violate intellectual property rights.

Amazon’s Terms of Service: The First Legal Barrier

Amazon’s Terms of Service explicitly prohibit automated data collection through scraping. While violating these terms is typically a civil matter rather than criminal activity, it can result in account termination, IP blocking, and potential legal action.

Applicable Laws

Laws regarding web scraping, data ownership, and copyright vary by jurisdiction. Thus, it is important to learn about applicable laws and similar cases to understand the guidelines on the scraping and usage of data.

Court decisions have increasingly clarified when scraping is illegal and when it falls within acceptable bounds. The Electronic Frontier Foundation argues that “scraping is just automated access, and everyone does it” and that automated data collection from public websites should generally be protected when it doesn’t circumvent technological barriers or access controls.

Legal experts also note that scraping may qualify as fair use when used for analytical purposes, indexing, or creating new knowledge about datasets.

Based on legal precedents, scraping Amazon product data that’s publicly visible (prices, descriptions, reviews) carries different legal risks than accessing login-protected information or user account data.

How Amazon Protects Against Web Scraping

Even though there is no clear guidance about scraping Amazon data from laws or the courts, Amazon has taken a cautious approach and restricted web scraping on its sites.

It has implemented various technical measures to detect and prevent unauthorized web scraping on its websites, which leads to some problems you might face while extracting data.

Amazon employs advanced detection algorithms that monitor request patterns, user agent strings, session behaviors, and access frequencies to identify potential scraping activity.

When detected, these systems can implement immediate countermeasures including temporary or permanent IP blocking, account termination, and legal notifications.

CAPTCHA challenges

On most websites, the CAPTCHA challenge serves as a simple yet effective “Turing test”. As well as distinguishing humans from bots, it can also help reduce load and conserve server resources.

Amazon sometimes presents CAPTCHA challenges to detect whether a request is coming from an automated bot rather than a human. Scrapers that cannot solve CAPTCHAs will be blocked and are unlikely to collect product data on Amazon.

Tips to solve Amazon CAPTCHA while scraping.

Rate limiting

Rate limiting is a technique to control the number of requests that a website or API allows from a single client, such as an IP address or user account.

Amazon might employ rate limiting at the IP address level by monitoring the number of requests coming from individual IP addresses and blocking IPs that make an abnormal volume of requests, or at the user level by rate limiting the number of API calls or page views associated with individual user accounts.
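As an illustration of how per-client rate limiting can work in general, the sketch below implements a simple sliding-window limiter keyed by client (an IP address or account ID). This is a generic textbook technique, not a description of Amazon’s actual system; the class name `SlidingWindowLimiter` and the limit and window values are hypothetical.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allows at most `limit` requests per client within any `window` seconds."""

    def __init__(self, limit, window):
        self.limit = limit
        self.window = window
        self._hits = defaultdict(deque)  # client key -> timestamps of accepted requests

    def allow(self, client, now):
        """Return True and record the request if the client is under the limit."""
        hits = self._hits[client]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # over the limit: a server would reject or challenge here
        hits.append(now)
        return True


limiter = SlidingWindowLimiter(limit=3, window=10)
# Four requests from one (placeholder) IP within a few seconds: the fourth is refused.
results = [limiter.allow("203.0.113.7", t) for t in (0, 1, 2, 3)]
```

Because each client has its own queue of timestamps, one client exceeding the limit does not affect another, which mirrors the IP-level and account-level limiting described above.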

IP Address Blocking and the Role of Amazon’s Robots.txt File

Amazon uses IP address blocking as a last resort. It may permanently block the IP addresses of scrapers that persist after other measures have been applied.

Besides these measures, other techniques such as the robots.txt file and browser fingerprinting are also used to deter web scraping.

In practice, Amazon might combine several of these measures to detect and block bots more effectively, which makes scraping Amazon data more challenging for most people.
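A crawler can check a site’s robots.txt rules before requesting a page. The following sketch uses Python’s standard `urllib.robotparser` module. The robots.txt content, the `example.com` URLs, and the `my-crawler` user agent are made up for illustration and do not reflect Amazon’s actual file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only (not Amazon's real file).
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)
product_allowed = parser.can_fetch("my-crawler", "https://www.example.com/products/123")
private_allowed = parser.can_fetch("my-crawler", "https://www.example.com/private/orders")
```

Here `product_allowed` is `True` and `private_allowed` is `False`. For a live site, `RobotFileParser.set_url()` followed by `read()` would fetch the real file instead of parsing a string.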

Use Amazon API to Scrape Data

Amazon’s official APIs are among the recommended tools for gathering and interacting with Amazon data at low legal risk. Amazon offers a suite of APIs for developers to access its products and services.

If you are familiar with coding or webpage development, you can consider applying APIs like Product Advertising API and Product Search API to your business.

You can build a Product Advertising API application to access a lot of the data used by Amazon, including items, customer reviews, seller reviews, etc., and most of the functionality on Amazon, such as finding products.

With this API, you can take advantage of Amazon data and realize financial gains. More importantly, Product Advertising API is free.

Product Search API is another application that can get data about products available to Amazon Business customers. The information it can access includes the product title, the merchant selling the product, and the current price.

Using Amazon APIs is a safe way to avoid getting blocked, but it requires coding knowledge and skills. For people with no programming skills, no-code web scraping tools are more accessible and easier to use.

There are many tools that have upgraded their features to avoid being blocked. I’ll explain it further in the next part.

How to Avoid Getting Blocked When Scraping Amazon

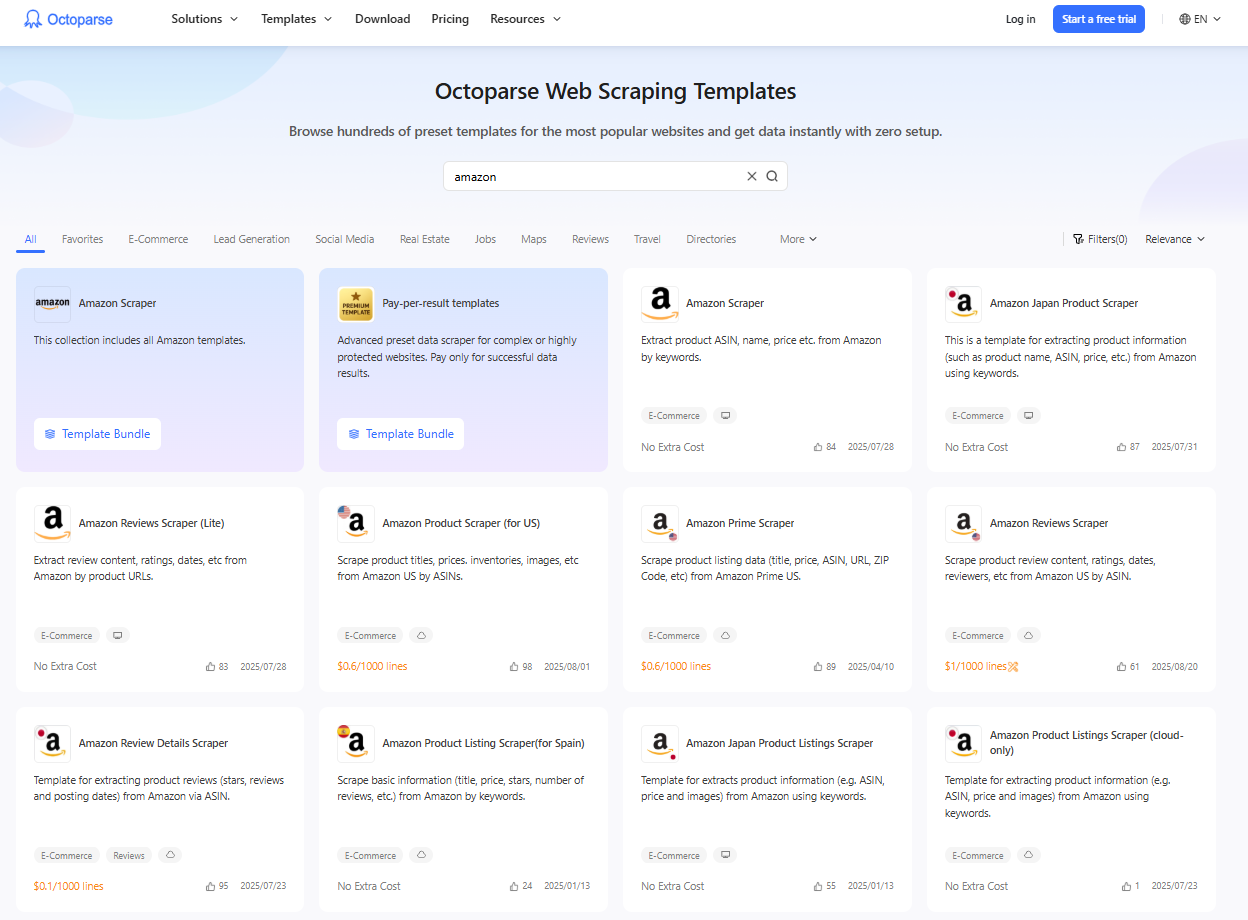

As mentioned above, Amazon applies diverse anti-scraping techniques. A web scraping tool aims to improve the efficiency of Amazon scraping, but it needs to handle these problems. Take Octoparse as an example: it is a no-code web scraping tool that lets anyone build an Amazon scraper without getting blocked by Amazon.

Turn website data into structured Excel, CSV, Google Sheets, and your database directly.

Scrape data easily with auto-detecting functions, no coding skills are required.

Preset scraping templates for hot websites to get data in clicks.

Never get blocked with IP proxies and advanced API.

Cloud service to schedule data scraping at any time you want.

Non-coders can also choose the Amazon scraping templates provided by Octoparse, which cover product listings, reviews, prices, and other data.

With these templates, you can avoid the anti-scraping problems and extract data within a few clicks. Visit Octoparse online scraping templates, or search the keyword Amazon after you have installed Octoparse on your device.

Resolve CAPTCHA

Modern CAPTCHAs fall into four main categories: text-based, image-based, audio-based, and No CAPTCHA reCAPTCHA. Octoparse can currently handle some CAPTCHAs automatically: reCAPTCHA v2 and Image CAPTCHA.

reCAPTCHA v2 asks users to select “I am human” or “I’m not a robot.” It may also ask users to answer some simple questions while visiting the platform. When you build a scraper with Octoparse, you can add a “Solve CAPTCHA” step to the workflow and select reCAPTCHA v2 as the CAPTCHA type. Octoparse will then handle the CAPTCHA and scrape data without interruption after the scraper launches.

Compared with reCAPTCHA v2, solving Image CAPTCHA is a bit more complicated because it can use known words or phrases or random combinations of digits and letters. There is no single, consistent solution for this kind of CAPTCHA. In Octoparse, the scraper is trained to solve this kind of CAPTCHA through failed solving attempts.

Use IP rotation

Amazon applies high-security measures to recognize and block web scrapers. If you have not done web scraping responsibly on Amazon, your IP addresses might be blocked, leading to failure to collect information. To reduce the chance of being blocked, you can use the anti-blocking solutions on Octoparse to modify your Amazon scrapers.

For instance, you can set up IP proxies manually in Octoparse. Octoparse provides residential IPs that can work better at avoiding blocks, or you can configure your own IP proxies in Octoparse. Both methods can help your scrapers get past anti-scraping techniques to some extent.
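For readers who write their own scripts, the general idea of IP rotation can be sketched in a few lines of Python. This is not how Octoparse implements it. The `PROXIES` list below uses placeholder addresses from a documentation-only IP range, and `proxy_rotation` is a hypothetical helper name.

```python
from itertools import cycle

# Placeholder proxy addresses (documentation-only range); replace with real ones.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def proxy_rotation(proxies):
    """Yield a proxy mapping for each request, cycling through the pool in order."""
    for address in cycle(proxies):
        yield {"http": address, "https": address}


rotation = proxy_rotation(PROXIES)
first_four = [next(rotation)["http"] for _ in range(4)]
# The fourth pick wraps around to the first proxy in the pool.
```

Each yielded mapping has the protocol-to-URL shape that `urllib.request.ProxyHandler` accepts, so it can be passed to an opener for the next request.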

Besides CAPTCHA and IP blocking, you may also encounter other anti-scraping techniques depending on the situation. You can try the user agent settings and auto-clear cookies features on Octoparse to optimize the sustainability of your scrapers.
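Outside of Octoparse, the equivalent of a user agent setting and cleared cookies can be sketched with Python’s standard library. The helper name `fresh_opener` and the example user agent string are assumptions for illustration only.

```python
import urllib.request
from http.cookiejar import CookieJar


def fresh_opener(user_agent):
    """Build an opener with an empty cookie jar and the given User-Agent header."""
    jar = CookieJar()  # starts empty, so no cookies carry over from earlier sessions
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    opener.addheaders = [("User-Agent", user_agent)]
    return opener, jar


# Hypothetical user agent string that identifies the script.
opener, jar = fresh_opener("example-research-bot/1.0")
```

Calling `jar.clear()` between batches of requests discards any cookies collected so far, which is roughly what an auto-clear cookies feature does.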

Conclusion

There are many legal considerations about scraping Amazon data and using the data. Amazon’s anti-scraping techniques also make scraping Amazon data more challenging. To ensure the legitimacy and sustainability of Amazon scrapers, you should take all these factors into account.

Amazon’s official APIs can be a good choice, while building an Amazon scraper with Octoparse is effortless. It also provides anti-blocking solutions that anyone can use with zero coding skills to make an effective and sustainable Amazon scraper. Try Octoparse now. More solutions for web scraping are here for you.

FAQs About Amazon Scraping Legality

- What specific parts of Amazon’s data can I legally scrape?

Publicly accessible product information such as titles, prices, descriptions, and reviews presents lower legal risks compared to protected content, though all Amazon scraping activities potentially violate the terms of service. The key distinction lies between data available to anonymous visitors versus content requiring authentication or containing personal information.

- How does Amazon’s robots.txt influence scraping restrictions?

Amazon’s robots.txt file serves as a formal declaration of the company’s preferences regarding automated access, and while not legally binding, it provides clear evidence of Amazon’s stance against unauthorized scraping activities. Violating robots.txt directives can strengthen Amazon’s legal position if disputes arise and may trigger more aggressive anti-scraping measures.

- Can I scrape Amazon if I avoid login-protected content?

Limiting Amazon scraping activities to public data reduces certain legal risks, particularly under the Computer Fraud and Abuse Act, but doesn’t eliminate potential civil liability for terms of service violations. Amazon’s policies prohibit automated data collection regardless of data accessibility, meaning even public data scraping violates contractual terms.

- What are the legal risks of violating Amazon’s terms of service?

Violating Amazon’s terms of service primarily creates civil liability exposure, including potential lawsuits for breach of contract, damages claims for harm to Amazon’s business interests, and injunctive relief to stop scraping activities.

Additional consequences may include account termination, IP blocking, and legal costs associated with defending against Amazon’s enforcement actions.

- How can I ensure my scraping practices are ethical and compliant?

Developing ethical Amazon scraping practices requires implementing technical safeguards (reasonable request rates, proper user agents), legal safeguards (attorney consultation, compliance documentation), and exploring official alternatives like Amazon API options before resorting to web scraping. Prioritize transparency in data usage, minimize server impact, and maintain clear business justifications for data collection activities.

Still curious about web scraping? Here are more resources you may find useful:

- Is Web Scraping Legal in Some Countries?

- Is Scraping Yelp Legal?

- Is Web Crawling Legal? Well, It Depends

- Use Proxies to Bypass CAPTCHA

- How to Solve CAPTCHA While Web Scraping

- How to Bypass Cloudflare CAPTCHA

- How to Bypass reCAPTCHA for Web Scraping

- Proxy Server for Web Scraping

- How Do Proxies Prevent IP Bans in Web Scraping

- Best Web Scraping Proxy Service Providers