With more and more information available on the web, it is nearly impossible for individuals to find what they need without some help sorting through the data. Google’s ranking systems are designed to do exactly that: sort through hundreds of billions of web pages in the search index to find the most relevant, useful results in a fraction of a second, and present them in a way that helps you find what you are looking for. These ranking systems are made up of not one, but a whole series of algorithms.

To give you the most useful information, search algorithms look at many factors, including the words of your query, the relevance and usability of pages, the expertise of sources, and your location and settings. The weight applied to each factor varies depending on the nature of your query. SEO (Search Engine Optimization) is the process of affecting the visibility of a website or web page in a search engine’s unpaid results. In other words, it is a free way to improve your Google ranking and attract more traffic.

SEO & Google Ranking Improvement

A study by Infront Webworks showed that the first page of Google receives 95% of web traffic, with subsequent pages receiving 5% or less of total traffic. So for most people, especially those starting a business on a limited budget, SEO is a good way to improve their Google ranking, make their websites visible, and attract more visitors at relatively little cost.

Contrary to what some ‘experts’ would have you believe, SEO does not have cut-and-dried rules. There is no plug-and-play method to SEO success. This is mostly because search engines are always updating their algorithms, and every new algorithm update brings new guidelines to follow if you want to rank high.

SEO covers many factors that affect Google ranking, such as:

- On-page factors: keyword in the title tag, keyword in the H1 tag, meta description, length of content, etc.

- Site factors: sitemap, domain trust, server location, etc.

- Off-page factors: the number of linking domains, the authority of the linking page, the authority of the linking domain, etc.

- Domain factors: domain registration length, domain history, etc.

Most of these factors can be researched for free with web scraping tools (see Top 30 Free Web Scraping Software for more information). With enough information, you can develop a better strategy to improve your Google ranking.
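If you want to check a few of the on-page factors above yourself, here is a minimal Python sketch that uses only the standard library. It assumes you already have a page’s HTML as a string (for example, saved by your scraper). The `OnPageParser` class and `check_on_page` function are hypothetical names of my own, and the check is deliberately rough: it only looks at the keyword in the title tag, the keyword in the H1 tag, whether a meta description exists, and a simple word count.

```python
from html.parser import HTMLParser


class OnPageParser(HTMLParser):
    """Collects <title> text, <h1> texts, the meta description, and visible words."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.h1s: list[str] = []
        self.description = ""
        self.words: list[str] = []
        self._in_title = False
        self._in_h1 = False
        self._skip = 0  # depth inside <script>/<style>, whose text is not content

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "h1":
            self._in_h1 = True
            self.h1s.append("")
        elif tag in ("script", "style"):
            self._skip += 1
        elif tag == "meta":
            a = dict(attrs)
            if (a.get("name") or "").lower() == "description":
                self.description = a.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag == "h1":
            self._in_h1 = False
        elif tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if self._skip:
            return
        if self._in_title:
            self.title += data  # title text is not counted as body content
            return
        if self._in_h1:
            self.h1s[-1] += data
        self.words.extend(data.split())


def check_on_page(html: str, keyword: str) -> dict:
    """Rough on-page checks for one keyword (case-insensitive substring match)."""
    parser = OnPageParser()
    parser.feed(html)
    parser.close()
    kw = keyword.lower()
    return {
        "keyword_in_title": kw in parser.title.lower(),
        "keyword_in_h1": any(kw in h.lower() for h in parser.h1s),
        "has_description": bool(parser.description.strip()),
        "word_count": len(parser.words),
    }


# Hypothetical sample page, not a real scraped result.
sample = """<html><head><title>Free Web Scraping Tool</title>
<meta name="description" content="Scrape data without code."></head>
<body><h1>A free web scraping tool</h1><p>Extract data in minutes.</p></body></html>"""

print(check_on_page(sample, "free web scraping tool"))
# → {'keyword_in_title': True, 'keyword_in_h1': True, 'has_description': True, 'word_count': 9}
```

A substring match is crude (it will not catch plurals or reordered words), but it is enough to spot pages where the target keyword is missing from the title or H1 altogether.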

In this post, I will focus on just three areas: keyword research, backlink research, and LCP improvement. If you don’t know where to start, they show a free and easy way to identify projected traffic and, ultimately, determine the value of that ranking.

Keyword Research

Keywords are the specific words or phrases that someone enters into a search box on a site like Google to find information.

To help your business show up higher in the search results, it is important to research and discover what your customers and prospects are searching for and then create content that targets those terms.

I bet you would say, “Oh, it’s easy. There are plenty of keyword research tools, such as Keyword Planner and BuzzSumo. They can all help me find the most valuable keywords to target with SEO.”

That’s true. But how can you judge the value of keywords? How do you know that you are getting the right kind of visitors?

The answer is to research your market’s keyword demand, predict shifts in demand, and produce content that web searchers are actively seeking. The tools mentioned above only show us the keywords that visitors often type into search engines. They cannot show us directly how valuable it is to receive traffic from those searches. To understand the value of a keyword, we need to understand our own websites, make some hypotheses, test, and repeat: the classic web marketing formula. Here is how it works.

Suppose you have already chosen some target keywords and produced content around them. Now you need to measure the effect: when searchers type these keywords into Google, do they find your website and visit it? That’s why you need to know your ranking first.

From the moment you start building a strategy, you need data on keywords, competitor rankings, consumer content preferences, link building, etc. When you start to implement your strategy, you need to collect data to measure whether your SEO efforts are yielding results. And if they aren’t working, you need data to find out why.

Let me take Octoparse as an example.

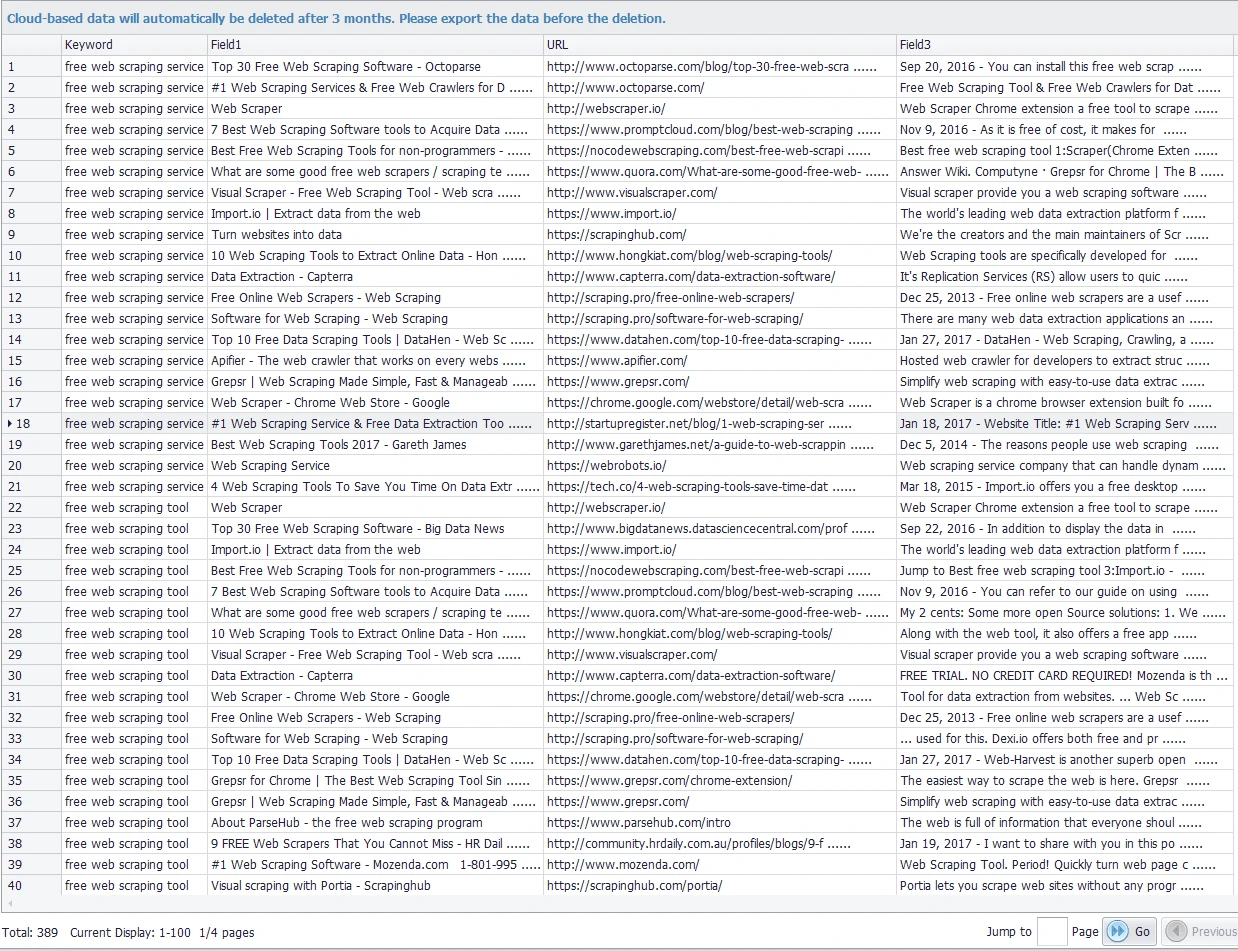

How can I find the ranking of the Octoparse domain for the two relevant keywords “free web scraping tool” and “free web scraping service”? And how can I get detailed information about the pages ranking above Octoparse, so that I better understand the value of these keywords?

The answer is a web scraping tool. One of the major benefits of web scraping is that it lets you collect data in large volumes. Although keyword data counts as small data, it is still large enough to give you quite a headache if you collect it manually.

With the web scraping tool Octoparse, you can easily scrape the search results for the keywords you care about. It also allows you to collect data in real time, which is essential for getting up-to-date keyword rankings. Datasets like the number of click-throughs and the volume of organic traffic tell you whether you are getting noticed on search engines, and these datasets are continually changing. Octoparse makes it possible to keep up with such fluctuating data.

Below is the result I got with Octoparse Cloud Service.

I exported the extracted data to Excel and analyzed it. Sadly, Octoparse’s domain did not appear anywhere in the results, even though Google Search Console shows that most visitors reach my website by searching these two keywords. Many people run into this problem without even realizing it. That’s why you need to check your ranking frequently and adjust your strategy accordingly if you want to improve your Google ranking.
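If your scraper exports results as CSV, a few lines of Python can do the “is my domain in there, and who ranks above me?” step instead of scanning Excel by eye. This is a sketch under assumptions: the export format with `keyword`, `position`, and `url` columns is hypothetical (adjust it to whatever your tool actually produces), `find_rank` and `outranking_domains` are names of my own, and the sample rows use placeholder example.* domains rather than real search results.

```python
import csv
import io
from urllib.parse import urlparse


def domain_of(url: str) -> str:
    """Lower-cased host name with a leading 'www.' removed."""
    host = (urlparse(url).hostname or "").lower()
    return host.removeprefix("www.")


def _matches(host: str, domain: str) -> bool:
    # Count subdomains (e.g. blog.example.com) as part of example.com.
    return host == domain or host.endswith("." + domain)


def find_rank(rows, keyword: str, domain: str):
    """Best (lowest) position of `domain` for `keyword`, or None if it is absent."""
    target = domain.lower().removeprefix("www.")
    positions = [
        int(r["position"])
        for r in rows
        if r["keyword"] == keyword and _matches(domain_of(r["url"]), target)
    ]
    return min(positions) if positions else None


def outranking_domains(rows, keyword: str, domain: str) -> list[str]:
    """Domains ranking above `domain` for `keyword` (every ranked domain if it is absent)."""
    mine = find_rank(rows, keyword, domain)
    hits = sorted((int(r["position"]), domain_of(r["url"])) for r in rows if r["keyword"] == keyword)
    return [d for pos, d in hits if mine is None or pos < mine]


# Hypothetical export, for illustration only.
SAMPLE = """keyword,position,url
free web scraping tool,1,https://www.example.org/tools
free web scraping tool,2,https://blog.example.com/scraping
free web scraping service,1,https://example.net/service
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE)))
print(find_rank(rows, "free web scraping tool", "example.com"))             # → 2
print(find_rank(rows, "free web scraping service", "example.com"))          # → None
print(outranking_domains(rows, "free web scraping tool", "example.com"))    # → ['example.org']
```

The `outranking_domains` list is the natural starting point for the next step: looking at those competitors one by one.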

In my case, I need to check the domains of the websites that rank above mine and try to figure out whether their PageRank is higher than mine. If it is, can I make my content higher quality? If not, what other factors could I optimize to improve the ranking?

This simple example shows that using a web scraping tool for SEO can give you valuable insight into the competition and into how hard it will be to rank for a given keyword.

Backlink Research

Another way to improve your search engine optimization is to create backlinks to your site. Imagine Google as the Internet’s polling station, counting the votes from all the links that it finds on the web. Unlike a typical democracy, where one person has one vote, Google gives more weight to votes from authoritative, relevant websites. That’s why those little blue links you see on almost every website tend to be among the biggest factors in Google’s rankings.
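To make the polling-station analogy concrete, here is a small sketch of the originally published PageRank idea, computed by power iteration: on each round, every page splits its current score evenly among the pages it links to, so a link from a highly ranked page is worth more than a link from an obscure one. This only illustrates the concept; Google’s current ranking systems use many more signals, and the four-page link graph below is made up.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85, iters: int = 50) -> dict[str, float]:
    """Classic PageRank by power iteration over a {page: [pages it links to]} graph."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with equal votes
    for _ in range(iters):
        # Every page keeps a small baseline share ("random jump").
        new = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page's vote is split evenly across its outgoing links.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # Dangling page with no links: spread its vote over all pages.
                share = damping * rank[page] / n
                for t in pages:
                    new[t] += share
        rank = new
    return rank


# Hypothetical link graph: a, b, and d all link to c; c links back to a.
LINKS = {"a": ["c"], "b": ["c"], "c": ["a"], "d": ["c"]}

for page, score in sorted(pagerank(LINKS).items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

Notice that page a ends up well above b and d even though it has only one incoming link, because that single link comes from c, the most “voted-for” page. That is the weighting the analogy describes.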

How do you create backlinks? One easy way is to take part in forums where people talk about your niche. When people ask questions about your industry, respond with solutions and subtly include a link to a relevant article. After explaining your answer, you can add something like “you can read more about it here: XYZ.xyz” in the same comment.

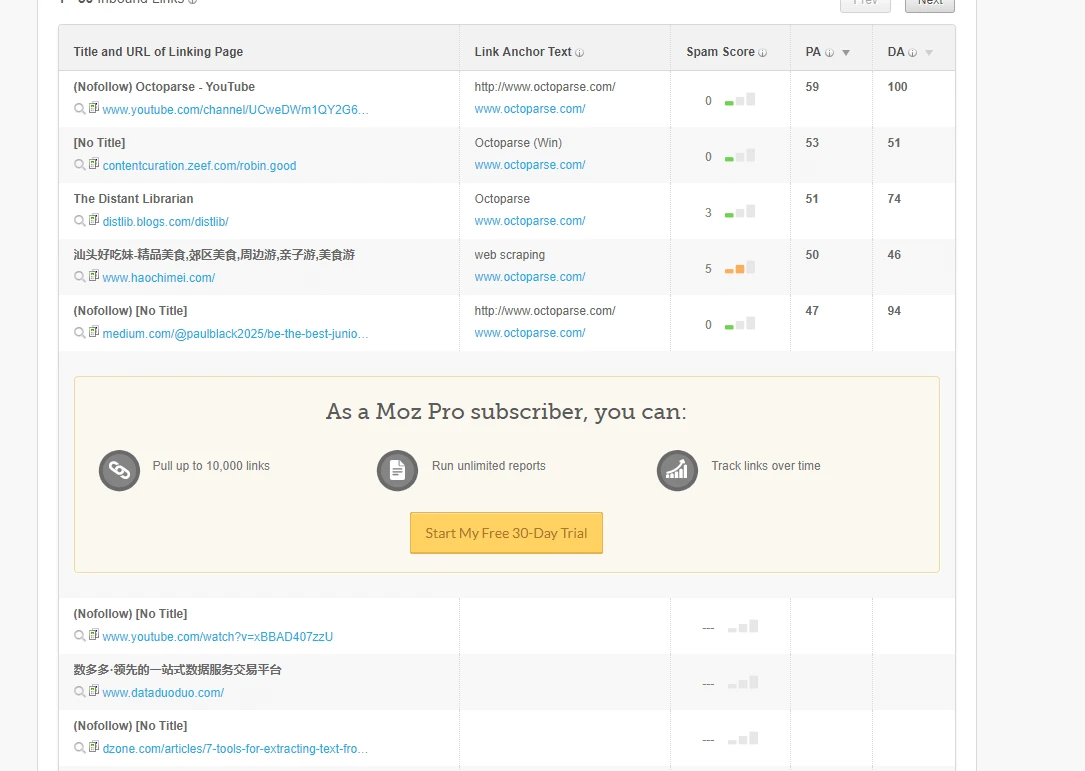

So how do you find these blue links? The most common way is to look up competitors’ backlinks with SEO tools like Open Site Explorer. Below are the backlinks to Octoparse I found in Open Site Explorer.

The problem is: how can I get this information without upgrading to a premium account? The answer is a free web scraping tool! With web scraping, you can discover all the forums where people discuss topics related to your field. Build a simple crawler, and the information publicly displayed on those pages can be extracted at no cost. Then you will know which sources to reach out to and can offer value in exchange for a solid link.
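For the “simple crawler” part, the core step is pulling the links out of a page you have already downloaded and keeping the ones that point to the domain you care about. This is a minimal standard-library sketch, assuming you pass in the page HTML and its URL yourself (fetching and crawling politely are left out). `links_to_domain` is a hypothetical helper of my own; it also reports whether each link is marked `rel="nofollow"`, since that is worth knowing when you judge a forum link.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects (absolute_url, is_nofollow) for every <a href> on a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[tuple[str, bool]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        a = dict(attrs)
        href = a.get("href")
        if not href:
            return
        rel_values = (a.get("rel") or "").lower().split()
        # Resolve relative links like "/thread/42" against the page URL.
        self.links.append((urljoin(self.base_url, href), "nofollow" in rel_values))


def links_to_domain(html: str, page_url: str, domain: str) -> list[tuple[str, bool]]:
    """Links on the page whose host is `domain` or one of its subdomains."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    collector.close()
    domain = domain.lower()
    result = []
    for url, nofollow in collector.links:
        host = (urlparse(url).hostname or "").lower()
        if host == domain or host.endswith("." + domain):
            result.append((url, nofollow))
    return result


# Hypothetical forum post, for illustration only.
page = '''<div class="post"><p>Try <a href="https://www.example.com/guide" rel="nofollow ugc">this guide</a>
or <a href="/thread/42">the old thread</a>.</p></div>'''

print(links_to_domain(page, "https://forum.example.org/t/1", "example.com"))
# → [('https://www.example.com/guide', True)]
```

Run this over each forum page your crawler collects, with a competitor’s domain as `domain`, and you get a list of the threads where that competitor is already being linked.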

LCP Improvement

Largest Contentful Paint (LCP) is a Google user experience metric that became a ranking signal in 2021 as part of the Page Experience Update. LCP is one of the three Core Web Vitals metrics, measuring how long it takes for the largest element in the viewport to become visible. Until the LCP element loads, the main content of the page is not yet visible, because the LCP element is by definition within the initial viewport, that is, above the fold.

The elements considered for LCP are usually an image, a video (its poster image or first frame), an element with a CSS background image, or a block of text. Think about it: if the page’s biggest element doesn’t load fast, the user experience won’t be good. Visitors are likely to leave after waiting several seconds for a blank page to load, which leads to higher bounce rates. Therefore, measuring and improving the LCP score is important for SEO.

A good LCP score is 2.5 seconds or less. Various tools can help you identify the largest element on your page and measure its performance, such as PageSpeed Insights, WebPageTest, and GTmetrix. You can use them to test the current LCP score of a webpage, optimize the content if necessary, and then compare the LCP score of the optimized page with the original score.
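Once you have LCP numbers from a tool like PageSpeed Insights, comparing the original and optimized page is simple arithmetic. The sketch below rates a value against Google’s published thresholds (good at 2.5 s or less, poor above 4 s) and, following Google’s guidance to look at the 75th percentile of page loads, summarizes several test runs by their 75th percentile. The function names and the sample timings are hypothetical; the code does not call any testing tool.

```python
from statistics import quantiles

GOOD_MAX_S = 2.5  # good: LCP <= 2.5 s
POOR_MIN_S = 4.0  # poor: LCP > 4.0 s; anything in between needs improvement


def classify_lcp(seconds: float) -> str:
    if seconds <= GOOD_MAX_S:
        return "good"
    if seconds <= POOR_MIN_S:
        return "needs improvement"
    return "poor"


def p75(samples: list[float]) -> float:
    """75th percentile of LCP measurements (older Pythons need 2+ points for quantiles)."""
    if len(samples) == 1:
        return samples[0]
    return quantiles(samples, n=4, method="inclusive")[2]


def compare_lcp(before: list[float], after: list[float]) -> dict:
    """Summarize LCP runs for the original and optimized versions of a page."""
    b, a = p75(before), p75(after)
    return {
        "before_p75_s": round(b, 2),
        "after_p75_s": round(a, 2),
        "before_rating": classify_lcp(b),
        "after_rating": classify_lcp(a),
        "change_s": round(a - b, 2),  # negative means the page got faster
    }


# Hypothetical measurements (seconds) from five runs before and after optimizing.
BEFORE = [3.8, 4.6, 4.1, 5.2, 4.4]
AFTER = [2.1, 2.4, 1.9, 2.6, 2.2]

print(compare_lcp(BEFORE, AFTER))
```

Using a percentile rather than a single run keeps one unusually fast or slow test from deciding whether an optimization “worked”.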

There are plenty of ways to improve your website’s ranking. In my experience, web scraping tools are one of the most powerful ways to do it at little cost. Give it a try!