Web crawlers tend to crawl a larger amount of pages and content as long as they are new and updated for Search Engines such as Google. Their mission focuses on the action “search”. While web scrapers are usually tools used to not only search desired information, but most importantly scrape them off and output them in structured data for later analysis.

| Web Crawling | Web Scraping | |

| Purpose | For visibility on Search Engine | Data Analysis in all industry |

| Mission | Search for new pages and updated content | Find desired content from specific url(s) and scrape them off |

| Application | SEO | Marketing, finance, lead, life, education, social media, consulting… |

Though web crawler is mainly related to SEO, it is sometimes interchangeable to mean web scraper. If you are not sure, use web scraper if you want to find tools to simplify your data extraction.

Having outlined the fundamental distinctions between web crawling and web scraping, let’s dive deeper into the principles and application of each process. This will help us understand not only how they differ in functionality and purpose but also how they are applied in various fields.

At the end of the article, you will be able to understand:

1. Basic differences between web scraping vs web crawling

2. Techniques of both approaches and how they are different

3. Why do you need both techniques in real-world projects

4. How modern tools simplify the process end-to-end without worrying about implementation

Web Crawler And Its SEO Significance

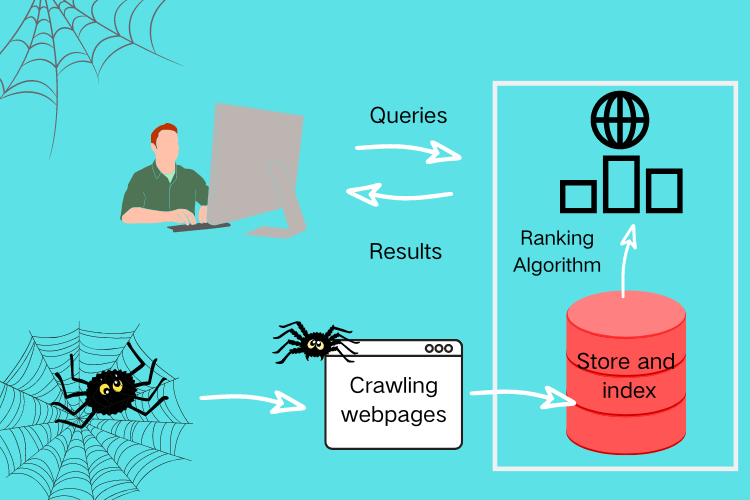

Web crawler, or spider, is a bot used by search engines to find new web pages or updated content in a known/indexed web page. It is highly important in the field of SEO as if your pages are not crawled by these crawlers, they cannot be indexed by search engines like Google. And there is no way audience can see your page even if they search a whole paragraph copied from your original text!

How Does A Web Crawler Work?

From a technical perspective, web crawlers start with seed URLs—initial web pages that serve as entry points for the discovery process.

Once the crawler fetches a page’s HTML content, it parses through the code to identify all hyperlinks referenced on that page. These newly discovered URLs are then added to a queue for processing. This iterative discovery process continues, building connections between pages like a spider weaving its web.

It’s important to note that responsible crawlers always respect the robots.txt file—a set of rules that website owners use to specify which pages should or shouldn’t be crawled. Search engine crawlers strictly adhere to these guidelines to maintain ethical crawling practices.

If the above is a bit difficult for you, think of the web crawler as a librarian. And the world wide web is the library where millions of books are stored. Here books are referred to web pages. There are new books coming in every day just like there are new web pages being created every minute. And the job of librarians, is to find those new books and categorize them by finding out what they are about, like book name, author, description, content, etc. And what web crawlers need to do is to find new pages and download their content. After that, the crawled pages will be stored waiting for indexing according to the category they fall into.

Example of a Web Crawler in SEO

Not only can search engines use crawlers to crawl pages, we can also use some website crawler tools to improve our SEO ranking visibility and achieve potentially higher conversions.

These tools are invaluable for identifying common SEO issues such as broken links, duplicate content, and missing page titles, which are crucial for optimizing your site. With a variety of web crawler tools available, it’s possible to select one that best fits your needs. These tools not only crawl data from website URLs but also help in restructuring your website to make it more comprehensible to search engines, thus improving your site’s ranking.

Below are some of top web crawlers. Each known for its unique features and capabilities:

Semrush

One powerful tool is Semrush, which goes beyond basic crawling functionalities to provide a comprehensive analysis of page structure, helping to identify technical SEO issues and enhance search performance. Semrush supports a wide range of SEO tasks, including keyword research, competitive analysis, and much more. It’s especially valuable for those who need in-depth insights into their SEO strategy and the competitive landscape.

Screaming Frog

Another prominent example of a web crawler tool is Screaming Frog. This tool is designed to crawl website URLs to help webmasters analyze and audit their site’s SEO in real-time. It can quickly identify broken links, analyze page titles and metadata, and even generate XML sitemaps. This kind of comprehensive crawling capability is essential for maintaining an up-to-date, SEO-friendly website.

By integrating these tools into your SEO strategy, you can ensure your website remains visible and competitive in the increasingly crowded digital landscape.

How Does A Web Scraper Work?

All our web pages are structured in HTML, and what web scrapers do is parse the HTML in the page and locate the designated area that stores your desired data. Think of HTML as a hierarchical document with nested elements—like boxes within boxes.

The scraping process involves several key steps:

1. Fetching the Page: The scraper first retrieves the HTML content of the target webpage.

2. Parsing the Structure: It then analyzes the HTML structure to understand how data is organized. Scrapers use specific techniques to identify elements:

- CSS Selectors: These target elements based on their class names, IDs, or tags (e.g., finding all products with class=”product-price”)

- XPath: A more powerful method that navigates through the HTML tree structure to pinpoint exact locations of data

3. Extracting Data: Once the scraper locates the designated area containing your desired information, it extracts the values—whether that’s product prices, article titles, contact information, or images.

4. Structuring Output: Finally, the extracted data is transformed into your preferred format—typically CSV files, Excel spreadsheets, or JSON for easy analysis.

Most importantly, this automated process can be set to repeat at an interval, so you can gather fresh data continuously without manual intervention. Web scrapers like Octoparse use AI-powered intelligent parsing that can automatically recognize data patterns, which means the extraction process is 10 times more efficient and adaptable to website changes.

TL;DR

If a web crawler extracts data from a large database like the world wide web (WWW) then web scraper focuses on a much smaller area, such as, a specific website(url), or a batch of websites. The purpose of web scraper is for personal or business usage.

Helpful Resources:

There are also many other techniques from “ieeexplore” you can check out about web data extraction.

Why Do I Need A Web Scraper?

Today we are all swamped by seemingly countless information. And data analysis (including cleaning and processing) becomes ever more imperative to the point that even a bit of information can decide a company’s fate. In such cases, how to gather information quickly and efficiently for market research becomes a skill one needs to survive in this information competing era.

Web scraper, usually a pragram written by python or a software, can help us extract desired unstructured data from designated areas of a webpage, and then transform them into organized or predefined struture in excel or CSV.

For example, as an ecommerce shop owner, price monitoring is essential to keep one’s own price edge. However, copy and paste prices into your database from different shops is time consuming and makes people grumpy. Not to mention there will be duplicate content!

Using a web scraper can beat the headache. You can write the code script and the scraper will help you do the job. No knowledge of programming knowledge? Check Octoparse, a free no-coding solution!

How Many Types of Scrapers Are There?

There are two types of scrapers. One is self-built, written in code, usually python. The other is pre-built scraper such as software, or browser extension.

Writing your own code is time-consuming and it poses a hurdle for those no-coders. While there are software scrapers that help you with python libraries, no-coding-required softwares present a much easier solution.

No-coding Software

Take Octoparse for example.

It simulates the process of human beings extracting data in the page by pointing and clicking. All you need is just a targeted url, and Octoparse will auto-detect the data area for you to choose from. Of course, you can choose yourself within a few simple clicks. Then an automated workflow will be created for you signaling the sequence of the steps one needs to take to copy and paste data. Simple, right?

Software scrapers also have more advanced options than self-built scrapers and extensions. For example, Cloud Run feature. This is the greatest thing that has happened to SME who focuses on efficiency. Cloud run means all your scraping projects can be run on the cloud servers instead of your local server. And meanwhile, your local server can focus on other tasks.

APIs

For those who don’t want to spend extra time on building and testing, APIs(application programming interfaces)will be a better option. Now many large websites such as Google, hubspot, Facebook, ChatGPT, offer APIs that allow you to access their data in a structured format. And normally API will be more stable in data collection as it is consumed by programs rather than by human eyes. Even if the front end presentation of a website changes, its API structure remains unchanged, which makes API a more reliable source of data.

If you are not sure about which web scrapers to start you data pipeline, check out our recommendation on “Best Free Web Scraper” that we tested.

How Web Scraping and Web Crawling Work Together

The best data pipelines use both scraping and crawling in combination to achieve the best results so I want to show you how they work together.

Scraping starts after crawling is finished, meaning that the exploration process is done, and now it is up to scrapers (miners) to extract this data from the locations already provided by crawlers. Each of the processes plays a special role and is crucial in gathering high-quality data.

I will give you an example of the price monitoring tool I built using the combination of these techniques for a company that wanted to observe the competitors. When starting such a project I begin with a list of seed URLs for the crawling process, which would regularly check other retain’s sitemaps and following links to discover all products in their catalogue.

When starting the extraction it was easier as discovery did a lot of work already by preparing the products and all the location of the data I need, like prices, names and descriptions. Regular scraping is also part of it as there are always new reviews coming in and we want constant flow of data.

By doing this I achieved giving insights into workflows of competitors and giving me the advantage to see where the market is heading.

How Web Scraper Fuels Your Business?

- Price Monitoring

Price competitiveness accounts for a large part in product sale and that’s why price monitoring becomes essential for ecommence shops to gain an edge in this cut-throat competition. Instead of copying and pasting prices of different products from different sources, shop owners can now use scraping tools to get that information not just within minutes but also avoid wrong or duplicate content caused by human eyes! With the data at hand, shop owners can also adjust inventory based on market trends such as demand and supply and avoid possible risk of unnecessary product hoarding.

The Internet is growing at exponential speed and there is no doubt these vast amount of information, over 1.9 billion websites, which will still be growing, contain as many valueable insights as our brain can reach. For any start-ups who want to enter a new business or industry, existing product offerings, specs and marketing share will help them notice a wiser niche to explore. And for those growing companies, consumer behavior and preferences analysis make sure that their products always align with the market needs.

Lead collection will be a time-consuming headache for B2B companies. Especially when the sources are scattered in different platforms. With scraping tools, even companies that don’t have developers can fasten this gathering process and focus more on what they actually excel at.

With scraper, real estate agents can compare the price of properties across different platforms and even build from scratch a comprehensive database of properties listing. Buyers can also gain knowledge of a property’s neighborhood by extracting information about schools, facilities, crime rates and hospitals before taking an action. More details about the property like floors, parking spaces and room size can also be collected. For investors, they can make a wiser investment based on the evaluation of past data on price change and purchase trends.

Essay writing normally entails large amounts of data for experiment or context analysis. And web scrapers can help students collect statistical data online to support trend analysis and effectiveness of certain practices. Except for past data, scholars are also able to track and gather real-time data to ensure data freshness by setting a project that runs periodically.

For businesses where current events significantly impact operations, web scraping provides a means to monitor news outlets and social media platforms continuously. This real-time information can be vital for crisis management, public relations, and staying informed about industry developments. Financial institutions, for example, may use web scraping to track news that could affect stock prices or market conditions.

Web scraping is instrumental in sentiment analysis, where companies assess public opinion about their brand, products, or services through data collected from social media and review sites. This analysis helps in product improvement, customer service enhancement, and effective marketing. For example, a tech company might scrape online forums and tech blogs to gauge consumer reactions to a new product launch.

These examples underscore the importance of web scraping in providing actionable insights and supporting strategic decisions across various sectors. Yet there are still many other possibilities for you to explore.

To Sum Up

You can choose what suits you at the present moment, but do check the terms of service of the website you are going to scrape before action to avoid any rule violation.

Tips for Action

Beyond the point-and-click simplicity, Octoparse leverages AI technology to make scraping even more efficient. Its intelligent parsing feature can automatically recognize data patterns and adapt when dynamic website structures change. The platform’s adaptive crawling capability means your scrapers can handle hundreds of pages across complex website architectures without breaking.

When combined with built-in proxy rotation and CAPTCHA bypass features, Octoparse addresses the most common blocking issues that would otherwise require extensive programming knowledge to solve.

If you are a no-coder or developer who wants to save some effort, no coding software is your perfect go-to solution. It can save you the headache of script writing and do all the necessary jobs for you, such as IP rotation, ready-to-use template, duplicate content removed, cloud run, etc. Check out Octoparse for all the features mentioned above by starting a 14 days free trial with us!

Turn website data into structured Excel, CSV, Google Sheets, and your database directly.

Scrape data easily with auto-detecting functions, no coding skills are required.

Preset scraping templates for hot websites to get data in clicks.

Never get blocked with IP proxies and advanced API.

Cloud service to schedule data scraping at any time you want.

FAQs

1. What tools should I use for web scraping vs web crawling?

For web crawling focused tasks I use dedicated crawlers like Scrapy or Nutch (Apache) that work well for developers. Scraping is usually performed by tools like Beautiful Soup or Selenium that handle such data extraction methods. However using modern platforms for their integrations like Octoparse can provide both capabilities in one tool that simplifies the whole process.

2. Is web scraping legal? Is web crawling legal?

When respecting the rules of the websites I am scraping or crawling, both techniques are generally legal. The rules I need to respect are in regards to sensitive and personal data which is very specific how it is scraped and what is done with it afterwards. Also when dealing with large amounts of data I should respect the server loads of the target websites and not overload them.

On more information refer to a extended research here.

3. Do I need to know programming to scrape or crawl websites?

If you choose the path of standard tools like Scrapy or Selenium then yes, programming is required in a languages like Python, but going with Octoparse makes this option a no-code alternative with point-and-click interfaces.

4. What’s the difference between web scraping and using an API?

Through the API you can only obtain data that is limited by the developers of a certain website, while with scraping you could access data that is also available from the pages of the whole website.

Both approaches in combination provide a full palette of data that is later used for processing and analysis.

Lastly API is documented and almost always legal approach, that is monitored by both sides making it a safer option when planning to use this data commercially.

5. Can I scrape data from Google, Facebook, or other major platforms?

The biggest platforms and sources of information are usually very strict about scraping directly from their websites.

It can be possible to do so from a technical perspective like in cases of Google maps or Amazon prices, but you are limited in using this data later because of legal limitations. Using an API in such cases is usually a better alternative where everything is documented.

On more about web scraping legality, check out this “Is Web Scraping Legal” guide.