Web scraping, also known as web harvesting and web data extraction, basically refers to collecting data from websites via the Hypertext Transfer Protocol (HTTP) or through web browsers.

Tips: Read What Is Web Scraping – Basics & Practical Uses to get a more comprehensive understanding of web scraping and its pros and cons.

In this guide I will present the timeline that traces its commercial rise, key milestones, and brands like Octoparse that made data extraction accessible to businesses worldwide.

How does web scraping work?

Technically, web scraping has always meant automating what a browser does: requesting pages, interpreting their structure, and extracting the relevant pieces into a dataset.

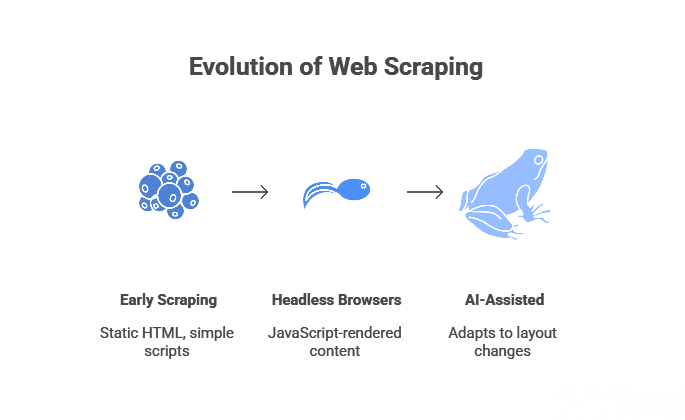

- Early web scraping: static HTML price lists parsed by simple scripts.

- Later: headless browsers handling JavaScript‑rendered content.

- Today: AI‑assisted scrapers that adapt to layout changes and anti‑bot defenses.

I know what you think — web scraping looks good on paper but actually more complex in practice. We need coding to get the data we want, which makes it the privilege of who’s a master of programming. As an alternative, there are web scraping tools automating web data extraction at the fingertips.

A web scraping tool will load the URLs given by the users and render the entire website. As a result, you can extract any web data with simple point-and-click and file in a feasible format into your computer without coding.

For example, you might want to extract posts and comments from Twitter. All you have to do is to paste the URL to the scraper, select desired posts and comments and execute.

It can save you tremendous time and effort from the mundane work of copy-and-paste.

How did web scraping all start?

Though to many people, it sounds like a brand-new concept, the history of web scraping can be dated back to the time when the World Wide Web was born.

In the very beginning, the Internet was even unsearchable. Before search engines were developed, the Internet was just a collection of File Transfer Protocol (FTP) sites in which users would navigate to find specific shared files. To find and organize distributed data available on the Internet, people created a specific automated program, known as the web crawler/bot today, to fetch all pages on the Internet and then copy all content into databases for indexing.

Then the Internet grows, eventually becoming the home to millions of web pages that contain a wealth of data in multiple forms, including texts, images, videos, and audio. It turns into an open data source.

As the data source became incredibly rich and easily searchable, people started to find it simple to seek the information they want, which often spread across a large number of websites, but the problem occurred when they wanted to get data from the Internet—not every website offered download options, and copying by hand was obviously tedious and inefficient.

And that’s where web scraping came in. Web scraping is actually powered by web bots/crawlers that function the same way those used in search engines. That is, fetch and copy. The only difference could be the scale. Web scraping focuses on extracting only specific data from certain websites whereas search engines often fetch most of the websites around the Internet.

What Does the Evolution of Web Scarping Looks Like:

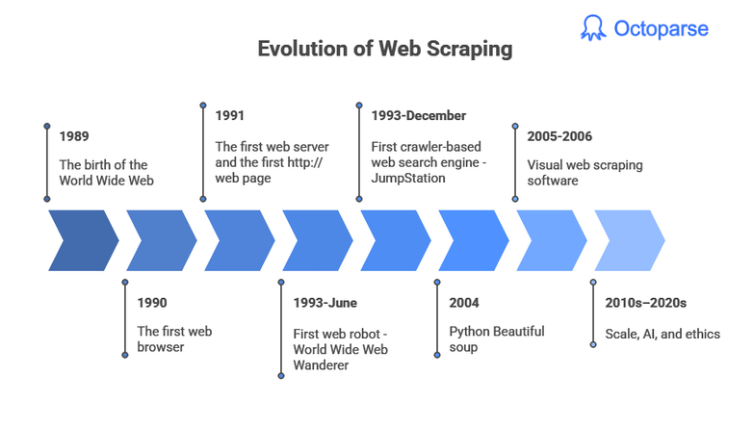

1989 The birth of the World Wide Web

Technically, the World Wide Web is different from the Internet. The former refers to the information space, while the latter is the network made up of computers.

Thanks to Tim Berners-Lee, the inventor of WWW, he brought the following 3 things that have long been part of our daily life:

Uniform Resource Locators (URLs) which we use to go to the website we want; embedded hyperlinks that permit us to navigate between the web pages, like the product detail pages on which/where we can find product specifications and lots of other things like “customers who bought this also bought”; web pages that contain not only texts but also images, audios, videos, and software components.

1990 The first web browser

Also invented by Tim Berners-Lee, it was called WorldWideWeb (no spaces), named after the WWW project. One year after the appearance of the web, people had a way to see it and interact with it.

1991 The first web server and the first http:// web page

The web kept growing at a rather mild speed. By 1994, the number of HTTP servers was over 200.

1993-June First web robot – World Wide Web Wanderer

Though functioned the same way web robots today do, it was intended only to measure the size of the web.

1993-December First crawler-based web search engine – JumpStation

As there were not so many websites available on the web, search engines at that time used to rely on their human website administrators to collect and edit the links into a particular format. JumpStation brought a new leap. It is the first WWW search engine that relies on a web robot.

Since then, people started to use these programmatic web crawlers to harvest and organize the Internet. From Infoseek, Altavista, and Excite, to Bing and Google today, the core of a search engine bot remains the same: find a web page, download (fetch) it, scrape all the information presented on the web page, and then add it to the search engine’s database.

As web pages are designed for human users, and not for ease of automated use, even with the development of the web bot, it was still hard for computer engineers and scientists to do web scraping, let alone normal people. So people have been dedicated to making web scraping more available. In 2000, Salesforce and eBay launched their own API, with which programmers were enabled to access and download some of the data available to the public. Since then, many websites offer web APIs for people to access their public databases. APIs offer developers a more friendly way to do web scraping, by just gathering data provided by websites.

2000s APIs, Libraries, and the First Commercial Tools

Salesforce and eBay launched APIs in 2000, offering structured data access and signaling the birth of “data products.” Programmers turned to Python’s Beautiful Soup (2004), a parsing library that simplified HTML extraction for developers building price trackers and lead generators.

2004 Python Beautiful soup

Not all websites offer APIs. Even if they do, they don’t provide all the data you want. So programmers were still working on developing an approach that could facilitate web scraping. In 2004, Beautiful Soup was released. It is a library designed for Python.

In computer programming, a library is a collection of script modules, like commonly used algorithms, that allow being used without rewriting, simplifying the programming process. With simple commands, Beautiful Soup makes sense of site structure and helps parse content from within the HTML container. It is considered the most sophisticated and advanced library for web scraping, and also one of the most common and popular approaches today.

2005-2006 Visual web scraping software

In 2006, Stefan Andresen and his Kapow Software (acquired by Kofax in 2013) launched Web Integration Platform version 6.0, something now understood as visual web scraping software, which allows users to simply highlight the content of a web page and structure that data into a usable excel file, or database.

Finally, there’s a way for the massive non-programmers to do web scraping on their own. Since then, web scraping is starting to hit the mainstream. Now for non-programmers, they can easily find more than 80 out-of-box data extraction software that provides visual processes.

2010s: No-Code SaaS and Data-as-a-Service Boom

Cloud computing fueled scale: AWS, Google Cloud, and Azure enabled distributed crawlers with proxy rotation via providers like Bright Data (formerly Luminati). No-code platforms exploded, turning scraping into SaaS.

Octoparse emerged around 2014, offering point-and-click templates for e-commerce, social media, and job boards, plus cloud runs and IP rotation—making it a go-to for marketers without dev teams.

2010s–2020s scale, AI, and ethics

In the 2010s, web scraping moved from single‑machine scripts to large distributed crawlers running on cloud platforms such as AWS, Google Cloud, and Azure.

These systems combined headless browsers like Headless Chrome with automation frameworks such as Selenium and Playwright to load JavaScript‑heavy pages and collect data for analytics, price monitoring, and, increasingly, machine learning and AI models.

At the same time, engineers began to use machine learning inside the scrapers themselves. Instead of relying only on fixed CSS selectors, teams trained models to recognize page layouts, detect product blocks or review sections, and automatically adapt when sites changed their HTML structure. This made tools and services from a growing ecosystem of “data‑as‑a‑service” and proxy providers more powerful, because they could maintain large‑scale crawlers with less manual rework.

This new scale triggered legal and ethical questions. Data protection laws such as the GDPR in the European Union and the CCPA in California, along with high‑profile disputes like hiQ Labs v. LinkedIn, pushed companies to think carefully about how they collect and use public web data. For modern organizations, web scraping is no longer just about writing a crawler; it also involves reviewing terms of service, respecting robots.txt and access controls, and setting clear policies for consent, retention, and responsible use of scraped data.

How will web scraping be?

We collect data, process data, and turn data into actionable insights. It’s proven that business giants like Microsoft and Amazon invest a lot of money in data collection about their consumers so as to target people with personalized ads. whereas, small businesses are muscled out of the marketing competition as they lack spare capital to collect data.

Thanks to web scraping tools, any individual, company, or organization are now able to access web data for analysis. When searching “web scraping” on guru.com, you can get 10,088 search results, which means more than 10,000 freelancers are offering web scraping services on the website.

The rising demands for web data by companies across the industry prosper the web scraping marketplace, and that brings new jobs and business opportunities.

Meanwhile, like any other emerging industry, web scraping brings legal concerns as well. The legal landscape surrounding the legitimacy of web scraping continues to evolve. Its legal status remains highly context-specific. For now, many of the most interesting legal questions emerging from this trend remain unanswered.

One way to get around the potential legal consequences of web scraping is to consult professional web scraping service providers. Octoparse stands as the best web scraping company that offers both scraping services and web data extraction tools. Both individual entrepreneurs and big companies will reap the benefits of their advanced scraping technology.