Did you know that the same product can have different prices depending on where you browse from? Many websites tailor content, pricing, or access based on your location, hiding certain deals or pages from foreign IPs. GEO-targeted proxies let you appear as though you’re browsing from another country or city — unlocking region-specific information.

In this post, we’ll explore what GEO-targeted proxies are, why they matter, and how to set them up (especially in Octoparse) for accurate, localized scraping.

What Are GEO-targeted Proxies

A GEO-targeted proxy is an intermediary server located in a specific geographic location that routes your web traffic through that region. So, if you connect via a GEO-targeted proxy in Germany, for instance, websites will recognize a German IP address and deliver content as if you were actually browsing from places like Berlin or Munich.

How GEO-targeted Proxies Work

The process is straightforward:

- You send a request to access a website through your scraping tool

- The proxy intercepts your request and forwards it from its server location

- The target website receives the request from the proxy’s IP address, not yours

- The website responds with location-specific content based on the proxy’s location

- The proxy sends that content back to you

This allows you to get around geo-restrictions and access region-specific versions of websites without physically being present in that location.
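If you script requests yourself rather than using a no-code tool, the flow above can be sketched with Python's standard library. This is a minimal illustration, not any provider's official setup: the proxy host `de.proxy.example.com`, the port, and the credentials are made-up placeholders.

```python
import urllib.request
from urllib.parse import quote


def build_proxy_url(host, port, username=None, password=None):
    """Return an http:// proxy URL, percent-encoding any credentials."""
    auth = ""
    if username is not None:
        auth = quote(username, safe="")
        if password is not None:
            auth += ":" + quote(password, safe="")
        auth += "@"
    return f"http://{auth}{host}:{port}"


def build_opener_via(proxy_url):
    """Create an opener that sends HTTP and HTTPS requests through the proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Hypothetical German exit node; substitute your provider's real endpoint.
proxy_url = build_proxy_url("de.proxy.example.com", 8000, "user", "p@ss")
opener = build_opener_via(proxy_url)
print(proxy_url)  # http://user:p%40ss@de.proxy.example.com:8000

# opener.open("https://example.com", timeout=10) would now be routed
# through the proxy, so the target site sees the proxy's IP, not yours.
```

The actual request is left commented out so the snippet runs without a live proxy.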

Types of GEO-targeted Proxies

There are several types of GEO-targeted proxies, each with distinct characteristics:

- Residential Proxies: These use IP addresses assigned by Internet Service Providers (ISPs) to real residential devices. They’re the most authentic because websites see them as genuine home internet connections. However, they’re typically slower and more expensive than other options.

- Datacenter Proxies: These come from servers in data centers rather than residential ISPs. They’re faster and cheaper but easier for websites to detect and potentially block since they don’t originate from residential networks.

- Mobile Proxies: These route traffic through mobile carrier networks (4G/5G), using IPs assigned to mobile devices. They’re excellent for accessing mobile-specific content but usually the most expensive option.

The standout feature of GEO-targeted proxies is the level of control they offer over your location. Rather than simply pulling a random IP from a vast pool, you can choose the exact country, state, or even city from which you want your traffic to appear.

For instance, if you connect through a proxy in Germany, websites will recognize you as a German visitor. This opens the door to German pricing, content in the German language, and unique features that might not be available to users with IP addresses from the U.S. or Asia.

Residential vs. Datacenter GEO-targeted Proxies

Quick Comparison:

| Feature | Residential | Datacenter |
| --- | --- | --- |
| Authenticity | Very high (real ISP IPs) | Lower (data center IPs) |
| Speed | Moderate (residential internet) | Fast (server infrastructure) |
| Block Rate | Low | Higher on protected sites |
| Cost | Higher ($5-15/GB) | Lower ($1-3/GB or per IP) |
| Best For | E-commerce, social media, protected sites | Large-scale scraping, public data, speed-critical tasks |
| Geo-Targeting | Highly accurate (city-level) | Accurate (country/region-level) |

Residential GEO-targeted Proxies

Residential proxies use IP addresses from real devices connected to residential ISPs. When a website checks where your request comes from, it sees a legitimate home internet connection, just as if a regular person were browsing.

Key advantages:

- High authenticity: Websites trust residential IPs because they’re indistinguishable from real users

- Lower block rates: Anti-scraping systems rarely flag residential IPs as suspicious

- Better for sensitive sites: Essential for e-commerce, social media, and sites with strict detection

- Precise GEO-location: Often provide city-level targeting accuracy

Limitations:

- Slower speeds: Residential connections are typically slower than data center infrastructure

- Higher cost: Usually priced per GB of traffic, making them more expensive

- Variable reliability: Connection quality depends on the residential device’s internet connection

Datacenter GEO-targeted Proxies

Datacenter proxies are sourced from commercial servers located in data centers rather than from residential ISP networks. They offer faster speeds but look less like genuine user traffic.

Key advantages:

- Faster speeds: Data center infrastructure provides significantly higher bandwidth

- Lower cost: Usually priced per IP or bandwidth, making them budget-friendly for large-scale projects

- Consistent performance: Professional server infrastructure means reliable uptime

- Good for less sensitive sites: Work well on public data sources and less protected websites

Limitations:

- Easier to detect: Websites can identify datacenter IP ranges and may block them

- Higher block rates: More likely to trigger anti-bot measures on protected sites

- Less authentic: Sophisticated detection systems can readily tell that the traffic doesn’t come from real users

Which Proxy Type Should You Choose?

Use residential proxies when you’re scraping:

- E-commerce sites with pricing data

- Social media platforms

- Websites with strong anti-bot protection

- Sites where being blocked would significantly impact your project

Use datacenter proxies when you’re scraping:

- Public data sources (government sites, news sites)

- Large volumes where speed matters

- Budget-constrained projects

- Sites with minimal anti-scraping measures

In my experience, starting with datacenter proxies for testing makes sense—they’re cheaper and faster for development. Then switch to residential proxies for production scraping on protected targets. Some projects even use both: datacenter for basic tasks and residential for the sensitive operations.

Why You Need GEO-targeted Proxies for Web Scraping

Let me share some real scenarios where GEO-targeted proxies become essential tools rather than optional extras.

- Access Authentic Region-Specific Content

Websites don’t just change language based on location — they fundamentally change what they show you.

- E-commerce pricing: Take Amazon, for instance. They show different prices, product availability, and shipping options depending on your location. I once helped a retail client compare laptop prices across five different markets and discovered price differences of up to 30% for the same models, driven not by currency fluctuations but by distinct pricing strategies.

- Travel and hospitality: Hotel booking sites and airlines adjust their pricing based on your location. A hotel room in Bangkok might cost $80 when browsed from Thailand but show $120 when accessed from the US or Europe.

- Job listings: LinkedIn, Indeed, and other job boards only show opportunities relevant to your location. If you’re a recruitment firm gathering global job market data, you need proxies from each target region to see the actual postings local candidates see.

- Content libraries: Streaming services, news websites, and media platforms restrict content by region due to licensing agreements. Without GEO-proxies, you can’t verify what content is actually available in different markets.

- Avoid IP Bans Through Geographic Rotation

When you’re scraping large volumes of data, rotating your requests across different geographic locations helps you stay under the radar.

So, why is this important? Well, websites are pretty savvy about tracking request patterns from individual IP addresses. If they notice 10,000 requests coming from a single US IP in just an hour, that IP will almost certainly be blocked.

However, if those requests are spread out over 100 different IPs from various countries, each making a reasonable number of requests, it looks just like normal global traffic.

I learned this the hard way on a price monitoring project. Using a single US-based proxy pool, we got blocked within hours. After switching to GEO-distributed proxies rotating between the US, UK, Germany, and Canada, the blocks stopped entirely. The site’s anti-scraping system saw diverse global traffic instead of suspicious concentrated activity.
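To make the arithmetic concrete, here is a rough sketch of spreading 10,000 requests across a pool of 100 regional IPs in round-robin order. The proxy hostnames and the shop URL are invented for illustration, and a real setup would also add delays and retries.

```python
from collections import Counter
from itertools import cycle


def assign_round_robin(requests, proxies):
    """Pair each request with the next proxy in the pool, wrapping around."""
    pool = cycle(proxies)
    return [(req, next(pool)) for req in requests]


# Hypothetical pool: 25 exit IPs in each of four countries.
proxies = [
    f"{cc}-{i:02d}.proxy.example.com:8000"
    for cc in ("us", "uk", "de", "ca")
    for i in range(25)
]
urls = [f"https://shop.example.com/item/{n}" for n in range(10_000)]

plan = assign_round_robin(urls, proxies)
per_proxy = Counter(proxy for _, proxy in plan)
print(len(per_proxy), max(per_proxy.values()))  # 100 100
```

Each IP ends up with 100 requests instead of one IP carrying all 10,000, which is the pattern described above.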

- Bypass Geo-Restrictions for Global Data Collection

Some websites simply block access from certain countries. News sites might restrict access from regions where they don’t operate. Government databases might only be accessible from within the country. E-commerce sites may redirect foreign visitors to different regional sites.

For instance, a market research client needed to scrape product reviews from a European retailer’s website. The site blocked all non-European IP addresses completely — not just showing different content, but denying access entirely. German residential proxies solved this immediately.

- Verify Localized Marketing and SEO

If your business operates in multiple countries, you need to verify that:

- Your ads appear correctly in different regions

- Search engine results show your content appropriately by location

- Competitor analysis reflects what local customers actually see

- Your website displays properly for international visitors

GEO-targeted proxies let you see your online presence exactly as customers in Tokyo, London, or São Paulo see it — without flying there or setting up VPNs on multiple devices.

- Collect Competitive Intelligence Across Markets

To understand how competitors position themselves in different regions, you first need to see what they show local customers. A competitor might offer aggressive pricing in one market while maintaining premium pricing in another. They might emphasize different features or benefits depending on regional preferences.

With GEO-targeted proxies, you can monitor pricing, competitor websites, messaging, and offerings across every market where they operate—giving you the intelligence to compete effectively in each region.

The key is that GEO-targeted proxies aren’t just about accessing blocked content. They’re about seeing the real, accurate, localized version of the internet that users in each region actually experience. For serious web scraping and data collection, they’re essential infrastructure.

How to Scrape Region-Specific Data in Octoparse (Step-by-Step)

If you’re looking for a straightforward way to implement GEO-targeted scraping without wrestling with complex proxy configurations, Octoparse makes the whole process surprisingly simple.

Why Octoparse Stands Out for GEO-targeted Scraping

I’ve tested dozens of scraping tools over the years, and what impressed me about Octoparse is how it removes the technical friction from proxy management while still giving you the control you need.

Octoparse is a no-code web scraping tool that allows users to extract data from any website quickly and easily. Boasting an intuitive point-and-click interface, built-in scraping templates, and cloud-based automation, Octoparse makes it simple to collect, organize, and export web data without any programming skills.

- Built-in residential proxies: Octoparse provides residential IP proxies directly in the platform. You don’t need to source, purchase, and configure third-party proxy services separately. The proxies are already integrated and work out of the box—which saved me hours of setup time on my last project.

- No coding required: The visual workflow builder lets you create scraping tasks by clicking on the data you want. Adding proxy support is as easy as flipping a switch in the task configuration—no need to write authentication scripts or deal with proxy rotation logic.

- Cloud execution with automatic IP rotation: When you run tasks in Octoparse’s cloud, they’re automatically distributed across multiple cloud nodes, each with a unique IP address. This natural IP rotation significantly reduces detection and blocking risks while keeping your local IP completely hidden.

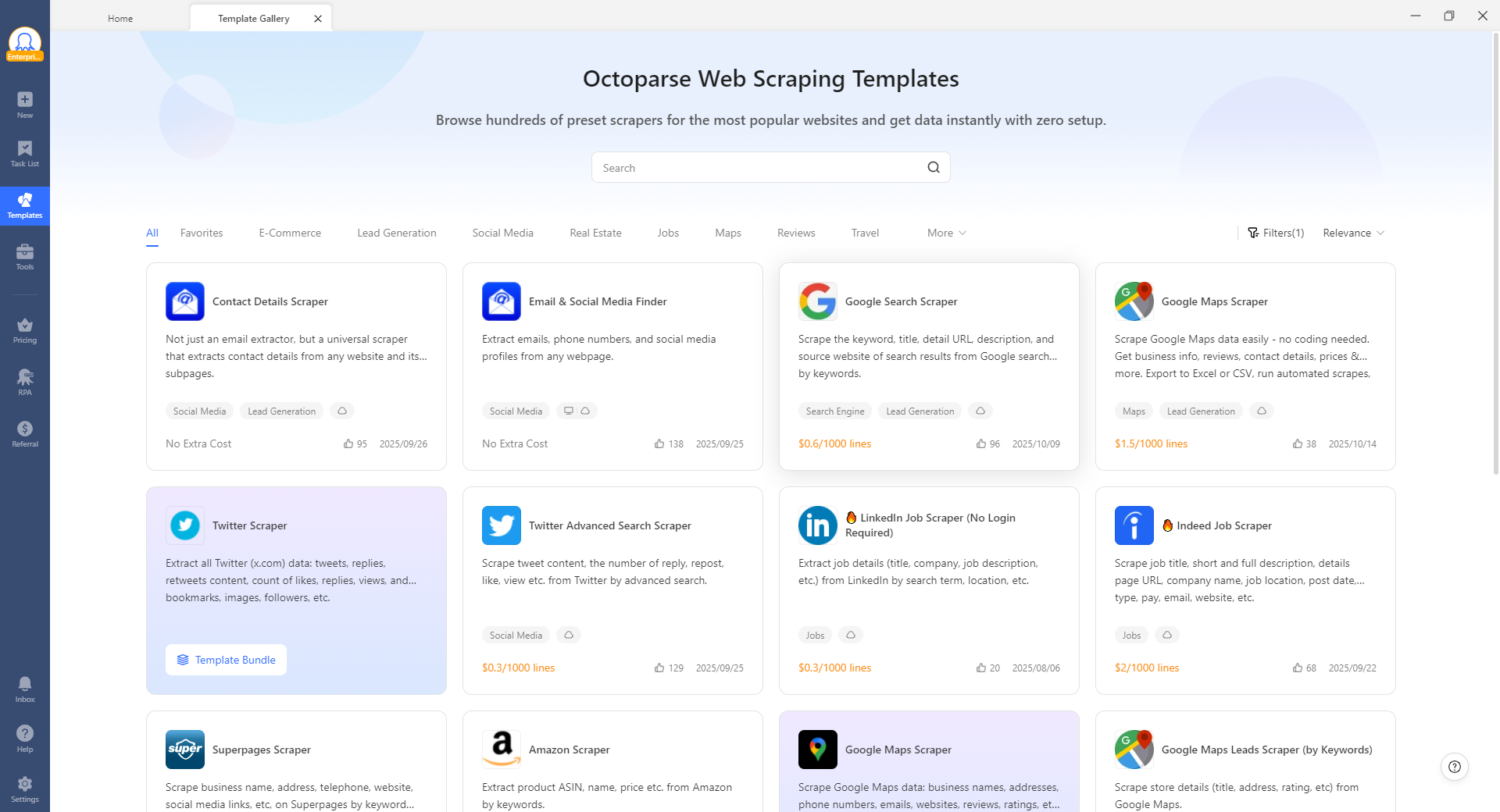

- Template marketplace: Octoparse offers some pre-built templates for popular websites (Amazon, eBay, LinkedIn, etc.) that already include proxy settings optimized for those sites. Just select your target region before running the template.

How to Scrape Data by Country or Region in Octoparse (Step-by-Step)

Let me walk you through the exact process of setting up GEO-targeted scraping in Octoparse. I’ll use a practical example: scraping product prices from an e-commerce site to compare pricing across different countries.

Before you start, make sure you have:

- Octoparse downloaded and installed

- An Octoparse account

- Your target website and the data you want to extract identified

- A list of regions/countries you want to scrape from

- Enough credits or the right subscription level if using cloud features

Step 1: Choose Your Target Regions

First, determine which geographic locations matter for your project. Consider:

- Market priority: Focus on regions where you do business or want to expand

- Data availability: Some sites may only have meaningful differences in major markets

- Budget constraints: More regions = more proxy usage = higher costs

- Data volume: Scraping from many regions increases total request volume

For my example, I’ll target the US, UK, and Germany to compare electronics pricing.

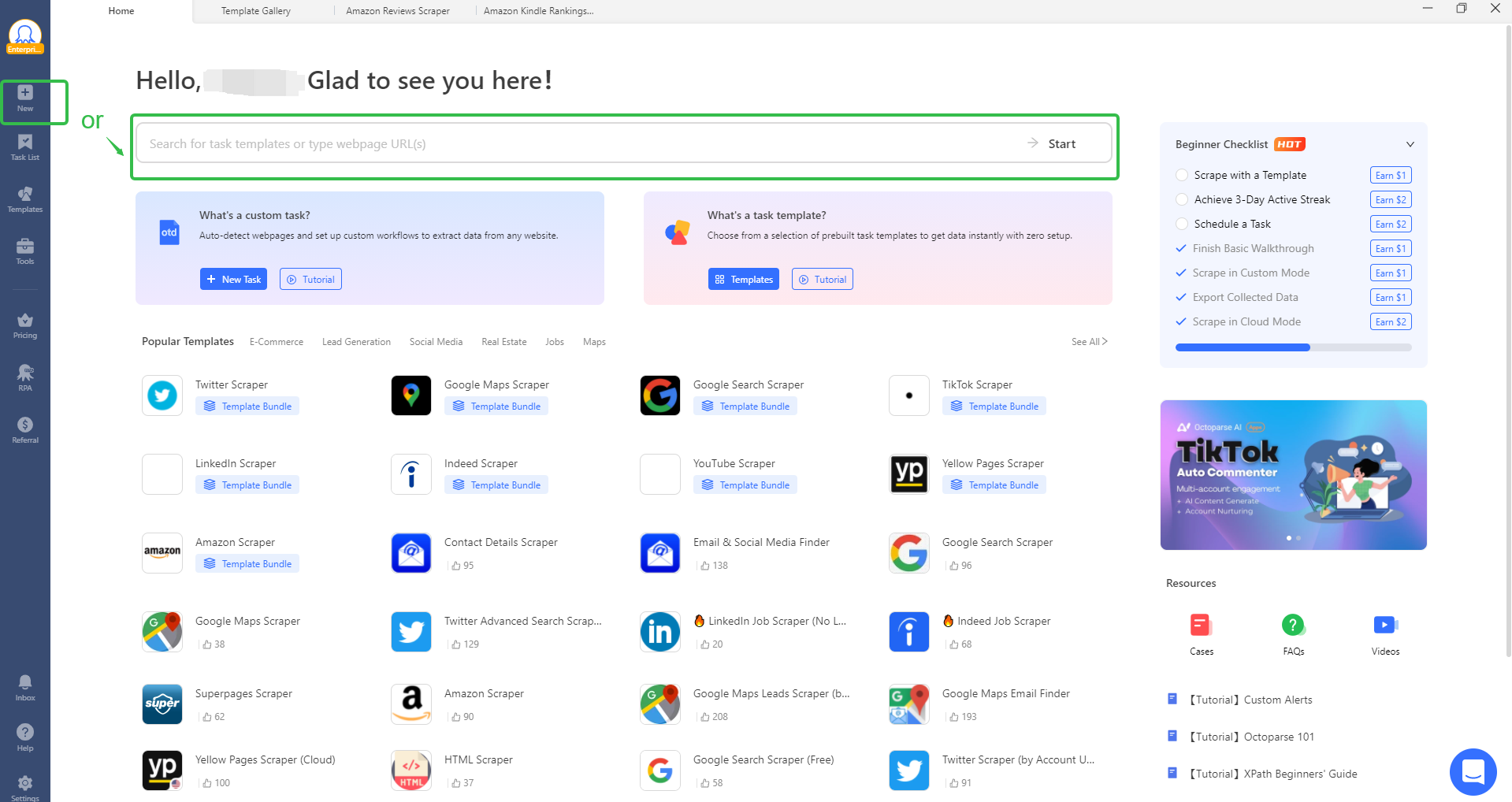

Step 2: Create Your Scraping Task

Option A: Use a Pre-built Template

- Open Octoparse and click “Templates” in the left sidebar

- Search for your target website (e.g., “Amazon,” “eBay”)

- Select the relevant template (e.g., “Amazon Reviews Scraper”)

- Input your search parameters (e.g., ASIN, keywords) or URLs

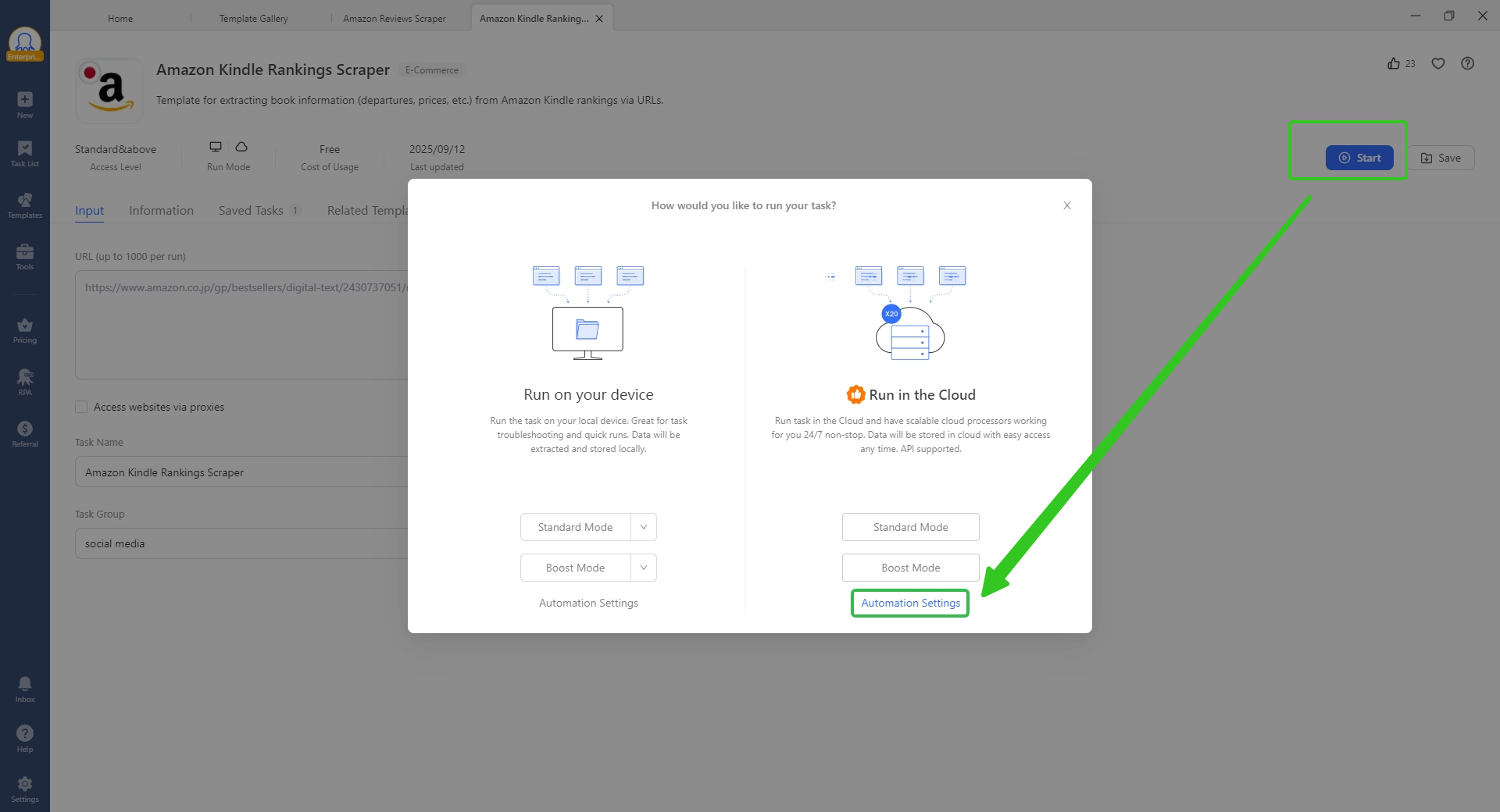

- Some templates allow you to configure the cloud service IP. Here are the steps to follow:

- Click “Start” after you open the target template and then click “Automation Settings” under the “Run in the Cloud” configuration.

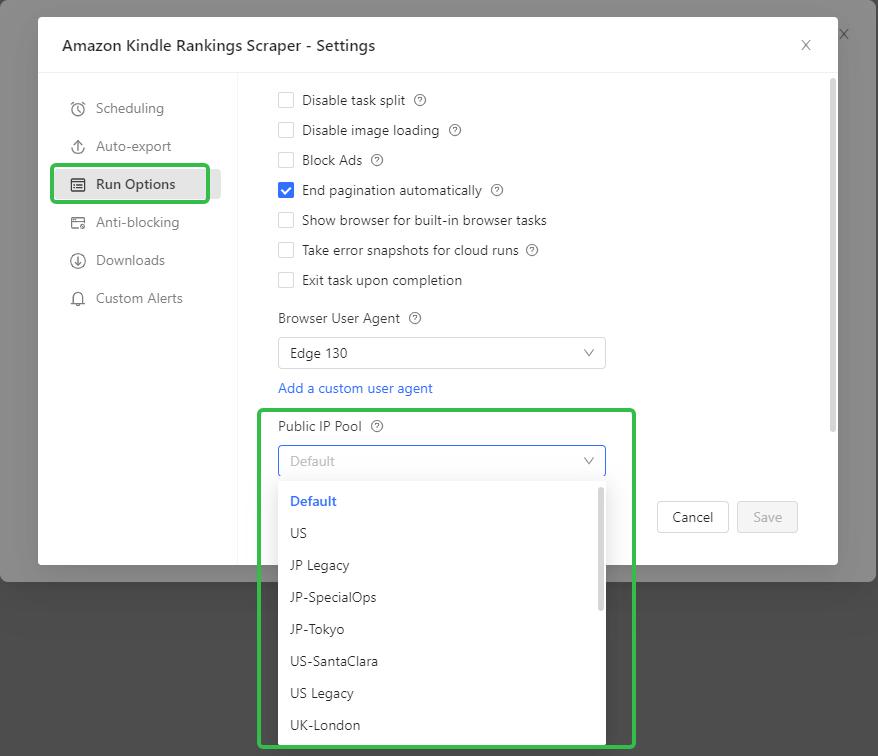

- Click “Run Options” and then select a country from the public IP pools. Don’t forget to save all the settings.

📑Note: The settings in this step only take effect when you run your tasks in the cloud. Some templates can only run on certain IPs. In those cases, you’ll be prompted to switch IPs before running.

Option B: Create a Custom Task (for any website)

- Click “New Task” in Octoparse and enter your target website URL. Alternatively, enter it directly in the input field on the homepage.

- Click “Start” to load the page in the built-in browser

- Use the auto-detect feature or manually click elements you want to scrape

- Octoparse will generate an extraction workflow

Step 3: Configure GEO-targeted Proxies

This is where the magic happens. Here’s how to set up proxies for your task:

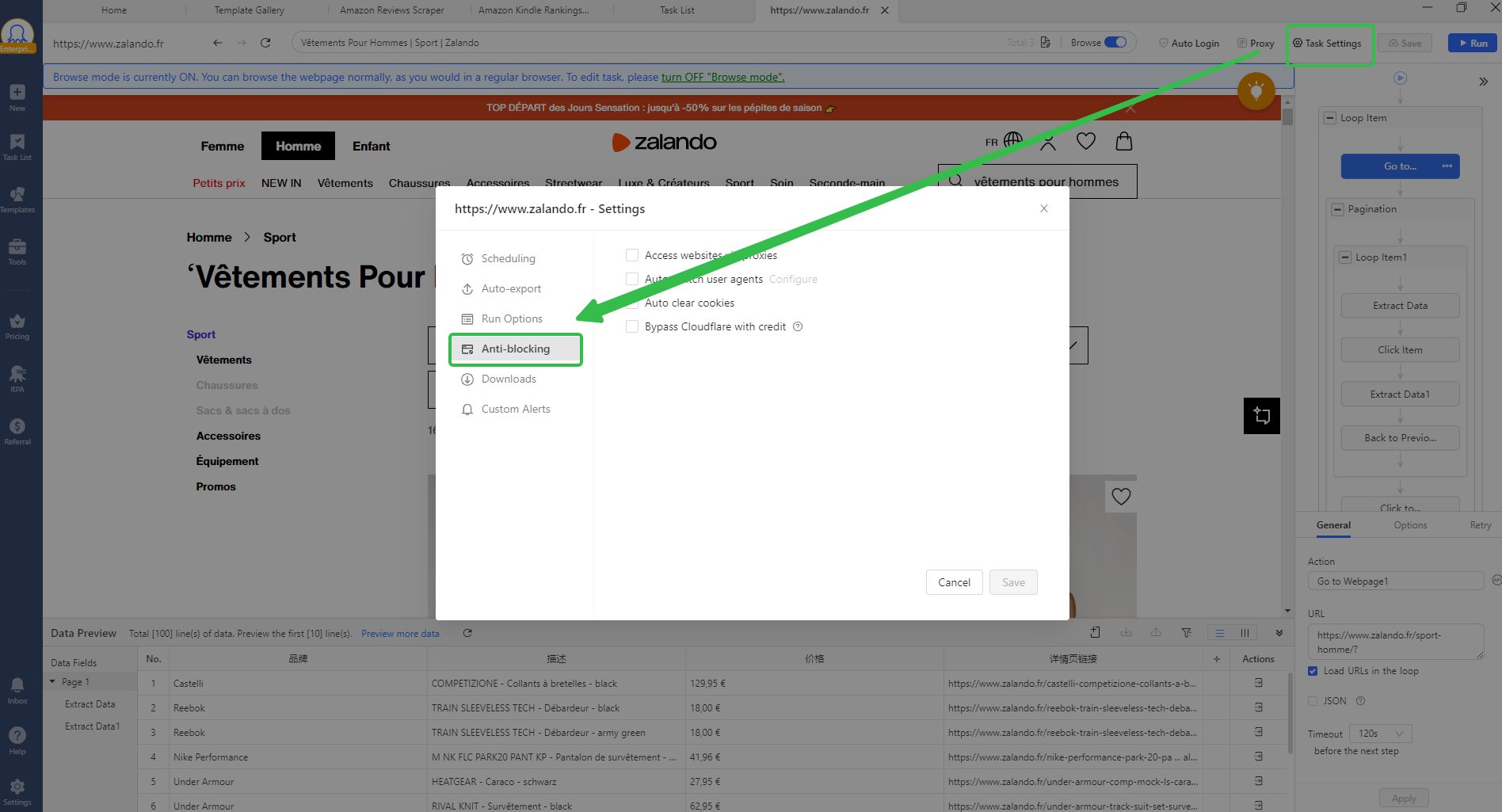

- Open Task Settings: In your task editor, click the “Task Settings” or gear icon

- Navigate to Anti-blocking: Find the “Anti-blocking” or “IP Proxy” section

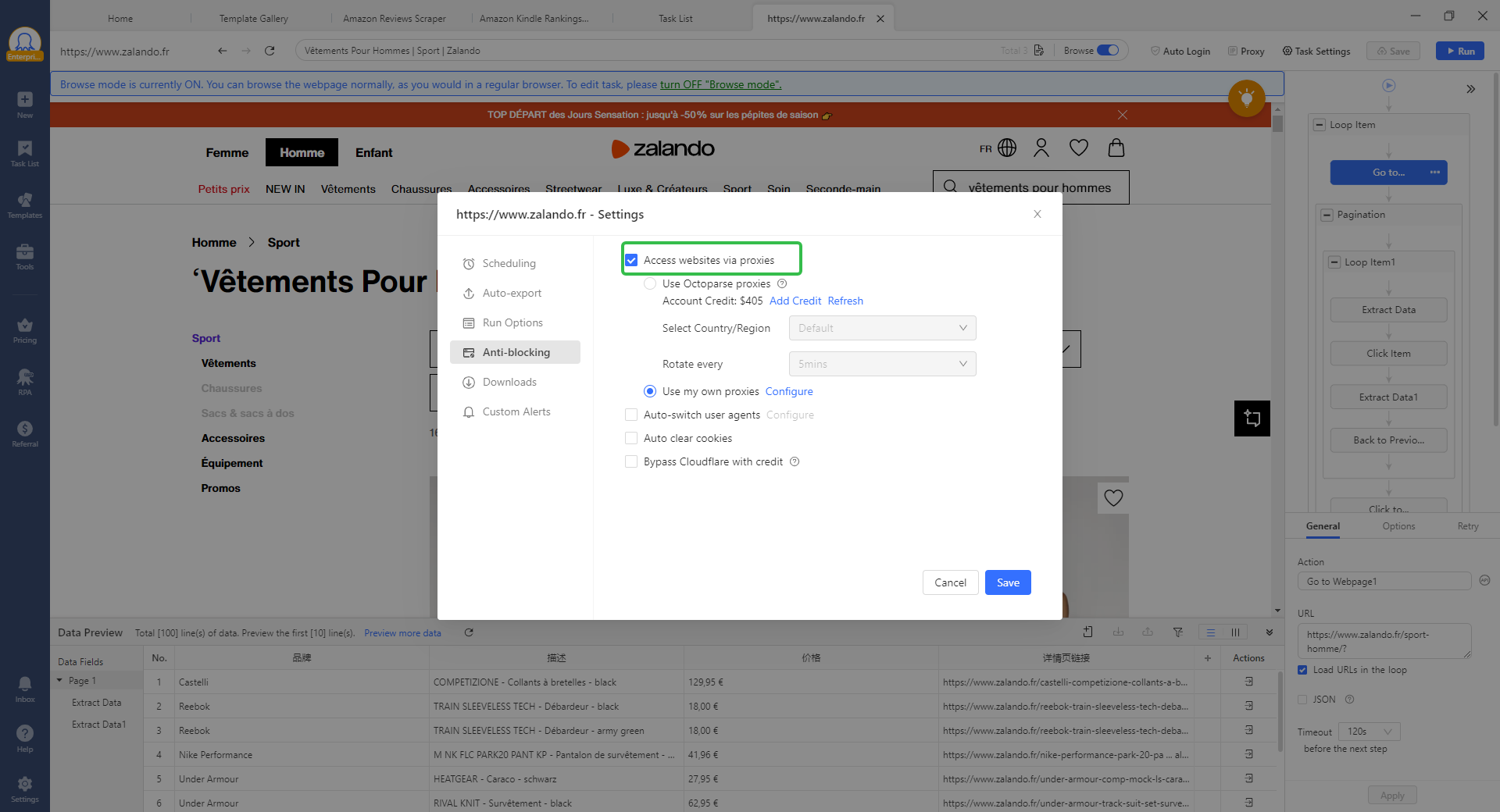

- Enable IP Proxy: Tick “Access websites via proxies”

- Choose Proxy Type:

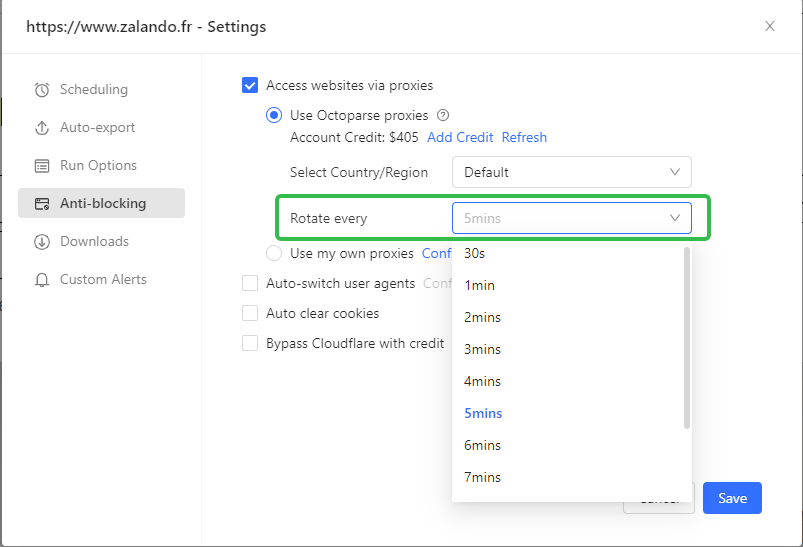

- Octoparse Proxies: Select “Use Octoparse Proxies” for built-in residential IPs and choose your desired country or region from the dropdown menu. You can also set the rotation interval.

- Your own Proxies: If you have third-party proxies, select “Use my own proxies” and configure them

Step 4: Test Your Configuration

Before running a full scrape, always test:

- Run Local Test: Click “Run” to execute the task locally in Octoparse

- Verify Location Detection: Check if the scraped data reflects the target region (prices in correct currency, region-specific content, etc.)

- Check for Blocks: Monitor the execution log for any proxy errors or blocked requests

- Validate Data Quality: Ensure extracted data is complete and accurate

📑Pro tip: Test scraping a few pages first to make sure everything works before scaling up to thousands of pages.

Step 5: Execute Region-Specific Scraping

Once testing confirms your setup works correctly:

For Single Region Scraping:

- Configure your task with the target region’s proxy settings

- Click “Run in Cloud” to execute using Octoparse’s cloud infrastructure

- Monitor progress in the task dashboard

- Export results when complete

For Multi-Region Scraping: You’ll need to create separate task instances for each region:

- Duplicate your base task (right-click task → “Duplicate”)

- Rename each instance with the region (e.g., “Product Prices – US”, “Product Prices – UK”)

- Configure each task with the appropriate regional proxy settings

- Run all tasks simultaneously or schedule them at staggered times

- Export and compare results across regions

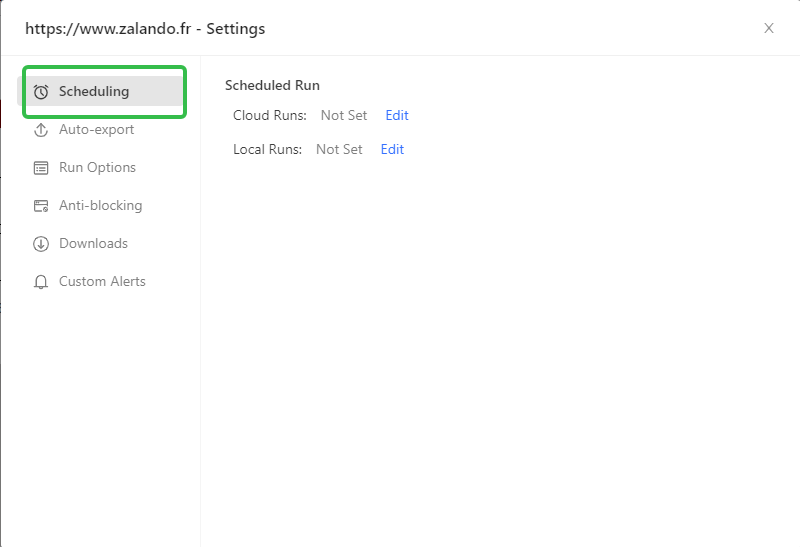

Scheduling Option: If you need regular data updates, use Octoparse’s scheduling feature to automatically run your GEO-targeted tasks daily, weekly, or at custom intervals.

Step 6: Export and Analyze Your Data

After scraping completes:

- Review Extracted Data: Check the data preview to confirm quality

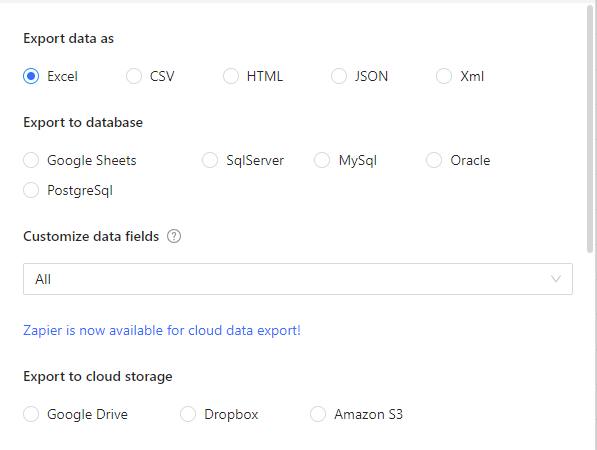

- Export Format: Choose your preferred format (Excel, CSV, JSON, database, HTML, XML, and more)
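Once you have one export per region, the cross-region comparison can be scripted. The sketch below is only an illustration: the column names `product` and `price_usd` are assumptions about how you named your fields, the sample rows are invented, and prices are assumed to be already converted to a common currency.

```python
import csv
import io


def load_prices(csv_text, price_column="price_usd"):
    """Map product name -> price from one region's CSV export."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["product"]: float(row[price_column]) for row in reader}


def price_spread(prices_by_region):
    """For products found in every region, return the gap between the
    highest and lowest regional price as a percentage of the lowest."""
    common = set.intersection(*(set(p) for p in prices_by_region.values()))
    spread = {}
    for product in sorted(common):
        values = [prices[product] for prices in prices_by_region.values()]
        spread[product] = round((max(values) - min(values)) / min(values) * 100, 1)
    return spread


# Invented sample data standing in for two regional exports.
us_csv = "product,price_usd\nLaptop A,1000\nLaptop B,800\n"
de_csv = "product,price_usd\nLaptop A,1300\nLaptop B,820\n"

regions = {"US": load_prices(us_csv), "DE": load_prices(de_csv)}
print(price_spread(regions))  # {'Laptop A': 30.0, 'Laptop B': 2.5}
```

In practice you would read the exported files from disk with `open(...)` instead of using inline strings.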

How to Choose the Right GEO-targeted Proxy

If you’re using custom proxies with Octoparse or any other scraping tool, selecting the right provider is crucial. Here’s what matters most.

Key Selection Criteria

- Proxy Type: Match the proxy to your target—residential for high-security sites (e-commerce, social media), datacenter for public data and large-scale projects.

- Geographic Coverage: Verify actual IP counts in your target locations, not just claimed country coverage. Ask providers: “How many IPs do you have in [specific city/country]?” City-level targeting requires checking if they have substantial IP pools in your exact target cities.

- Performance: Look for sub-2-second response times (residential) or sub-1-second (datacenter), 99%+ uptime, and test real-world performance on your specific targets before committing.

- Rotation Control: Choose rotating proxies to avoid rate limits, or sticky sessions (1-30 minutes) for logged-in scraping and shopping carts. Ensure the provider offers the session control you need.

- Pricing: Residential proxies typically cost $3-15 per GB, datacenter $1-5 per IP monthly. Calculate your expected usage—large pages and images make bandwidth-based pricing expensive fast.

- Reputation: Use providers with ethical IP sourcing (user consent), no-log policies, transparent terms, and responsive support. Avoid vague sourcing claims or “too good to be true” pricing.
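To see how quickly bandwidth-based pricing adds up, here is a back-of-the-envelope estimate. The page count and average page size are illustrative assumptions, and the two prices are the low and high ends of the residential per-GB range quoted above.

```python
def estimate_bandwidth_cost(pages, avg_page_mb, price_per_gb):
    """Estimated spend in dollars, taking 1 GB = 1,000 MB."""
    total_gb = pages * avg_page_mb / 1000
    return total_gb * price_per_gb


# Illustrative job: 100,000 pages at roughly 2 MB each (200 GB in total).
for price in (3, 15):
    print(price, estimate_bandwidth_cost(100_000, 2, price))
# 3 600.0
# 15 3000.0
```

Blocking images or trimming page weight lowers `avg_page_mb` and cuts the bill proportionally.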

Recommended Providers by Use Case

- For Beginners: Smartproxy (user-friendly), Octoparse built-in proxies (zero setup), ScraperAPI (automated handling)

- For E-commerce & High-Protection Sites: Bright Data (largest network, premium pricing), Smartproxy (quality-cost balance), Oxylabs (enterprise reliability)

- For Budget Large-Scale Scraping: Webshare, Proxy-Cheap, Storm Proxies

- For Social Media: Bright Data, Smartproxy, NetNut (non-P2P residential)

Before You Buy

Ask potential providers:

- Actual IP count in your target locations

- Average response time for your target region

- Sticky session duration options

- Success rates on major e-commerce sites

- Trial or money-back guarantee availability

- How they source residential IPs

Reputable providers are upfront and honest. If you get vague answers, that’s a warning sign. For most projects, it’s best to start with Octoparse’s built-in proxies—they’re residential, need no setup, and save you from having to pay for extra subscriptions.

Only consider outside providers if you really need specific targeting or more control. And remember: a $10 proxy that actually works is way better than a $3 proxy that keeps getting blocked.

Conclusion

Geo-targeted proxies are the key to unlocking accurate, localized insights on the web. Whether you’re monitoring regional pricing, tracking global trends, or analyzing market data, they help you see what real users in each region actually experience.

With Octoparse, you can integrate GEO-targeted proxies into your scraping workflow and scrape data by country or region, with no coding or complex setup required. Try Octoparse’s built-in proxy features today to start scraping region-specific data safely, efficiently, and at scale.


FAQs about GEO-targeted Proxies

- How do I choose proxy locations for accurate scraping?

Pick proxy IPs that closely match where your target content comes from — ideally, the same country or city. Residential IPs are preferable, because they tend to be trusted more by websites and are less likely to get blocked. Look for providers that let you filter by city, region, or ZIP code.

Make sure you have a large pool of proxies so you can rotate IPs, reducing risk of detection. Also, study how the website delivers localized content (through IP, headers, regional settings) to ensure your proxy matches those signals.

Finally, consider trade-offs: a very local residential proxy may cost more or be slower, so balance accuracy with performance and cost.

- Are there any legal and privacy risks of using GEO-targeted proxies?

Yes — using proxies brings both legal and privacy risks, depending on how and where you use them.

- Legal risks include violating a website’s terms of service, infringing copyright or licensing rules if you access content meant only for certain regions, and running afoul of local or international laws around data protection. Some jurisdictions may penalize bypassing GEO-restrictions or automated scraping of subscription content.

- Privacy risks revolve around what the proxy provider does: some proxies log traffic, intercept or modify data, or have weak security. If you’re sending anything sensitive through a proxy (login info, personal data, etc.), it can be compromised.

To mitigate these risks, use reputable proxy providers, read their policies, avoid collecting sensitive personal data unnecessarily, respect legal requirements (especially privacy laws like GDPR), and always check a target site’s policies before scraping.

- How does the distance between the proxy and the target server affect speed?

The farther the proxy is from the target server (geographically or in network terms), the slower each request tends to be, because latency increases. That means longer wait times for responses, reduced throughput (fewer requests per second), more frequent timeouts, and overall slower scraping jobs.

To get better speed, choose proxies located close to the target server. Also consider network quality: good bandwidth, low packet loss, and stable routing matter just as much as geographic proximity. Sometimes a slightly more expensive proxy closer in region will save you time and errors in the long run.
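A quick estimate shows how per-request latency compounds over a whole job. The latencies below (0.3 s for a nearby proxy, 1.2 s for a distant one) and the concurrency of 10 are assumed figures for illustration, not measurements.

```python
def estimate_job_minutes(n_requests, latency_s, concurrency=1):
    """Rough wall-clock minutes when each worker sends requests back to back."""
    return n_requests * latency_s / concurrency / 60


# Assumed round-trip times through a nearby and a distant proxy.
for label, latency in (("nearby", 0.3), ("distant", 1.2)):
    minutes = estimate_job_minutes(10_000, latency, concurrency=10)
    print(f"{label}: about {minutes:.0f} min")
```

With these assumed numbers, the same 10,000-request job takes about 5 minutes through the nearby proxy and about 20 minutes through the distant one.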

- How can I verify that scraped content matches the local version?

You can do this in a few ways:

- Use a local user or a proxy in the same region, browse manually, then compare what you see with what your scraper retrieves.

- Check for locale indicators like currency, date format, language, or region-specific offers/products/ads. If those match, it’s a good sign.

- Capture screenshots (or page renders) for both the local view and the scraped version and compare visually.

- Automate checks: write tests that look for expected strings (like a local currency symbol, localized address, etc.) or use schema checks. Occasionally do manual spot checks to ensure automated methods remain accurate.
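The automated check can be as simple as searching each scraped page for the markers you expect in that region. This is a minimal sketch: the regular expressions and the region table are illustrative assumptions that you would tune for your own target site.

```python
import re

# Illustrative patterns per region; adjust to what the target site renders.
EXPECTED_MARKERS = {
    "DE": {"currency": r"€|EUR", "lang": r"<html[^>]*\blang=[\"']de"},
    "UK": {"currency": r"£|GBP", "lang": r"<html[^>]*\blang=[\"']en-gb"},
    "US": {"currency": r"\$|USD", "lang": r"<html[^>]*\blang=[\"']en"},
}


def missing_markers(html, region):
    """Return the names of expected locale markers not found in the page."""
    patterns = EXPECTED_MARKERS[region]
    return sorted(
        name
        for name, pattern in patterns.items()
        if not re.search(pattern, html, flags=re.IGNORECASE)
    )


sample = '<html lang="de"><body><span class="price">1.299,00 €</span></body></html>'
print(missing_markers(sample, "DE"))  # []
print(missing_markers(sample, "US"))  # ['currency', 'lang']
```

An empty list means the page looks like the local version; anything else is worth a manual spot check.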

- How do I set up city-level GEO-targeted proxies?

Here’s a practical guide:

- Choose a proxy provider that offers city-level specification (or ZIP/postcode if needed).

- In their dashboard or API, select the target country → state/region → city.

- Get those IPs or proxy credentials and configure them in your scraping tool (e.g. Octoparse or others).

- Verify the proxy’s location using an IP lookup service to confirm it resolves to the correct city.

- Decide how you want sessions to behave (sticky IP for multi-request workflows vs rotating IPs).

- Monitor for performance and blockages; city-level proxies may have more limited availability or higher latency, so be ready to adjust.
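The location check in the steps above can be automated once you have the lookup result as a dictionary. The field names `country_code` and `city` are assumptions, since every IP lookup service names its fields differently, so map them to whatever your service returns.

```python
def location_matches(lookup, country_code, city):
    """Compare a parsed IP-lookup response with the location you asked for.

    `lookup` is a dict parsed from the lookup service's JSON; the keys
    used here are hypothetical and vary by service.
    """
    got_country = str(lookup.get("country_code", "")).upper()
    got_city = str(lookup.get("city", "")).casefold()
    return got_country == country_code.upper() and got_city == city.casefold()


# Invented example response for a proxy that should exit in Berlin.
response = {"ip": "203.0.113.7", "country_code": "DE", "city": "Berlin"}
print(location_matches(response, "de", "berlin"))  # True
print(location_matches(response, "de", "Munich"))  # False
```

IP geolocation databases sometimes disagree with each other at city level, so a mismatch is a prompt to double-check with a second lookup rather than proof that the proxy is wrong.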

- How do I detect when a site uses GEO-location headers?

Detecting geolocation headers helps you understand how sites tailor content based on user region. Here’s how to spot them:

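- Use your browser’s network inspector (DevTools). Refresh the page and look in the Response Headers or Request Headers for names like CF-IPCountry, X-Geo-Country, X-Geo-City, or other similar location fields. Keep in mind that CDNs typically add such headers to the request they forward to the origin server, so they appear in the browser only if the site echoes them back in its response.

- Use cURL from the terminal: curl -I fetches only the response headers, while curl -v prints both the request and response headers.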

- Send requests from proxies in different locations and compare headers. If headers change by region, that’s a sign the site is using geolocation headers.

- Inspect CDN / edge provider settings (if known). Many CDNs support inserting geolocation headers; their documentation often lists these header names.
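If you collect response headers from several proxy locations, the comparison step can be scripted. The sketch below works on plain dictionaries of headers. The keyword list is a rough heuristic of my own and the sample headers are invented.

```python
GEO_HINTS = ("geo", "country", "city", "region")


def find_geo_headers(headers):
    """Return headers whose names suggest location data (heuristic match)."""
    return {
        name: value
        for name, value in headers.items()
        if any(hint in name.lower() for hint in GEO_HINTS)
    }


def headers_that_differ(first, second):
    """Return {header-name: (first value, second value)} where values differ."""
    a = {k.lower(): v for k, v in first.items()}
    b = {k.lower(): v for k, v in second.items()}
    return {
        name: (a.get(name), b.get(name))
        for name in sorted(a.keys() | b.keys())
        if a.get(name) != b.get(name)
    }


# Invented headers, as if captured through a US proxy and a German proxy.
from_us = {"Content-Type": "text/html", "X-Geo-Country": "US"}
from_de = {"Content-Type": "text/html", "X-Geo-Country": "DE"}

print(find_geo_headers(from_us))               # {'X-Geo-Country': 'US'}
print(headers_that_differ(from_us, from_de))   # {'x-geo-country': ('US', 'DE')}
```

Headers such as Date or Set-Cookie also differ between any two requests, so treat the diff as a list of candidates and not as proof of geo-targeting.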