Few buzzwords in commerce today are bigger than “big data.” Hardly any business sector is untouched by data analysis, and many investors have found ways to use big data to reduce risk in budgeting.

Analyzing millions of data points helps enterprises strengthen their core competitiveness.

Make use of your resources and think about your objectives

A reliable budget should draw on large, varied volumes of scraped data that cover the full range of an enterprise’s expenses. An enterprise needs to consider its capacity and resources to make a sound budget plan. Depending on the objectives it wants to reach, the budget may cover items such as:

- Staff salaries & training

- Advertising & marketing operations

- Lease & rental

- Office supplies & maintenance

- Administrative costs, etc.
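To make the idea of a data-driven budget a little more concrete, here is a minimal Python sketch that totals expense records by category and reports each category’s share of the whole. The records and amounts are made-up placeholders, not real figures; in practice they would come from your own ledgers or collected data, and `budget_by_category` is a hypothetical helper.

```python
from collections import defaultdict

# Made-up (category, amount) records for illustration only.
EXPENSES = [
    ("Staff salaries & training", 42000.0),
    ("Advertising & marketing operations", 8500.0),
    ("Lease & rental", 12000.0),
    ("Office supplies & maintenance", 1300.0),
    ("Staff salaries & training", 3500.0),
    ("Administrative costs", 2200.0),
]


def budget_by_category(records):
    """Sum amounts per category; return (category, total, share) rows, largest first."""
    totals = defaultdict(float)
    for category, amount in records:
        totals[category] += amount
    if not totals:
        return []
    grand_total = sum(totals.values())
    rows = [(cat, total, total / grand_total) for cat, total in totals.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    for category, total, share in budget_by_category(EXPENSES):
        print(f"{category:36s} {total:>10.2f} {share:6.1%}")
```

Keeping the share column next to the totals makes it easy to see which cost lines dominate before deciding where to cut or invest.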

A budget plan built by integrating many types of data and analyzing them in depth broadens an enterprise’s view, helping it make better decisions not only about financial KPIs but also about marketing strategy, cost control, funding operations, and more. Once these factors have been thoroughly considered, a budget plan grounded in big data is more convincing and more multi-dimensional.

Take advantage of data services and reduce your costs

In most cases, the first step into big data analysis is collecting large amounts of information. In other words, we need to scrape data (also called “crawling” or “harvesting” data) from web sources.

Businesses have several options for scraping data. First, you can write a custom crawler in Python or R. For example, Scrapy, a crawling framework written in Python, lets users extract structured items from web pages. This approach is straightforward for most IT companies but much harder for traditional businesses. To join the big data revolution, some businesses therefore invest in data scraping tools or services instead. This adds to the total cost, since many data service providers charge relatively high prices for enterprise-level scraping projects.
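Scrapy handles much of the crawling machinery for you. As a dependency-free stand-in (not Scrapy itself), the sketch below uses only Python’s standard library to show the core loop such a framework automates: a queue of URLs to visit, a set of URLs already seen, and link extraction from each downloaded page. The names `crawl`, `fetch_url` and `LinkParser` are hypothetical, and a production crawler would also need request delays and robots.txt handling.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch_url(url):
    """Download a page as text (real network call)."""
    with urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def crawl(start_url, fetch=fetch_url, max_pages=20):
    """Breadth-first crawl limited to the start URL's host; returns {url: html}."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    pages = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        try:
            html = fetch(url)
        except OSError:
            continue  # skip pages that fail to download
        pages[url] = html
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            link = urljoin(url, href).split("#")[0]  # absolute URL, fragment dropped
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return pages
```

Because the download function is a parameter, you can pass a stub that serves pages from a dictionary to try the crawler offline before pointing it at a real site.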

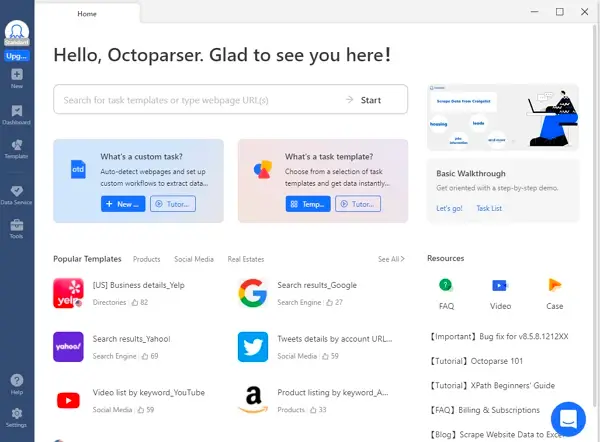

The good news is that enterprises no longer need to spend a fortune to access data. In this post, I’d like to introduce a web scraping tool, “Octoparse”, which provides professional scraping and crawling services for users with different scraping requirements.

Scrape without coding

Yes, it is an automated web scraper that requires no coding skills! I prefer it to many other scrapers because I find its UI much more user-friendly: the functions you need are easy to find without hunting around in confusion.

Octoparse mimics a user’s browsing behavior in its built-in browser and crawls data by following configuration rules. A visual workflow designer lets you set up the extraction process by clicking and dragging, so anyone can review the process visually.

Octoparse also supports proxies, which helps because visiting a site frequently can be seen as intrusive and get your scraper blocked. With the IP proxy servers Octoparse provides, requests rotate automatically across many IP addresses, helping you avoid detection and keeping your own IP from being blocked.
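Octoparse handles this rotation for you. For readers curious how round-robin proxy rotation works in a hand-written crawler, here is a minimal sketch using the standard `urllib.request.ProxyHandler`. The proxy addresses are placeholders from the 203.0.113.0/24 documentation-only range, and `RotatingOpener` is a hypothetical helper, not part of Octoparse.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints (203.0.113.0/24 is reserved for documentation).
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class RotatingOpener:
    """Hand out a urllib opener bound to the next proxy, in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("need at least one proxy")
        self._cycle = itertools.cycle(proxies)

    def next_opener(self):
        proxy = next(self._cycle)
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)


if __name__ == "__main__":
    rotator = RotatingOpener(PROXIES)
    for _ in range(4):
        proxy, opener = rotator.next_opener()
        # opener.open(url, timeout=10) would send the request through `proxy`.
        print("next request goes through", proxy)
```

Round-robin spreads requests evenly; real rotation services may also drop proxies that start failing, which this sketch does not attempt.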

Take news24 as an example. Say you want to follow the latest budget news to shape a better budgeting strategy. As the figure below shows, we can extract the title, publication time, and content of each article, along with any other data fields we need.
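Extracting those three fields by hand might look like the sketch below, built on Python’s standard `html.parser`. It assumes the title sits in an `<h1>`, the publication time in the `datetime` attribute of a `<time>` tag, and the body text in `<p>` tags. That markup is an assumption, not news24’s actual page structure, which you would need to inspect first; `ArticleParser` and `extract_article` are hypothetical names.

```python
from html.parser import HTMLParser


class ArticleParser(HTMLParser):
    """Pull the title (<h1>), publish time (<time datetime>) and paragraphs (<p>)."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.published = None
        self.paragraphs = []
        self._current = None  # field that incoming text belongs to

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self._current = "title"
        elif tag == "p":
            self._current = "p"
            self.paragraphs.append("")
        elif tag == "time":
            self.published = dict(attrs).get("datetime")

    def handle_endtag(self, tag):
        if tag in ("h1", "p"):
            self._current = None

    def handle_data(self, data):
        # Text inside nested tags (e.g. <b> within <p>) still lands in the open field.
        if self._current == "title":
            self.title += data
        elif self._current == "p":
            self.paragraphs[-1] += data


def extract_article(html):
    """Return one record with title, published and content fields."""
    parser = ArticleParser()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title.strip(),
        "published": parser.published,
        "content": "\n".join(p.strip() for p in parser.paragraphs if p.strip()),
    }
```

Each call returns one dictionary per article, which maps directly onto the rows of an exported table.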

Configuring a task takes only four steps. After that, you can either run the extraction on your own machine or set the task to run in the cloud.

The extracted data looks like this. Octoparse can scrape hundreds of records or more within minutes. The extracted data can be exported to formats such as CSV, Excel, HTML, and TXT, and an API is also provided so users can export data into their own databases.
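If you post-process records yourself rather than through Octoparse’s export options or API, Python’s standard `csv` and `sqlite3` modules cover two common targets. This sketch assumes records with the title, published-time and content fields mentioned earlier; the `articles` table and the `to_csv`/`to_sqlite` helpers are hypothetical.

```python
import csv
import io
import sqlite3


def to_csv(rows, fieldnames):
    """Serialize a list of dicts to CSV text (None becomes an empty cell)."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def to_sqlite(rows, conn):
    """Insert dict records into an `articles` table, creating it if needed."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS articles (title TEXT, published TEXT, content TEXT)"
    )
    conn.executemany(
        "INSERT INTO articles (title, published, content) "
        "VALUES (:title, :published, :content)",
        rows,
    )
    conn.commit()


if __name__ == "__main__":
    records = [{"title": "Example", "published": None, "content": "Body text"}]
    print(to_csv(records, ["title", "published", "content"]))
    with sqlite3.connect(":memory:") as conn:
        to_sqlite(records, conn)
```

Named placeholders (`:title` and so on) let `executemany` read values straight from each dictionary, and they keep scraped text from being spliced into the SQL string.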

Note that Octoparse’s Cloud Data Service is available only in paid plans. The Cloud Extraction feature runs your tasks in the cloud, so you avoid network interruptions and long waits. In most cases, cloud extraction is much faster than Local Extraction because six cloud servers run your tasks simultaneously. With cloud extraction, all you need to do is set up a task, pick a good time for it to run, and you’re done!
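The speedup from several servers working at once comes from splitting one job into parts that run in parallel. The sketch below imitates that idea locally with a pool of six threads, matching the six servers mentioned above; it illustrates the principle and is not how Octoparse’s cloud actually schedules tasks. `scrape_one` is any function you supply that scrapes a single URL, and the helper names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, n):
    """Deal items round-robin into n roughly equal chunks."""
    return [items[i::n] for i in range(n)]


def run_in_parallel(urls, scrape_one, workers=6):
    """Scrape each chunk of URLs on its own worker thread.

    Results come back grouped by chunk, not in the original URL order.
    """
    chunks = split_into_chunks(urls, workers)

    def run_chunk(chunk):
        return [scrape_one(url) for url in chunk]

    results = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for chunk_result in pool.map(run_chunk, chunks):
            results.extend(chunk_result)
    return results
```

With equal-cost pages and an ideal split, 14 pages need about ceil(14/6) = 3 page-times instead of 14; real gains depend on network latency and on how fast the target site lets you request pages.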