Big Data Makes Better Business Decisions

Big data refers to a data set that cannot be crawled, managed, processed, and parsed within a reasonable period of time by conventional software tools. Big data technology is used to deal with big data: to crawl, manage, process, and parse huge volumes of raw data and quickly extract valuable information from a wide variety of data types. Technologies for big data include massively parallel processing (MPP) databases, data-mining grids, distributed file systems, distributed databases, cloud computing platforms, the Internet, and scalable storage systems.
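MPP systems spread a workload across many nodes that each process their own slice of the data before the partial results are combined. The toy Python sketch below illustrates only that split, process, and merge pattern on a single machine, with threads standing in for separate nodes. It is not an MPP database, and the function names (`partition`, `count_words`, `parallel_word_count`) are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def partition(lines: list[str], n_parts: int) -> list[list[str]]:
    """Split the data set into roughly equal partitions, one per worker."""
    return [lines[i::n_parts] for i in range(n_parts)]


def count_words(chunk: list[str]) -> Counter:
    """Map step: count the words in one partition of the data."""
    counts = Counter()
    for line in chunk:
        counts.update(line.lower().split())
    return counts


def parallel_word_count(lines: list[str], n_workers: int = 4) -> Counter:
    """Process each partition concurrently, then merge the partial results."""
    total = Counter()
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        for partial in pool.map(count_words, partition(lines, n_workers)):
            total.update(partial)  # reduce step: merge partial counts
    return total


if __name__ == "__main__":
    data = [
        "big data needs new tools",
        "Big data drives decisions",
        "new tools for data",
    ]
    print(parallel_word_count(data, n_workers=2).most_common(3))
```

In a real cluster each partition would live on a different machine and only the small partial counts would travel over the network, which is what lets this pattern scale to data sets too large for one computer.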

The idea that raw big data can be turned into valuable information for better business decisions is encouraging. Because the volume of data is growing so rapidly, companies have more and more opportunities to gain deeper insights from the data they extract and to find new ways to widen profit margins.

More and more businesses now rely on data-driven decision-making to improve their operations, provided they use the right big data extraction tools and find the right ways to analyze the data with big data technologies.

Data Volume Affects Good Decision-making

Usually, companies have already collected the data they need, but in most cases they simply aren't sure whether the results of analyzing this "big data" are reliable enough to support decision-making. After all, making a good decision is hard yet important, and even a good decision does not always lead to a good outcome.

This problem often stems from the sheer volume of big data that must be extracted before the data can be fully exploited.

It's common for big companies to set up an IT development department or hire big data crawler specialists to extract big data from the internet. However, not every company can afford this. Many small companies, such as startups, don't have big budgets for big data extraction.

Plenty of programming languages and resources are available on the internet, so it is convenient for a developer to write a web crawler that extracts big data from the web. Non-technical users can instead use big data extraction tools to collect the web data they need. However, many such tools seem to be designed for web scraping experts rather than for people with no coding experience, and few tools fully meet these users' needs, either because of poor cost-effectiveness or because of the tool's own shortcomings.
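For readers taking the developer route, here is a minimal sketch of a breadth-first web crawler built only on Python's standard library. The function names, the same-host restriction, and the `max_pages` limit are assumptions made for illustration; a production crawler would also need robots.txt checks, politeness delays, retries, and error handling.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collect the href value of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch(url: str) -> str:
    """Download one page as text (no retries, robots.txt, or rate limiting)."""
    with urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def crawl(start_url: str, max_pages: int = 20,
          fetch_page: Callable[[str], str] = fetch) -> dict[str, str]:
    """Breadth-first crawl restricted to the start URL's host.

    Returns a mapping of URL -> raw HTML for every page visited.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    pages: dict[str, str] = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        html = fetch_page(url)
        pages[url] = html
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments before de-duplicating.
            link, _ = urldefrag(urljoin(url, href))
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return pages
```

Because the page fetcher is a parameter, you can pass a dictionary lookup such as `site.__getitem__` to test the crawl logic offline, then switch to the default `fetch` for real pages.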

Several Tools For Big Data Extraction

To help you meet your business's big data extraction needs and support good decision-making, here are some big data extraction tools that are especially suitable for tech newbies.

1. Octoparse

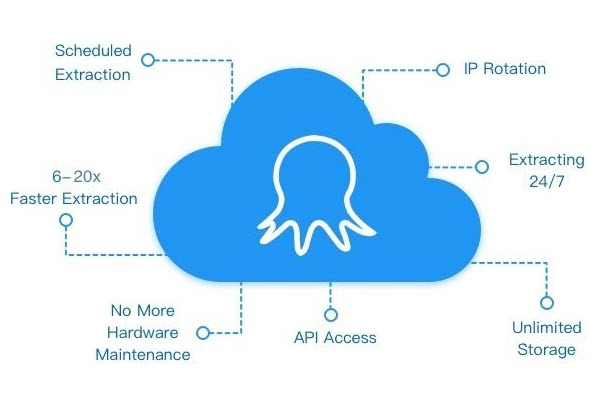

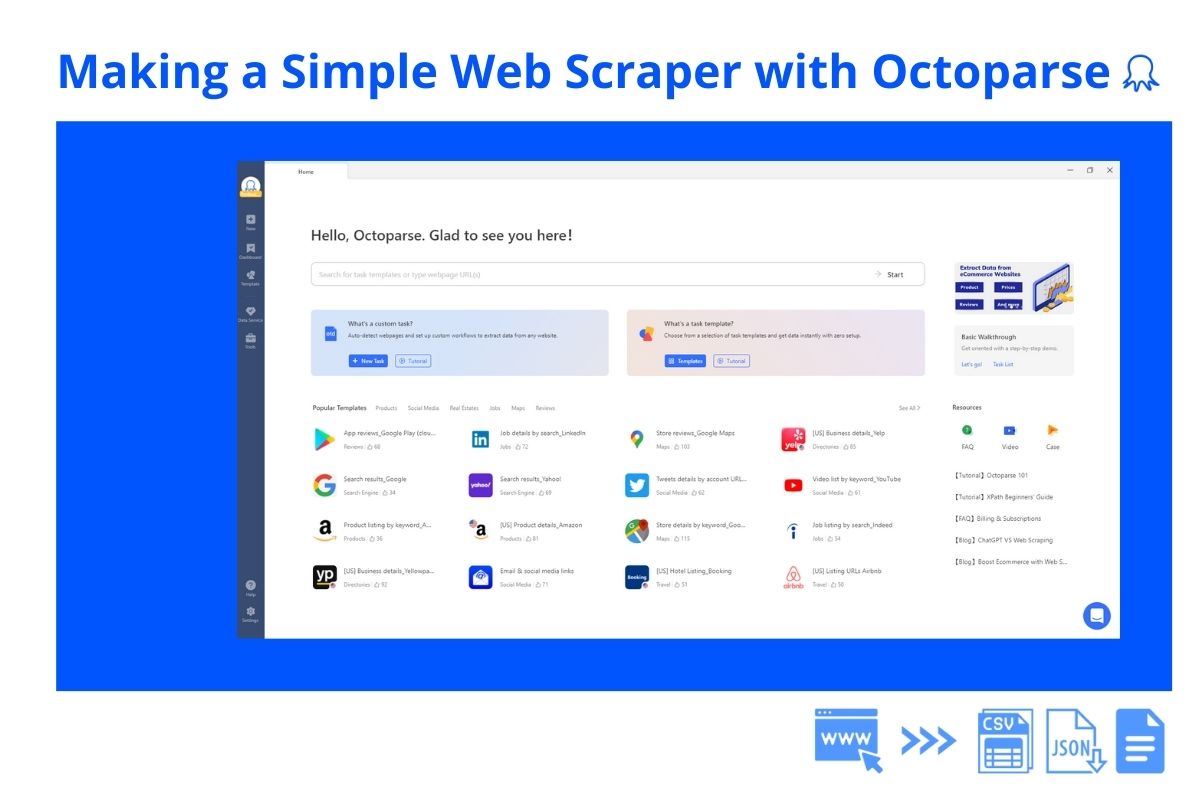

Octoparse is modern, visual big data extraction freeware for Windows. Both experienced and inexperienced users should find it easy to bulk-extract unstructured or semi-structured information from websites and transform it into structured data. Its Smart mode extracts data from web pages automatically within a very short time, and its point-and-click interface makes it easier and faster for newbies to get data from the web. Real-time data is available through the Octoparse API. Its cloud service is arguably the best choice for big data extraction because of its IP rotation and abundant cloud servers.
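To make "transforming semi-structured web data into structured data" concrete, the Python sketch below does by hand what visual tools automate: it walks repeated listing blocks in a page and writes them out as CSV rows. This is not how Octoparse or any other tool works internally, and the markup it expects (`div.item` with `span.name` and `span.price`) is a hypothetical example page.

```python
import csv
import io
from html.parser import HTMLParser
from typing import Optional


class ListingParser(HTMLParser):
    """Turn repeated listing blocks into a list of dicts.

    Assumes (hypothetically) that each record is a <div class="item">
    containing <span class="name"> and <span class="price"> elements.
    """

    FIELDS = ("name", "price")

    def __init__(self) -> None:
        super().__init__()
        self.rows: list[dict[str, str]] = []
        self._field: Optional[str] = None  # field whose text is being read

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "div" and cls == "item":
            # A new record starts: add an empty row with every field present.
            self.rows.append({f: "" for f in self.FIELDS})
        elif tag == "span" and cls in self.FIELDS and self.rows:
            self._field = cls

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

    def handle_data(self, data):
        # Only text inside a recognized <span> belongs to the current record.
        if self._field is not None:
            self.rows[-1][self._field] += data.strip()


def listings_to_csv(html: str) -> str:
    """Extract the listing records and serialize them as CSV text."""
    parser = ListingParser()
    parser.feed(html)
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=ListingParser.FIELDS)
    writer.writeheader()
    writer.writerows(parser.rows)
    return out.getvalue()
```

The resulting CSV can be opened in a spreadsheet or loaded into a database, which is the structured form most analysis tools expect.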

2. import.io

import.io is a platform that converts semi-structured data in web pages into structured data. It offers real-time data retrieval through JSON REST-based and streaming APIs, integrates with many programming languages and data manipulation tools, and provides a federation platform that allows more than 100 data sources to be queried at the same time.

3. Mozenda

Mozenda offers web data extraction and data scraping tools that make it easy to scrape content from the internet. You can connect to your Mozenda account programmatically through the Mozenda Web Services REST API using standard HTTP requests.

4. WebHarvy

WebHarvy lets you easily extract data from websites to your machine and can handle virtually any website. No programming or scripting knowledge is needed. WebHarvy can help you extract data from product listings and eCommerce websites, yellow pages, real estate listings, social networks, forums, and more. You simply click the data you need, which makes it very easy to use. It can also crawl data from multiple pages of listings, following each link.

5. UiPath

UiPath is fully featured, extensible software for automating any web or desktop application. UiPath Studio Community is free for individual developers, small professional teams, and education and training purposes. UiPath lets organizations build software robots that automate manual, repetitive, rules-based tasks.