Businesses today draw information from many sources, including websites, traditional media, papers, chats, podcasts, and videos. Putting valuable data from these diverse sources to use helps businesses make insightful and profitable decisions. The process of gathering data from different sources is known as data extraction, and the tools used to do it are known as data extraction tools.

Data extraction can be tedious, and an organization that does it by hand will struggle to evaluate the captured data in depth. Data extraction tools were designed to make the process smoother. With them, you can draw well-founded analytical conclusions on a variety of topics.

This article explains how data extraction works, its main types, and its benefits. It then covers 10 data extraction tools worth knowing.

What Is Data Extraction

An introduction to data extraction

Data extraction is the process of collecting information from one or more data sources, either to analyze and visualize it for business insight or to store it in a centralized data warehouse. The sources may hold unstructured, semi-structured, or structured data. In a nutshell, data extraction moves data from one system to another, whether that system is on-premises, cloud-based, or a combination of the two.

A variety of techniques are used to do this, some of them complex and some involving manual input. Unless the data is retrieved purely for archiving, extraction is usually the first phase of an extract, transform, load (ETL) process. In other words, once collected, the data almost always goes through further transformation to make it suitable for later analysis.
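The extract, transform, and load phases can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not how any particular tool works: the sample CSV text, the `orders` table, and the function names are all assumptions made up for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw export standing in for a source system.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,not-a-number
"""

def extract(text):
    """Extract: read raw rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize names, parse amounts, skip rows that fail to parse."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # unusable amount, leave the row out
        clean.append((int(row["order_id"]), row["customer"].strip().lower(), amount))
    return clean

def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

# An in-memory SQLite database stands in for the data warehouse.
conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Keeping the three phases as separate functions means the extraction step can be swapped for another source without touching the transformation or loading logic.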

Types of data extraction

With a basic grasp of how data extraction works, let us look at the extraction strategies commonly used in practice. The two primary categories are logical and physical extraction, each of which divides into two subtypes.

1. Logical Data Extraction

Logical extraction is the most widely used approach. It has two subcategories.

Full Extraction

Full extraction is typically used for the initial load, when all the data is pulled from the source in one pass. Because it captures everything currently available on the source system, there is no need to keep track of what has changed since the previous extraction.

Incremental Extraction

This technique focuses on the delta, that is, only the data that has changed since the last successful extraction. It requires more complex extraction logic that tracks inserts and updates on the source. A common way to do this is to record a timestamp for each change and compare it against the time of the previous extraction.
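The difference between the two logical approaches can be sketched as follows. This is a minimal example, assuming a hypothetical `customers` table with an `updated_at` column in ISO 8601 format. The table, column, and function names are invented for illustration, and real sources may track changes differently.

```python
import sqlite3

# In-memory database standing in for the source system.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Alice", "2024-01-01T09:00:00"), (2, "Bob", "2024-01-02T10:30:00")],
)

def full_extract(conn):
    """Full extraction: pull every row, no change tracking needed."""
    return conn.execute(
        "SELECT id, name, updated_at FROM customers ORDER BY id"
    ).fetchall()

def incremental_extract(conn, last_seen):
    """Incremental extraction: pull only rows changed after the stored timestamp."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_seen,),
    ).fetchall()
    # Advance the stored timestamp to the newest change seen, if any.
    new_mark = rows[-1][2] if rows else last_seen
    return rows, new_mark

# Initial load: take everything and remember the latest timestamp.
initial = full_extract(conn)
watermark = max(row[2] for row in initial)

# The source changes after the initial load.
conn.execute(
    "UPDATE customers SET name = 'Robert', updated_at = '2024-01-03T08:00:00' WHERE id = 2"
)
conn.execute("INSERT INTO customers VALUES (3, 'Carol', '2024-01-03T12:00:00')")

# Later run: only the updated and the new row come back.
delta, watermark = incremental_extract(conn, watermark)
print(delta)
```

Note that a timestamp comparison like this one does not detect deleted rows. Handling deletions needs a different mechanism, which is outside the scope of this sketch.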

2. Physical Data Extraction

Logical extraction can be hard to apply to legacy data storage systems. Data from such systems can only be obtained through physical extraction, which also has two subcategories.

Online Extraction

With this technique, data flows directly from the source to the target data warehouse. It requires a direct connection between the extraction tool and the source system. Alternatively, the tool can connect to an intermediate system, a near-exact copy of the source with better-organized data, rather than to the source itself.

Offline Extraction

In this approach, the data is not read directly from the original source. It is deliberately staged outside the source system first. The staged data either already has a structure or is given one by the extraction routines. Typical staging structures include flat files, dump files, and database transaction logs that are read remotely.
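As a rough sketch of offline extraction through a flat file, the example below first dumps a table to a CSV file and then extracts from that file with no connection to the source. The `inventory` table, the file name, and both function names are assumptions made for the illustration.

```python
import csv
import os
import sqlite3
import tempfile

def dump_to_flat_file(conn, path):
    """Source side: stage the table outside the source as a CSV flat file."""
    cursor = conn.execute("SELECT id, product, qty FROM inventory ORDER BY id")
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow([col[0] for col in cursor.description])  # header row
        writer.writerows(cursor)

def extract_from_flat_file(path):
    """Extraction side: read the staged file and restore the column types."""
    with open(path, newline="") as f:
        return [
            {"id": int(row["id"]), "product": row["product"], "qty": int(row["qty"])}
            for row in csv.DictReader(f)
        ]

# In-memory database standing in for the source system.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE inventory (id INTEGER, product TEXT, qty INTEGER)")
source.executemany(
    "INSERT INTO inventory VALUES (?, ?, ?)",
    [(1, "widget", 40), (2, "gadget", 7)],
)

with tempfile.TemporaryDirectory() as tmp:
    dump_path = os.path.join(tmp, "inventory_dump.csv")
    dump_to_flat_file(source, dump_path)
    source.close()  # the source is no longer needed once the file is staged
    records = extract_from_flat_file(dump_path)

print(records)
```

Because the extraction step reads only the staged file, it can run at any time without adding load to the source system.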

Benefits of using data extraction

Data extraction tools significantly improve the accuracy of data transfer because they run mostly without human intervention, which reduces bias and errors and so raises data quality.

Data extraction tools also largely determine which data is extracted. When pulling from many sources, they identify the precise data needed for the task at hand and leave the rest for later transfers.

Data extraction tools let organizations decide the scale of their data collection. They spare you from paging through sources manually and let you choose how much data is gathered and for what purpose.

10 Best Data Extractors You Should Know

Data extraction tools come in a wide variety. Some are built for particular industries, others focus on the problems advertisers face, such as gathering and monitoring campaign data, and others are general-purpose. Below are some of the best data extraction tools, each with a short description and key features to help you decide which one suits you.

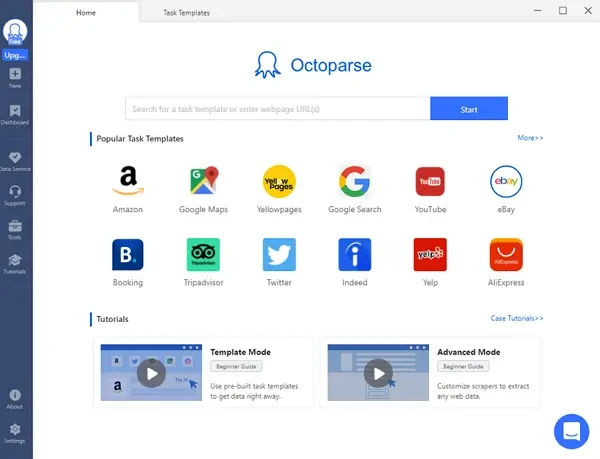

1. Octoparse

Octoparse is a robust web scraper available for both Mac and Windows. Scraping with it is simple and straightforward because it mimics the actions of a human user.

Its built-in project templates make it easy for novice users to start scraping. It also provides free unlimited crawls, along with Regex and XPath tools that reportedly help resolve 80% of data-mismatch issues, even when scraping dynamic pages.

Main Features:

- It is a cloud-based web scraper that allows you to quickly and efficiently retrieve online data without programming.

- You can select from the Free, Standard, Professional, and Enterprise options for different needs.

- It comes with built-in templates for popular websites so that you can finish scraping in a few clicks.

2. Scrape.do

Scrape.do makes web scraping through rotating proxies easy and quick. All of its features are simple to use and customizable. You send parameters such as the URL, header, and body to the Scrape.do API, and it reaches the target website through proxies and returns the raw data you need. The request parameters you supply are passed on to the target URL without modification.

Main Features:

- Scrape.do exposes a single API endpoint.

- It is among the most affordable web scraping solutions available.

- All subscriptions include unlimited bandwidth.

3. ParseHub

ParseHub is a free web scraping application for collecting data from the internet, available as downloadable desktop software. It offers more functionality than most other scrapers, including scraping and downloading files and images and exporting results as CSV and JSON. Basic usage is free, while premium features require a paid plan.

Main Features:

- You can utilize the cloud service to store data automatically.

- Get information from maps and tables.

- Handles pages with infinite scrolling.

4. Diffbot

Diffbot is another tool for extracting data from websites and one of the best content extractors available today. Its Analyze API automatically identifies the type of page and extracts products, articles, discussions, videos, or images.

Main Features:

- Plans start at $299/mo and there is a 14-day free trial period available.

- Visual processing lets it scrape most non-English websites.

- Structured search returns only the results that are relevant.

5. Mozenda

Mozenda is a cloud-based web scraping solution. Using its web console and agent builder, you can run your own agents and view and manage the results. It also lets you publish or export collected data to cloud storage services such as Dropbox, Amazon S3, or Microsoft Azure. Extraction runs on optimized harvesting servers in Mozenda's data centers, which spares the user's local resources and guards against IP address bans.

Main Features:

- You can manage your agents and data collection without manually logging in to the Web Console.

- Free, Professional, Enterprise, and High-Capacity are the 4 packages that Mozenda provides.

6. Docparser

Docparser is one of the best document parsers. It extracts data from PDF files and exports it to Excel, JSON, and other formats, turning data locked in inaccessible formats into usable representations such as Excel sheets. Using anchor keywords, powerful pattern recognition, and zonal OCR technology, Docparser recognizes and extracts data from Word, PDF, and image-based documents.

Main Features:

- There are 5 plans available for Docparser: Free, Starter, Professional, Business, and Enterprise.

- Extract the necessary invoice information, and then download the spreadsheet or integrate it with your accounting system.
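The general idea behind anchor keywords can be shown with a short regular-expression sketch: find a known label in the document text and capture whatever follows it on the same line. This illustrates the concept only and is not Docparser's implementation. The sample invoice text, the `ANCHORS` mapping, and the `extract_fields` function are all hypothetical.

```python
import re

# Hypothetical text, as it might look after OCR or PDF text extraction.
INVOICE_TEXT = """ACME Supplies
Invoice Number: INV-2041
Invoice Date: 2024-03-15
Total Due: $1,284.50
"""

# Field name mapped to the anchor keyword that precedes its value.
ANCHORS = {
    "invoice_number": "Invoice Number:",
    "invoice_date": "Invoice Date:",
    "total_due": "Total Due:",
}

def extract_fields(text, anchors):
    """Return the text that follows each anchor keyword on the same line."""
    fields = {}
    for name, anchor in anchors.items():
        match = re.search(re.escape(anchor) + r"[ \t]*(.+)", text)
        if match:
            fields[name] = match.group(1).strip()
    return fields

fields = extract_fields(INVOICE_TEXT, ANCHORS)
print(fields)
```

Fields whose anchor does not appear in the text are simply left out of the result, so the caller can tell which values were not found.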

7. AvesAPI

AvesAPI is a SERP (search engine results page) API service that developers and agencies can use to scrape structured data from Google Search. With its intelligent dynamic network, it can easily extract results for millions of keywords. That spares you the time-consuming work of checking SERP results by hand and of getting past CAPTCHAs.

Main Features:

- Real-time access to structured data in JSON or HTML.

- You can try the tool for free, and the paid plans are quite affordable.

8. Hevo Data

Hevo Data is a no-code data pipeline that helps you replicate data from your data sources. Without you writing a single line of code, Hevo enriches the data, transforms it into an analysis-ready format, and loads it into the data warehouse of your choice. Its fault-tolerant architecture handles data securely and reliably, with no data loss.

Main Features:

- Intuitive dashboards show statistics for each pipeline and data flow so you can monitor pipeline health.

- It has three plans: Free, Starter, and Business.

9. ScrapingBee

ScrapingBee is another well-known data extraction tool. It renders web pages as a real browser would and manages dozens of headless instances running the latest Chrome version for you. The company's argument is that running headless browsers yourself, as other web scrapers require, wastes time and consumes CPU and RAM.

Main Features:

- It handles general web scraping tasks, such as real estate scraping, price monitoring, and review extraction, without getting blocked.

- You can scrape web pages from search results.

- Plans start at $29/mo.

10. Scrapy

Scrapy is yet another tool on our list of top web data extraction tools. It is an open-source, collaborative framework for extracting data from web pages. It is a web scraping framework for Python programmers who want to build cross-platform web crawlers.

Main Features:

- It is designed to be extensible, so new functionality can be added without modifying the core.

- It is completely free.

Final Thoughts

This article covered some of the best and most popular data extraction tools available today for streamlining the extraction process, along with their key features and pricing. Choosing the right data extraction tool is an important part of the overall data extraction process in any business.