No firm can survive without data. The problem is that we are inundated with it.

Turning data into valuable information is at the core of how businesses keep growing. Business Intelligence (BI) comes into play here, as it converts raw data into actionable insights. How does BI work? I will illustrate with a concrete example, from data extraction to data interpretation.

In the following parts, you can learn what raw data is and how it differs from information. You can also learn how to turn raw data into information in 3 key steps.

What Is Raw Data

Raw data is also called primary data. It is data collected from a source that still needs to be processed before it becomes insightful. In business, data can be anywhere. It could be external: data that flows around the web, like images, Instagram posts, Facebook followers, comments, and competitors’ followers. Or it could be internal: data from a business operation system like a Content Management System (CMS).

Information is processed and organized data that is presented in the form of patterns and trends for better decision-making. I would like to quote Martin Doyle: “Computers need data. Humans need information. Data is a building block. Information gives meaning and context.”

How to Turn Raw Data into Valuable Information

Now that you have a general idea of the start and the end, let’s break down the middle part in detail. We will divide the process of interpreting raw data into 3 big steps.

Step One: Raw Data Extraction

This is the first step, and it makes everything that follows possible. It is a zero-to-one process in which we start laying the building blocks.

Data extraction means retrieving data from multiple sources on the Internet. The whole process takes place in three steps:

1. Identify the source: Before we start extraction, it is necessary to check whether the source is legal to extract and whether the data is of good quality. To do that, you can check the Terms of Service (ToS) for detailed information. For example, we all know that LinkedIn holds tons of value for sales prospecting. LinkedIn knows that too, so it is very strict about any form of scraping on its website.

2. Start extraction: Once you have the sources ready, you can start extraction. There are many ways to extract data. A good method nowadays is web scraping. An automated web scraping tool comes in handy, as it eliminates the need to write scripts on your own or hire developers. It is the most sustainable and approachable solution for businesses with a limited budget yet great demand for data.

3. Best Data Extraction Tool (No Coding)

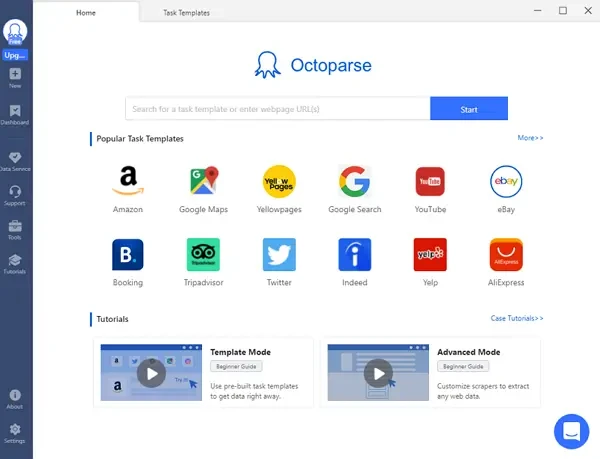

If I had to name a few of the best web scraping tools, Octoparse would stand out as the most intuitive and user-friendly among them.

Its remarkable features, such as file downloads and cloud runs, make extracting web data an easy process and give you a smooth, reliable scraping experience.

Moreover, it offers more than 50 web scraping templates, which are built-in crawlers ready to use without any task configuration. It also has an auto-detecting mode, which is really good for beginners who have no idea about coding. So, we recommend that you try it out first before jumping to the next option too soon.

You can finish the data extraction process for any website in the 3 steps below. You can also find more details on the Octoparse user guide page.

1. Paste the target site link into the Octoparse main panel and click the “Start” button. Or you can search by keyword to find the preset templates.

2. You’ll enter the auto-detection mode by default. Create a workflow here, and modify the data fields you want by dragging or clicking on the workflow/tips on the right panel.

3. Check the workflow and preview the sample data to make sure all the data you want can be extracted. Save and run the workflow to start extracting data. You can download the data in Excel or any other format to your local device.

Here is a sample output. With the Octoparse Twitter Scraping Template, I scraped 5k lines of data, including user IDs, handles, contents, video URLs, and image URLs.
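If you export such a result as CSV, a few lines of Python are enough to load it for a first look. This is only a minimal sketch: the column names and the two sample rows below are made up for illustration and are not the template's actual headers.

```python
import csv
import io

# Hypothetical export. Column names and rows are assumptions for illustration,
# not the real headers produced by the scraping template.
SAMPLE_EXPORT = """user_id,handle,content,video_url,image_url
1001,@snackfan,Love this snack!,,https://example.com/a.jpg
1002,@crunchy,Having it for lunch again,https://example.com/v.mp4,
"""

def load_rows(csv_text):
    """Parse exported CSV text into a list of dicts, one per scraped post."""
    return list(csv.DictReader(io.StringIO(csv_text)))

rows = load_rows(SAMPLE_EXPORT)
print(len(rows), "rows; fields:", list(rows[0].keys()))
```

For a real export you would open the downloaded file instead of using the inline string.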

Once you get the data, you can export it in the desired format or connect it to an analysis platform like Power BI or Tableau via API.

I am not an expert in Power BI, so I borrowed the report from Microsoft to make my point.

The idea is that you can monitor social media by scheduling Twitter extraction every day and connect to a preferred analysis platform via API.

Step Two: Data Analytics

In the analysis stage, it is necessary to check the accuracy of the data, as its quality directly affects the analysis result.
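A basic accuracy check can be sketched as follows: drop rows that miss required fields and rows that are exact duplicates. The field names `user_id` and `content`, the helper `clean_rows`, and the sample rows are all assumptions for illustration; real checks depend on your data.

```python
def clean_rows(rows, required=("user_id", "content")):
    """Drop rows with empty required fields and exact duplicates (first one wins)."""
    seen = set()
    cleaned = []
    for row in rows:
        # Skip rows where any required field is missing or blank.
        if any(not (row.get(field) or "").strip() for field in required):
            continue
        # Use the full row content as the duplicate key.
        key = tuple(sorted(row.items()))
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(row)
    return cleaned

# Made-up sample rows.
raw = [
    {"user_id": "1001", "content": "Great snack"},
    {"user_id": "1001", "content": "Great snack"},  # exact duplicate
    {"user_id": "", "content": "No author"},         # missing user_id
    {"user_id": "1002", "content": "Too salty"},
]
cleaned = clean_rows(raw)
print(f"kept {len(cleaned)} of {len(raw)} rows")  # kept 2 of 4 rows
```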

During this stage, data is delivered to users in various report formats, such as visualizations and dashboards.

Let’s use Power BI to monitor and analyze social media platforms so as to test my marketing strategy, check product quality, and control crises.

This is a sample of how to use Power BI to turn the data into information.

Presented by @myersmiguel

Or you can analyze which posts gain the most likes.
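Outside a dashboard, the same question can be answered with a simple sort. The sketch below assumes each post is a record with a numeric `likes` field; the field names, the helper `top_posts`, and the numbers are hypothetical.

```python
# Made-up posts. "post_id" and "likes" are assumed field names.
posts = [
    {"post_id": "a1", "likes": 120},
    {"post_id": "b2", "likes": 560},
    {"post_id": "c3", "likes": 75},
]

def top_posts(posts, n=1):
    """Return the n posts with the most likes, highest first."""
    return sorted(posts, key=lambda p: p["likes"], reverse=True)[:n]

for post in top_posts(posts, n=2):
    print(post["post_id"], post["likes"])
```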

Okay, now that you have the visual dashboard, how do you analyze it?

In business, information needs to be interpreted in the context of the organization.

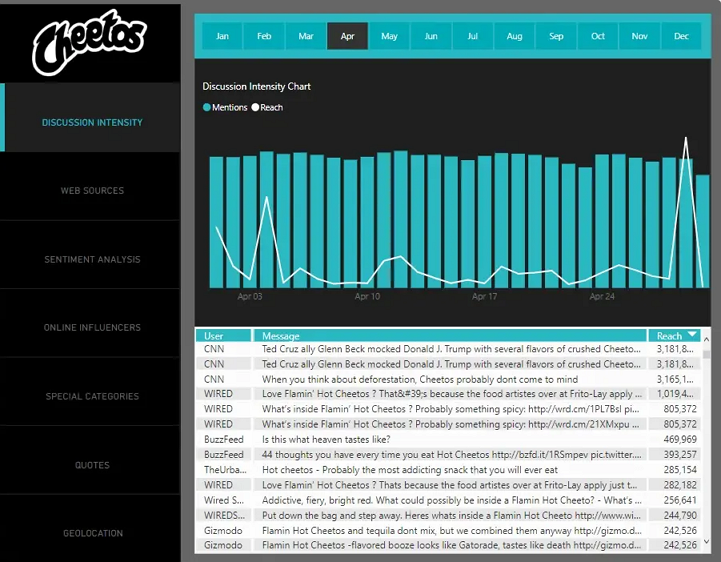

Context is the major component in making insights actionable. Let’s take Cheetos as an example.

You can see on the trend line that Cheetos gained 50k mentions on Twitter in April. It is a huge number, but it doesn’t tell us anything valuable besides the volume of mentions at that moment.

What if I pull the number of mentions from the previous months and compare them with this month’s?

April: 50k mentions

March: 40k mentions

February: 1673 mentions

Now that we have the context, this is how I interpret the information:

Cheetos gains 10k mentions from March to April.

Cheetos gains 38k mentions from Feb to March.
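The comparison above is plain month-over-month arithmetic. As a small sketch, here it is in Python, assuming the rounded figures 40k and 50k mean exactly 40,000 and 50,000; the function name `month_over_month` is my own.

```python
# Monthly mention counts from the example above.
# 40k and 50k are treated as exact values, which is an assumption.
mentions = {"February": 1673, "March": 40_000, "April": 50_000}

def month_over_month(series):
    """Return (month, absolute change, percent change) for each consecutive pair."""
    months = list(series)
    changes = []
    for prev, curr in zip(months, months[1:]):
        delta = series[curr] - series[prev]
        pct = delta / series[prev] * 100
        changes.append((curr, delta, pct))
    return changes

for month, delta, pct in month_over_month(mentions):
    print(f"{month}: {delta:+,} mentions ({pct:+.0f}%)")
# March: +38,327 mentions (+2291%)
# April: +10,000 mentions (+25%)
```

The percentage column adds context that the raw difference hides: the jump from February to March is far steeper than the one from March to April.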

We can tell that Cheetos got popular almost overnight, and that the word-of-mouth marketing is successful.

To conclude, we should continue this strategy and let everybody get immersed in Cheetos.

The idea is that data by itself is meaningless.

Most businesses share similar types of data but vary in scale. However, the information they acquire is different, because the process of transformation incorporates the context of each organization.

Step Three: Data Storage

Data storage is a key component, as businesses rely on it to preserve their data. Storage options vary in capacity and speed.
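As a minimal illustration of preserving processed data, the sketch below writes the monthly mention counts from the earlier example into SQLite using Python's standard library. The table name `mentions`, its columns, and the helper `store_mentions` are assumptions; any database would do.

```python
import sqlite3

def store_mentions(conn, rows):
    """Create the (hypothetical) mentions table if needed and upsert the rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS mentions (month TEXT PRIMARY KEY, total INTEGER)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO mentions (month, total) VALUES (?, ?)", rows
    )
    conn.commit()

# In-memory database for the demo. Pass a file path instead to persist the data.
conn = sqlite3.connect(":memory:")
store_mentions(conn, [("February", 1673), ("March", 40000), ("April", 50000)])

stored = conn.execute("SELECT month, total FROM mentions ORDER BY total").fetchall()
print(stored)
```

Using the month as the primary key means that re-running the load replaces a month's total instead of duplicating it.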

With the profusion of big data, storage vendors spring up and penetrate the market. This is a list of reliable vendors for your preference.

Web scraping tools like Octoparse provide a suite of functions: data extraction from dynamic websites, data cleaning with built-in Regex, data export into structured formats, and cloud storage.

The best part is that it can connect to your local database with little effort. Don’t drown in the data; ride it with an intelligent tool.