What Is a Website Ripper?

A website ripper is a software tool that downloads an entire website, or selected web pages, for offline use. It copies the site's content (HTML files, images, CSS, JavaScript, and other media) to your local drive, so you can view or reuse that content without an active internet connection.

Website rippers are popular because they let people browse a site's content offline, keep a backup copy of a site, or move a site to another server.

Website rippers are often used for web scraping, archiving, or creating offline versions of websites. They are helpful in situations where you need to access content without an internet connection, or for data extraction for further analysis. However, it’s important to note that website rippers should be used responsibly, as scraping content without permission may violate website terms of service or copyright laws.
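To make the mechanism above more concrete, here is a minimal Python sketch of what a ripper does under the hood, using only the standard library. It is an illustration under simplifying assumptions, not how HTTrack or any other tool below is implemented. It follows only links on the starting host, stops at a fixed link depth, decodes pages as UTF-8, ignores query strings and robots.txt, and does not run JavaScript, so (like the tools below) it misses dynamic content. The names `extract_links`, `local_path`, and `rip` are hypothetical.

```python
import os
import time
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import urlopen


class LinkExtractor(HTMLParser):
    """Collect raw href/src values (links, images, scripts, stylesheets)."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.links.append(value)


def extract_links(html, base_url):
    """Return absolute http(s) URLs found in a page, with #fragments removed."""
    parser = LinkExtractor()
    parser.feed(html)
    urls = []
    for link in parser.links:
        absolute, _fragment = urldefrag(urljoin(base_url, link))
        if urlparse(absolute).scheme in ("http", "https"):
            urls.append(absolute)
    return urls


def local_path(url, root):
    """Map a URL to a file under root/<host>/, mirroring the site's directories.

    Query strings are ignored, so pages that differ only by ?query overwrite each other.
    """
    parsed = urlparse(url)
    path = parsed.path
    if "." not in path.rsplit("/", 1)[-1]:
        # Treat extension-less paths such as "/" or "/docs" as folders.
        path = path.rstrip("/") + "/index.html"
    # Drop empty, "." and ".." segments so a URL cannot escape the output folder.
    parts = [p for p in path.split("/") if p not in ("", ".", "..")]
    return os.path.join(root, parsed.netloc, *parts)


def rip(start_url, root, max_depth=2, delay=1.0):
    """Breadth-first copy of same-host resources up to max_depth links from start_url."""
    host = urlparse(start_url).netloc
    queue = deque([(start_url, 0)])
    seen = {start_url}
    while queue:
        url, depth = queue.popleft()
        try:
            with urlopen(url, timeout=10) as response:
                body = response.read()
                content_type = response.headers.get_content_type()
        except OSError:
            continue  # skip resources that fail to download
        target = local_path(url, root)
        os.makedirs(os.path.dirname(target), exist_ok=True)
        with open(target, "wb") as f:
            f.write(body)
        # Only HTML pages are parsed for further links; other files are just saved.
        if content_type == "text/html" and depth < max_depth:
            page = body.decode("utf-8", errors="replace")
            for link in extract_links(page, url):
                if urlparse(link).netloc == host and link not in seen:
                    seen.add(link)
                    queue.append((link, depth + 1))
        time.sleep(delay)  # pause between requests to avoid overloading the server
```

For example, `rip("https://example.com/", "mirror", max_depth=1)` would save the start page and the same-host resources it links to under `mirror/example.com/`. The `max_depth` and `delay` parameters play the same role as the mirroring-depth and bandwidth settings that dedicated rippers expose.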

The following sections introduce the top 4 website rippers, along with the best web scraping tool for extracting website data in real time or on a schedule.

Best Web Scraping Tool to Extract Data Anytime

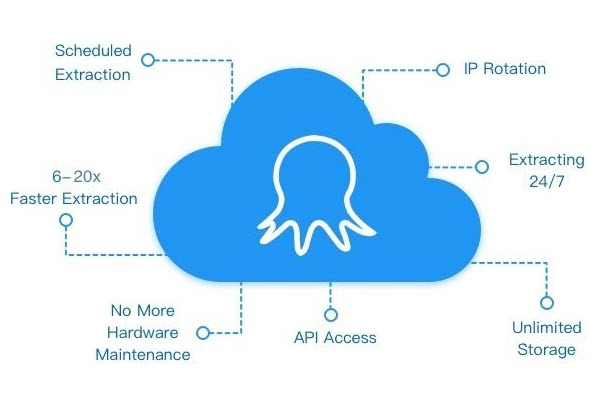

Before looking at these website rippers, you can first try the best web scraping tool, Octoparse, which extracts webpage data without any coding. It can scrape data in real time, giving you fresh data to track your competitors' changes. Octoparse also has a cloud-based scraping mode, so you can schedule scraping runs at any time.

To make scraping easier, Octoparse offers an AI-powered auto-detection feature that recognizes data fields and creates a crawler automatically. It also provides advanced features such as CAPTCHA bypassing, proxies, IP rotation, and XPath.

- Export website data directly to structured Excel, CSV, Google Sheets, or your own database.

- Scrape data easily with auto-detection; no coding skills required.

- Use preset scraping templates for popular websites to get data in a few clicks.

- Avoid getting blocked with IP proxies and an advanced API.

- Schedule data scraping at any time with the cloud service.

What's more, Octoparse provides preset scraping templates for popular websites, so you can collect data in a few clicks. You don't need to download anything; just preview the data sample and enter a few parameters. Click the link below for a free trial.

https://www.octoparse.com/template/email-social-media-scraper

Simple Steps to Scrape Website Data Using Octoparse

If you want to customize your scraping further, you can try the Octoparse desktop application for free. Follow the simple steps below, or visit the Octoparse Help Center for detailed tutorials.

Step 1: Download Octoparse and sign up for a free account.

Step 2: Open the webpage you want to scrape and copy its URL. Then paste the URL into Octoparse and start auto-scraping. Afterwards, customize the data fields in the preview mode or in the workflow panel on the right.

Step 3: Start scraping by clicking the Run button. You can then download the scraped data to your local device as an Excel file.

4 Best Website Rippers

1. HTTrack

HTTrack is a website copier that downloads a website from the Internet to a local directory, recursively rebuilding its directories and fetching HTML, images, and other files from the server to your computer. If you want to create a mirror of a website, this web ripper is a solid choice.

🥰Pros:

- Free and open-source

- The interface is user-friendly

- Users can configure the depth of mirroring, decide which files to download, and set bandwidth limits.

- Available for Windows, Linux, macOS, and Android.

- It preserves the relative link structure of the original site, which helps users navigate the mirrored site offline.

- Supports updating an existing mirrored site.

🤯Cons:

- Can consume a lot of bandwidth, especially when ripping large websites.

- Lacks ways to get around the anti-ripping measures used by some modern websites.

- Downloading entire websites may violate terms of service and copyright laws.

- Cannot rip dynamic content, so the offline copy may be incomplete.

- Although HTTrack still works, it is old and rarely updated, which can cause compatibility issues with newer websites and technologies.

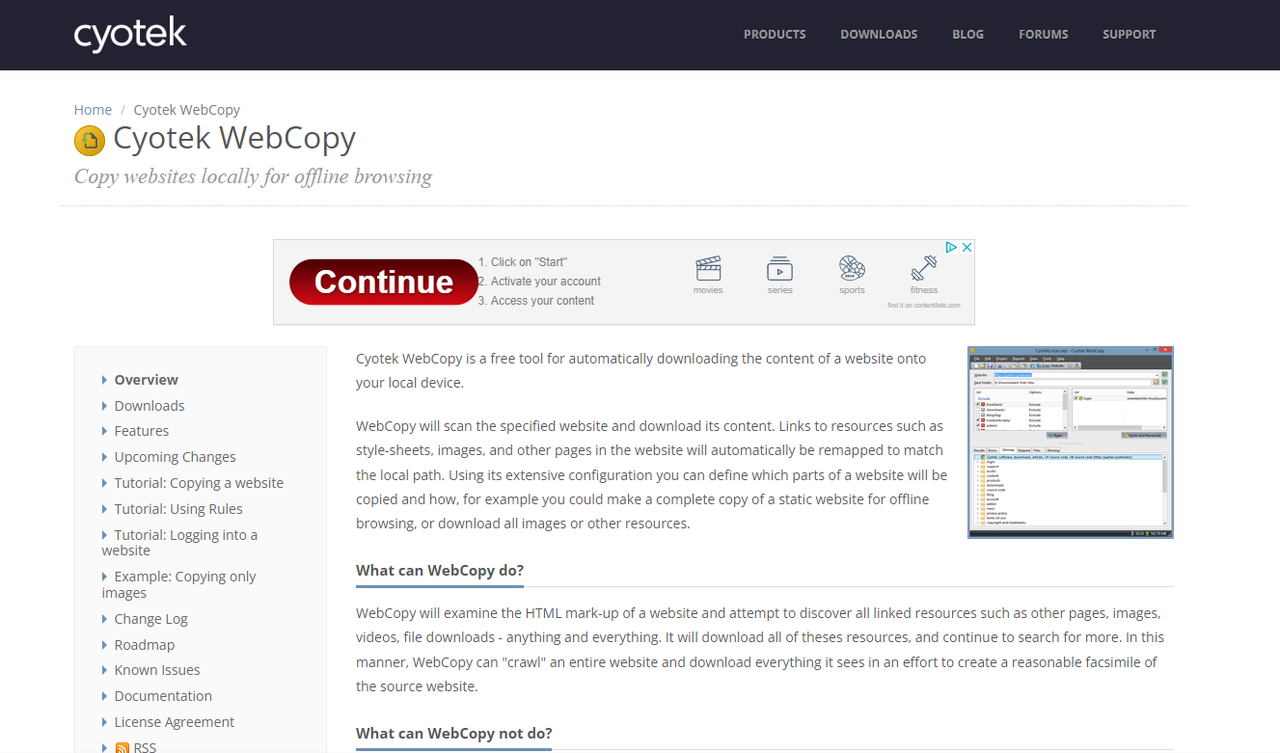

2. Cyotek WebCopy

Cyotek WebCopy is a free website ripper that downloads entire websites for offline browsing. It scans a website, copies its structure, and saves HTML, images, and other resources locally. WebCopy automatically remaps links so the offline copy can be browsed seamlessly, and it supports custom rules for selective downloading. This makes it well suited to archiving or mirroring websites.

🥰Pros:

- Free of charge, with a user-friendly interface.

- Users can specify which websites to rip and customize the scraping rules.

- It generates a report showing the structure of the ripped website and its files.

- The tool rewrites links to ensure that the offline copy is fully navigable.

- Supports a wide range of protocols, including HTTP, HTTPS, and FTP.

- More actively maintained and updated than HTTrack.

🤯Cons:

- Cannot scrape dynamic content generated by JavaScript or AJAX.

- Downloading large websites can affect system performance.

- Can consume significant bandwidth when ripping a large website, which is a problem for people with limited internet connections.

- Lacks ways to get around the anti-ripping measures used by some modern websites.

- Downloading entire websites may violate terms of service and copyright laws.
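The link rewriting described above (WebCopy's link remapping, and HTTrack's preserved relative link structure) essentially turns each same-site URL into a path relative to the saved page, so links keep working when files are opened from disk. The Python sketch below illustrates that idea under stated assumptions: it uses a hypothetical layout where extension-less paths are saved as folders containing `index.html`, it drops query strings, and it uses a regular expression that only matches double-quoted `href`/`src` attributes, where a real tool would use a proper HTML parser. `to_local`, `relative_href`, and `rewrite_links` are illustrative names, not either tool's API.

```python
import posixpath
import re
from urllib.parse import urljoin, urlparse

# Matches double-quoted href="..." and src="..." attributes only.
ATTR = re.compile(r'\b(href|src)="([^"]*)"')


def to_local(path):
    """Hypothetical layout: extension-less paths ("/", "/docs") become folders with index.html."""
    if "." not in path.rsplit("/", 1)[-1]:
        path = path.rstrip("/") + "/index.html"
    return path


def relative_href(page_url, link):
    """Rewrite one link so it still works when both pages are opened from disk."""
    page = urlparse(page_url)
    target = urlparse(urljoin(page_url, link))
    if target.netloc != page.netloc:
        return link  # off-site links and mailto: links are left unchanged
    page_dir = posixpath.dirname(to_local(page.path))
    rel = posixpath.relpath(to_local(target.path), page_dir)
    # Keep #fragments; query strings are dropped in this simplified layout.
    return rel + ("#" + target.fragment if target.fragment else "")


def rewrite_links(html, page_url):
    """Replace every matched href/src value with its offline-friendly relative form."""
    return ATTR.sub(
        lambda m: f'{m.group(1)}="{relative_href(page_url, m.group(2))}"', html
    )
```

For instance, on a page saved from `https://example.com/blog/post.html`, the link `/css/site.css` becomes `../css/site.css`, which resolves correctly inside the mirrored folder.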

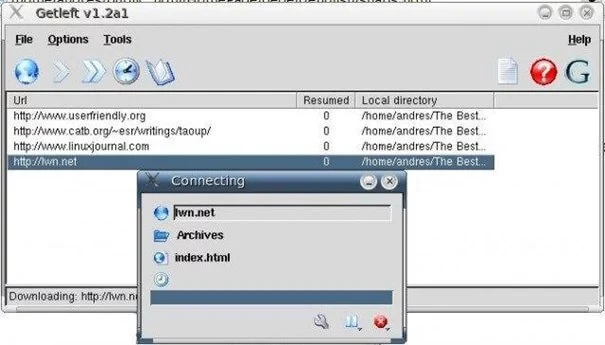

3. Getleft

Although its interface is outdated, this website ripper offers most of the features of the first two. What makes it stand out is its support for multiple languages, which makes it accessible to a broader audience.

🥰Pros:

- Free and open source

- Multi-language support

- Users can choose which files and types of content to download, such as only HTML files, images, or specific directories.

- Maintains the original site’s link structure.

- Runs on multiple operating systems, including Windows, macOS, and Linux.

🤯Cons:

- Outdated interface

- Cannot deal with dynamic content.

- No detailed analysis reports.

- No anti-blocking techniques.

4. SiteSucker

As the name suggests, this site grabber can literally "suck" a site from the internet by asynchronously copying its webpages, images, PDFs, style sheets, and other files to your local hard drive while duplicating the site's directory structure. Unlike the other tools, it is a Macintosh application designed exclusively for Mac users.

🥰Pros:

- It can download websites automatically.

- Users can customize download settings.

- It supports resuming interrupted downloads.

- Provides log and error reports.

- Actively maintained and updated

🤯Cons:

- Mac-only

- Cannot deal with dynamic content.

- Shares the other limitations of the tools mentioned above.
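SiteSucker's own implementation isn't described here, but resuming an interrupted download over HTTP generally relies on the `Range` request header: the client asks for the bytes starting where the local file ends, and a server that supports ranges replies with `206 Partial Content`. The Python sketch below (3.8+ for the `:=` operator) shows that general technique; `range_header` and `download_with_resume` are hypothetical names. It skips details a real tool would handle, such as sending `If-Range` with the file's ETag so that a file changed on the server is not stitched together from two versions.

```python
import os
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def range_header(bytes_on_disk):
    """Ask the server only for the bytes that are still missing."""
    return {"Range": f"bytes={bytes_on_disk}-"} if bytes_on_disk > 0 else {}


def download_with_resume(url, target, chunk_size=64 * 1024):
    """Continue a partial download of url into target, or start it from scratch."""
    done = os.path.getsize(target) if os.path.exists(target) else 0
    try:
        response = urlopen(Request(url, headers=range_header(done)), timeout=30)
    except HTTPError as err:
        if err.code == 416:  # Range Not Satisfiable: often the local copy is already complete
            return
        raise
    with response:
        # 206 Partial Content: the server honoured the range, so append to the file.
        # 200 OK: the server ignored the range and sent everything, so overwrite it.
        mode = "ab" if response.status == 206 else "wb"
        with open(target, mode) as f:
            while chunk := response.read(chunk_size):
                f.write(chunk)
```

Checking the status code matters because servers are allowed to ignore `Range`; blindly appending a full `200` response to a partial file would corrupt it.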

Final Thoughts

Traditional website rippers still have a place when you want to back up a website or analyze its structure and source files. For other purposes, no-code scraping software like Octoparse can meet your needs with its range of services and free you from the hassle of hunting for and gathering information.

Try Octoparse now to make your data scraping process smooth and easy.