As the world drowns in web information, mining this data offers great potential for valuable insights. Social media websites such as Facebook and Twitter generate huge volumes of real-time micro-blogs through continuous user interaction. These short posts are well worth mining because they are real-time reports from people taking part in unfolding events such as protests, pandemics, climate disasters, and crimes. Besides serving as an early cue for event detection, the collective sentiment measured from tweets can often reflect trends in long-running social phenomena such as elections or stock market movements.

There are several ways to extract the data we need. First, many websites provide public APIs that give access to their data sets, such as the Twitter REST API and the Facebook Graph API. By sending a properly formatted HTTP request, we get the data back in JSON format. Sometimes, however, certain data fields are not included in the public data sets, so the API cannot give people the complete set of data they need. In that case, we can write our own extractor in a language such as Python or Ruby. For non-programmers, though, the learning curve is steep.

I would therefore like to propose another approach: an automated web extractor, or scraper, that extracts web data automatically. Users are spared complex configuration and coding, and the extraction process becomes much faster and more efficient. There are many such tools available, including Octoparse, Import.io, and Mozenda. In this post, I'd like to share my own experience with one of them, Octoparse. Above all, choose a tool that fits your specific extraction requirements. The good news is that many of these tools are steadily becoming more powerful and easier to use.
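To give a sense of what writing your own extractor involves, here is a minimal sketch using only Python's standard-library `html.parser`. It assumes a hypothetical page layout in which each post sits in a `<p class="post">` element; the class `PostExtractor` and the function `extract_posts` are illustrative names. A real script would first download the page (for example with `urllib.request.urlopen`) instead of using an inline string.

```python
from html.parser import HTMLParser


class PostExtractor(HTMLParser):
    """Collects the text of every <p class="post"> element (hypothetical page layout)."""

    def __init__(self):
        super().__init__()
        self.posts = []          # extracted post texts, in document order
        self._in_post = False    # True while inside a matching <p> element
        self._buffer = []        # text fragments of the post being read

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; match exactly class="post"
        if tag == "p" and ("class", "post") in attrs:
            self._in_post = True
            self._buffer = []

    def handle_endtag(self, tag):
        # Closing </p> ends the current post; nested tags like <b> are ignored
        if tag == "p" and self._in_post:
            self.posts.append("".join(self._buffer).strip())
            self._in_post = False

    def handle_data(self, data):
        # Keep text only while inside a post, including text of nested tags
        if self._in_post:
            self._buffer.append(data)


def extract_posts(html):
    """Parse an HTML string and return the list of post texts."""
    parser = PostExtractor()
    parser.feed(html)
    parser.close()
    return parser.posts


if __name__ == "__main__":
    sample = """
    <div>
      <p class="post">Flooding reported downtown</p>
      <p class="ad">Buy now!</p>
      <p class="post">Roads closed near <b>Main St</b></p>
    </div>
    """
    print(extract_posts(sample))
```

Even this toy version has to track parser state by hand, and it breaks as soon as the page layout changes (for instance, `class="post featured"` would not match). That kind of maintenance is exactly what a point-and-click extraction tool hides from the user.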

Octoparse is a Windows-based web data extraction application. It can extract data from most public websites, across many fields and for a variety of uses, and it has become increasingly popular. It offers clear guidelines and comprehensive tutorials that substantially reduce the time needed to learn how to set up your own extraction tasks. Its user-friendly UI also means that even users without any coding skills can handle the extraction process fairly easily.

Octoparse provides three modes: Smart Mode, Wizard Mode, and Advanced Mode. Octoparse recommends that beginners start with Wizard Mode to learn the basics, while Advanced Mode is designed for more complicated, large-scale scraping needs. I strongly suggest finishing all the training sessions before moving on to Advanced Mode; I personally found them really helpful. To start a task in Advanced Mode, choose New Task (Advanced Mode) as shown below, and all the advanced features will become available.

The Advanced Mode workflow is rather intuitive. Each function comes with tips, and the icons and operations are straightforward and self-explanatory. Octoparse learns a series of human browsing actions, such as opening a web page in the built-in browser and pointing and clicking on web elements by selecting the related options in the pop-up designer window, and then simulates them. In this way, it turns repetitive manual extraction work into an automated process and retrieves the structured data users need.

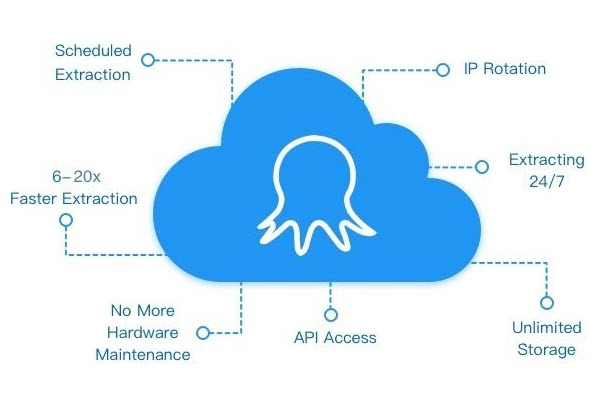

By rotating anonymous HTTP proxy servers, Octoparse can extract data from websites that use strict anti-scraping measures. With the Octoparse Cloud Service, more than 500 third-party proxies are used for automatic IP rotation. For local extraction, you can also manually add a list of external proxy addresses for automatic rotation. Because requests are spread across many IP addresses, data can be extracted with a much lower risk of getting your IP blocked.
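Octoparse handles rotation for you, but the underlying idea is simple round-robin selection. The sketch below, in plain Python, assumes a hypothetical list of proxy addresses (taken from the documentation-only range 203.0.113.0/24) and builds a `urllib` opener that routes each request through the next proxy in turn. `ProxyRotator` and `fetch` are illustrative names; this shows the technique, not Octoparse's implementation.

```python
import itertools
import urllib.request

# Hypothetical proxy addresses (203.0.113.0/24 is reserved for documentation).
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class ProxyRotator:
    """Round-robin rotation: each call hands out the next proxy in the list."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("need at least one proxy")
        self._cycle = itertools.cycle(proxies)

    def next_opener(self):
        """Return (proxy, opener), where opener sends HTTP/HTTPS traffic via proxy."""
        proxy = next(self._cycle)
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)


def fetch(rotator, url, timeout=10):
    """Fetch url through the next proxy (this makes a real network request)."""
    proxy, opener = rotator.next_opener()
    with opener.open(url, timeout=timeout) as response:
        return proxy, response.read()


if __name__ == "__main__":
    rotator = ProxyRotator(PROXIES)
    # Print the rotation order without touching the network.
    for _ in range(4):
        proxy, _opener = rotator.next_opener()
        print(proxy)
```

Round-robin is only the simplest policy; dropping proxies that fail or time out is a common refinement.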

Additionally, the Octoparse Cloud Service speeds up large-scale extraction by running tasks simultaneously on multiple cloud servers using distributed computing. To use the Cloud Service, you need to upgrade your account to Standard or Pro. Once upgraded, you can schedule cloud-based tasks, leave the work to the cloud, and come back for a complete set of data. Note that Standard accounts can run up to 6 cloud servers concurrently, while Pro accounts can run up to 14.
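As a rough local analogy only (not how the Octoparse Cloud Service works internally), the sketch below splits a job into one sub-task per URL and runs them on a thread pool whose size is capped by the plan's concurrency limit, using the 6 and 14 figures quoted above. Threads stand in for cloud servers, `time.sleep` stands in for fetching a page, and `run_subtasks`, `ConcurrencyTracker`, and the example URLs are hypothetical.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

# Concurrent-server limits per plan, as stated in the text.
PLAN_LIMITS = {"Standard": 6, "Pro": 14}


class ConcurrencyTracker:
    """Records the peak number of sub-tasks running at the same time."""

    def __init__(self):
        self._lock = threading.Lock()
        self.active = 0
        self.peak = 0

    def __enter__(self):
        with self._lock:
            self.active += 1
            self.peak = max(self.peak, self.active)
        return self

    def __exit__(self, *exc):
        with self._lock:
            self.active -= 1
        return False


def run_subtasks(urls, plan, work_seconds=0.01):
    """Run one hypothetical scraping sub-task per URL, at most PLAN_LIMITS[plan] at once."""
    tracker = ConcurrencyTracker()

    def scrape(url):
        with tracker:
            time.sleep(work_seconds)  # stand-in for fetching and parsing the page
            return f"data from {url}"

    # max_workers caps how many sub-tasks run concurrently
    with ThreadPoolExecutor(max_workers=PLAN_LIMITS[plan]) as pool:
        results = list(pool.map(scrape, urls))  # map preserves input order
    return results, tracker.peak


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(30)]
    results, peak = run_subtasks(urls, "Standard")
    print(len(results), "pages scraped; peak concurrency:", peak)
```

With 30 URLs and the Standard limit, the reported peak never exceeds 6, so the job runs in roughly five rounds rather than all at once.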